by jsendak | Feb 18, 2024 | DS Articles

This April 17-19, ShinyConf returns for its third edition as the largest virtual R Shiny conference. This year, we’re thrilled to present a lineup of keynotes that not only set the tone for the conference but also delve into the diverse applications and future of R Shiny.

From reproducible data science to sustainable leadership in open source, the keynotes at ShinyConf 2024 span a wide range of topics, each offering unique insights and practical knowledge that you don’t want to miss.

Why Attend?

ShinyConf 2024 is more than an event; it’s an experience. Attendees will:

- Connect Globally: Network with a diverse group of Shiny enthusiasts, fostering a collaborative environment for learning and innovation.

- Showcase Innovation: Discover the latest breakthroughs in R Shiny technology, featuring open-source packages, interoperability, and commercial applications.

- Expand Your Knowledge: Elevate your Shiny skills through practical insights, inspiring stories, and state-of-the-art applications.

Keynote Highlights

Eric Kostello – A Decade of Shiny in Action: Case Studies from Three Enterprises (Track: Shiny in Enterprise)

Eric Kostello, Executive Director of Data Science at Warner Bros. Discovery, will share his personal experiences with Shiny in the entertainment industry, showing how versatile it is and how it can drive insights across sectors. His case studies from three enterprises underscore Shiny’s role in data science and offer broadly applicable lessons.

Learn more about his keynote.

Tracy K Teal – It’s not just code: surviving and thriving as an open source maintainer (Track: Shiny for Good)

Dr. Tracy K. Teal has been the Open Source Program Director at RStudio/Posit, Executive Director of Dryad, and a co-founder and Executive Director of The Carpentries. She will explore the multifaceted role of open-source maintainers, emphasizing the importance of sustainable leadership for fostering healthy communities and effective code. Her keynote is a treasure trove of strategies, tips, and templates for anyone involved in open source, offering a roadmap to thrive in these vibrant ecosystems.

Learn more about her keynote.

George Stagg – Reproducible data science with webR and Shinylive (Track: Shiny in Life Sciences)

George Stagg is a Software Engineer working on the webR project as part of the Open Source Team at Posit Software PBC. He will address the critical issue of scientific reproducibility and show how WebAssembly, webR, and Shinylive can help. His talk covers the challenges researchers face in keeping their findings error-free and replicable across different computational environments, and presents an approach that aims to make scientific sharing more transparent.

Learn more about his keynote.

Tanya Cashorali – Beyond the Hype: Real-World Use Cases for AI in Shiny (Track: Shiny Innovation Hub)

Tanya Cashorali is the founder and CEO of TCB Analytics, a boutique data and analytics consultancy. She will bridge the gap between the hype and reality of AI in Shiny, presenting real-world use cases that highlight the practical applications of AI technologies. Her insights are drawn from a rich career in data science, providing attendees with actionable knowledge to leverage AI in their Shiny projects.

Learn more about her keynote.

Pedro Silva – Shaping the Next 10: The Bright Future of Shiny (Track: Shiny Innovation Hub)

Pedro Silva is the Learning & Development Lead at Appsilon. He will take us on a forward-looking journey into the next ten years of Shiny, highlighting its recent improvements and its expanding role in critical sectors like pharma and life sciences. His talk explores Shiny’s potential to transform data communication and decision-making.

Learn more about his keynote.

Conference Registration and Early Bird Specials

Registration for ShinyConf 2024 is still open, with early bird tickets available until March 1st.

Student tickets are also available for free, with full access to sessions, workshops and recordings after the event.

This is your chance to immerse yourself in the vibrant world of R Shiny, gaining insights from leading experts and connecting with a global community of enthusiasts.

Whether you’re a seasoned professional or new to the field, ShinyConf 2024 offers an enriching experience that spans the spectrum of data science and application development.

Register for the conference today!

Conclusion: Why Attend ShinyConf 2024?

ShinyConf 2024 is more than a conference; it’s a convergence of innovation, learning, and community. The keynotes are just the beginning—attendees will also benefit from practical workshops, diverse tracks, and invaluable networking opportunities.

Don’t miss out on the chance to expand your knowledge, connect with like-minded individuals, and explore the future of R Shiny together.

Register now to secure your spot at ShinyConf 2024 and take advantage of the early bird prices. Join us on this exciting journey into the world of R Shiny, where innovation meets data to create a brighter future.

The post appeared first on appsilon.com/blog/.

Continue reading: Get Ready for ShinyConf 2024: Explore Keynotes & Secure Your Spot

Long-term implications and future developments from ShinyConf 2024

The upcoming ShinyConf 2024 promises a rich program of knowledge exchange, networking opportunities, and innovation in R Shiny technology. As outlined in the original article, the event is a prime opportunity to hear from distinguished speakers who will explore the diverse applications and future of R Shiny across areas such as open source, enterprise, life sciences, and practical uses of AI.

Expanding global connections

ShinyConf gives Shiny enthusiasts a significant platform for networking globally. This environment promotes learning and collaborative innovation, further driving advances in R Shiny. In the long term, this continued interaction can accelerate the evolution of R Shiny and its applications, resulting in more sophisticated tools and solutions.

Shiny – A solution for diverse sectors

Speakers like Eric Kostello and George Stagg spotlight the versatility of Shiny in different settings, from the entertainment industry to scientific research. The use cases presented might inspire further exploration of Shiny across varied sectors. They also point toward a future where data science tools like Shiny become integral to decision-making in many industries.

Sustainable open source leadership

A crucial point highlighted by Dr. Tracy K. Teal is the indispensability of sustainable leadership in open source. The reflections she shares can steer open source maintainers towards better ways of community management and code governance. This could lead to healthier open source ecosystems and fuel future growth for projects like R Shiny.

Unveiling the future of Shiny

Pedro Silva’s look at what the next decade holds for Shiny is exciting, particularly as recent improvements have expanded Shiny’s role in sectors like pharma. The ideas and visions shared at ShinyConf 2024 could shape the development roadmap for future versions of the technology.

Actionable Advice

The key takeaways from the ShinyConf 2024 event offer significant value to any individual or organization interested in R Shiny. Here are a few pieces of advice on how best to capitalize on this opportunity:

- Register Early: Secure your spot and take advantage of early bird prices. This way, you not only save on registration costs but also ensure you do not miss out on this golden opportunity.

- Attend Keynotes and Sessions: The keynotes and other sessions hold a wealth of information from industry veterans. Attend these to gain unique insights and learn practical applications of R Shiny.

- Network: Take advantage of the global platform provided by ShinyConf to meet other Shiny enthusiasts and experts. Networking can lead to valuable relationships, collaborations, or even job opportunities.

- Participate in Workshops: Immersive workshops offer valuable hands-on experience. Take part in these sessions to better understand how R Shiny works, which can be particularly beneficial for beginners.

- Explore Exhibits: Take time out to explore exhibits showcasing innovative uses of R Shiny. Learning from these can help spur your creativity and give you ideas for new ways of utilizing the technology.

Conclusion

ShinyConf 2024 is more than just a conference; it is an experience that offers opportunities to learn, innovate, and network with industry leaders and enthusiasts. By attending, participants can deepen their understanding of R Shiny’s potential and plan effectively for its future. So, use this opportunity to build your R Shiny knowledge and engage with a global community.

Read the original article

by jsendak | Feb 18, 2024 | DS Articles

A Step-by-Step Approach to Unearthing Trends, Outliers, and Insights in your Data.

The Analysis and Impacts of Unearthing Data Trends, Outliers, and Insights

The rapid advancement of technology and the need for data-driven decisions have made it essential for companies, organizations, and individuals to understand data analysis. At its core, data analysis involves unearthing trends, outliers, and insights from data. This blog post dives into the long-term implications and future developments in the realm of data analysis, and offers actionable advice for making data-informed decisions.
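
To make the two core ideas concrete, here is a minimal Python sketch (not taken from the original article) that estimates a linear trend with ordinary least squares and flags outliers with the common 1.5 × IQR rule. The function names and the `k=1.5` multiplier are illustrative choices, and only the standard library is used.

```python
import statistics

def linear_trend(values):
    """Return the least-squares slope of values against their positions 0..n-1 (needs n >= 2)."""
    n = len(values)
    x_mean = (n - 1) / 2
    y_mean = statistics.fmean(values)
    num = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(values))
    den = sum((x - x_mean) ** 2 for x in range(n))
    return num / den

def iqr_outliers(values, k=1.5):
    """Return values lying more than k * IQR below Q1 or above Q3."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]

daily_sales = [10, 11, 12, 11, 10, 12, 11, 95]  # hypothetical data
print(linear_trend([1, 2, 3, 4]))  # → 1.0
print(iqr_outliers(daily_sales))   # → [95]
```

A positive slope indicates an upward trend; the IQR rule is robust because quartiles are barely moved by a single extreme value, unlike the mean and standard deviation.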

Long-term Implications

At a time when data is often called the new oil, data-driven decision making has gained tremendous importance. Understanding trends, outliers, and insights may lead to:

- New Business Strategies: These insights could drive proactive business strategies, innovative solutions, and modifications in existing practices.

- Efficiency Improvement: Problem solving and process improvement through data analysis can lead to enhanced operational efficiency.

- Customer Satisfaction: Data-driven personalization of user experiences may lead to increased customer satisfaction and retention.

Possible Future Developments

Data analysis is seen as one of the fastest-growing fields, so trends and needs are expected to shift over the next few years:

- AI-Based Analysis: As AI continues to advance, we might see heightened use of AI in data analysis for better accuracy and efficiency.

- Real-Time Analysis: Businesses might need real-time analysis to make instant decisions in critical situations.

- Data Privacy: The focus on data privacy will increase as organizations continue their quest for data-driven insights.
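
Real-time analysis usually means updating statistics as each value arrives instead of recomputing them over the full history. One common way to do that, shown here as an illustrative sketch rather than anything the article prescribes, is Welford’s online algorithm for the running mean and variance, combined with a z-score threshold. The class name, the `threshold=3.0` cutoff, and the `warmup` length are assumptions.

```python
import math

class StreamingZScore:
    """Flag values whose z-score against the running statistics exceeds a threshold."""

    def __init__(self, threshold=3.0, warmup=5):
        self.threshold = threshold
        self.warmup = warmup  # number of values to observe before flagging anything
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0  # running sum of squared deviations (Welford)

    def update(self, x):
        """Return True if x looks anomalous, then fold x into the running statistics."""
        flagged = False
        if self.n >= self.warmup:
            std = math.sqrt(self.m2 / (self.n - 1))  # sample standard deviation
            if std > 0 and abs(x - self.mean) / std > self.threshold:
                flagged = True
        # Welford's online update of mean and m2
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)
        return flagged

detector = StreamingZScore()
flags = [detector.update(v) for v in [10, 11, 9, 10, 11, 10, 50]]
```

This sketch folds flagged values into the running statistics as well; excluding them would keep a single spike from inflating the variance, at the cost of adapting more slowly to genuine level shifts.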

Actionable Advice

To act on the information that arises from data trends, outliers, and insights, consider these points:

- Invest in Tools: Automate the analysis process by investing in advanced data analysis tools. This could lead to more accurate findings and minimize manual errors.

- Experts are Essential: Train or hire experts in data analysis to make the most out of the data available.

- Focus on Data Security: Make data security and privacy a priority throughout data collection and analysis.
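
As one concrete privacy measure, direct identifiers can be pseudonymized before analysis. The sketch below, an illustration rather than a method from the article, replaces each identifier with a keyed HMAC-SHA256 digest so records belonging to the same person can still be joined without exposing the raw ID. The key shown is a placeholder; in practice it would come from a secrets store, and pseudonymized data may still count as personal data under regulations such as the GDPR.

```python
import hashlib
import hmac

# Placeholder key for illustration only; load a real key from a secrets manager.
SECRET_KEY = b"replace-with-a-secret-key"

def pseudonymize(identifier: str, key: bytes = SECRET_KEY) -> str:
    """Return a stable, keyed SHA-256 digest (hex) for an identifier."""
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()

# Hypothetical records: (email, purchase amount)
records = [("alice@example.com", 42.0), ("bob@example.com", 17.5)]
safe_records = [(pseudonymize(email), amount) for email, amount in records]
```

Using a keyed HMAC rather than a plain hash matters: without the key, an attacker could hash a list of known email addresses and match them against the digests.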

A solid understanding of data and its trends, outliers, and insights can help organizations create strategies that ultimately provide a competitive advantage. By anticipating future developments and acting on sound advice, businesses can use data analysis to the greatest benefit.

Therefore, the ability to derive trends, outliers, and insights from your data is a priceless tool. With the right approach and tools, companies can turn data into actionable knowledge while staying ahead in this data-driven era.

Read the original article

by jsendak | Feb 18, 2024 | DS Articles

30 Python libraries to solve most AI problems, including GenAI, data videos, synthetization, model evaluation, computer vision and more.

Comprehensive Analysis: Python Libraries, AI, and Future Development

The rise of Artificial Intelligence (AI) and machine learning technologies has resulted in an explosion of Python libraries designed to solve AI problems. As the original article notes, 30 Python libraries can address most AI challenges, with solutions for GenAI, data videos, synthetization, model evaluation, and computer vision. In this discussion, we will delve into the long-term implications of these technologies and what they might mean for future advancements.
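
Model evaluation is one of the problem areas mentioned above. Libraries such as scikit-learn provide ready-made metrics, but the arithmetic behind the most common classification metrics is simple enough to sketch in plain Python. The function below is illustrative and assumes binary labels encoded as 0 and 1.

```python
def precision_recall_f1(y_true, y_pred):
    """Compute precision, recall, and F1 for binary labels (1 = positive class)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)  # true positives
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)  # false negatives
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

# Hypothetical labels: 2 true positives, 1 false positive, 1 false negative
print(precision_recall_f1([1, 0, 1, 1, 0], [1, 0, 0, 1, 1]))
```

In this example all three metrics equal 2/3. Precision penalizes false alarms, recall penalizes misses, and F1 is their harmonic mean, so it stays low unless both are reasonably high.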

Long-term Implications and Future Developments

Python libraries geared towards AI problems are becoming increasingly sophisticated, offering multiple solutions for complex AI tasks. This proliferation not only makes AI-related problems more efficient to solve but also suggests greater potential for developing more complex and sophisticated AI models in the future.

“The rapid development and sophistication of Python libraries for AI are building up a path towards increasingly intricate artificial intelligence systems.”

Looking at these advancements in the long term, several implications and potential future developments stand out:

- Improved AI applications: With better libraries, developers will improve existing AI applications to create more efficient, intelligent, and versatile systems.

- Increased automation: As Python libraries become more advanced, they will facilitate higher levels of automation in various industries.

- Enhanced machine learning capabilities: Advanced libraries will allow developers to create machine learning models that learn and adapt better, enhancing their accuracy and effectiveness.

- New AI opportunities: These advancements will likely open doors for new AI applications in fields that have yet to fully utilize the technology.

Actionable Advice

Considering the long-term implications and possible future developments, professionals in this field should equip themselves with necessary skills and knowledge to stay competitive and relevant. Here are a few actionable steps you can take:

- Stay updated: Regularly check for updates on Python libraries and other AI development tools. Understanding the latest developments will equip you with knowledge to develop better AI solutions.

- Continuous learning: Always strive to learn more about AI technologies. Consider taking online courses, attending workshops, or reading up on recent studies and papers.

- Diversify skills: Aim to master different Python libraries that handle various AI tasks. The more versatile you are, the better you can build effective AI solutions.
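
As a small practical aid for staying updated, the standard library’s `importlib.metadata` module (Python 3.8+) can report which versions of your AI libraries are installed. The package names in the example are placeholders for whatever your project uses; to see which installed packages have newer releases, `pip list --outdated` compares them against the package index.

```python
from importlib import metadata

def installed_versions(packages):
    """Map each distribution name to its installed version, or None if it is absent."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions

# Placeholder package names; substitute the libraries your project depends on.
for name, version in installed_versions(["numpy", "scikit-learn", "torch"]).items():
    print(f"{name}: {version or 'not installed'}")
```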

In conclusion, the evolution of Python libraries is driving significant advancements in AI. By staying updated, acquiring more knowledge, and diversifying their skills, practitioners can tap into these advancements and contribute positively to the field of AI.

Read the original article

by jsendak | Feb 18, 2024 | AI

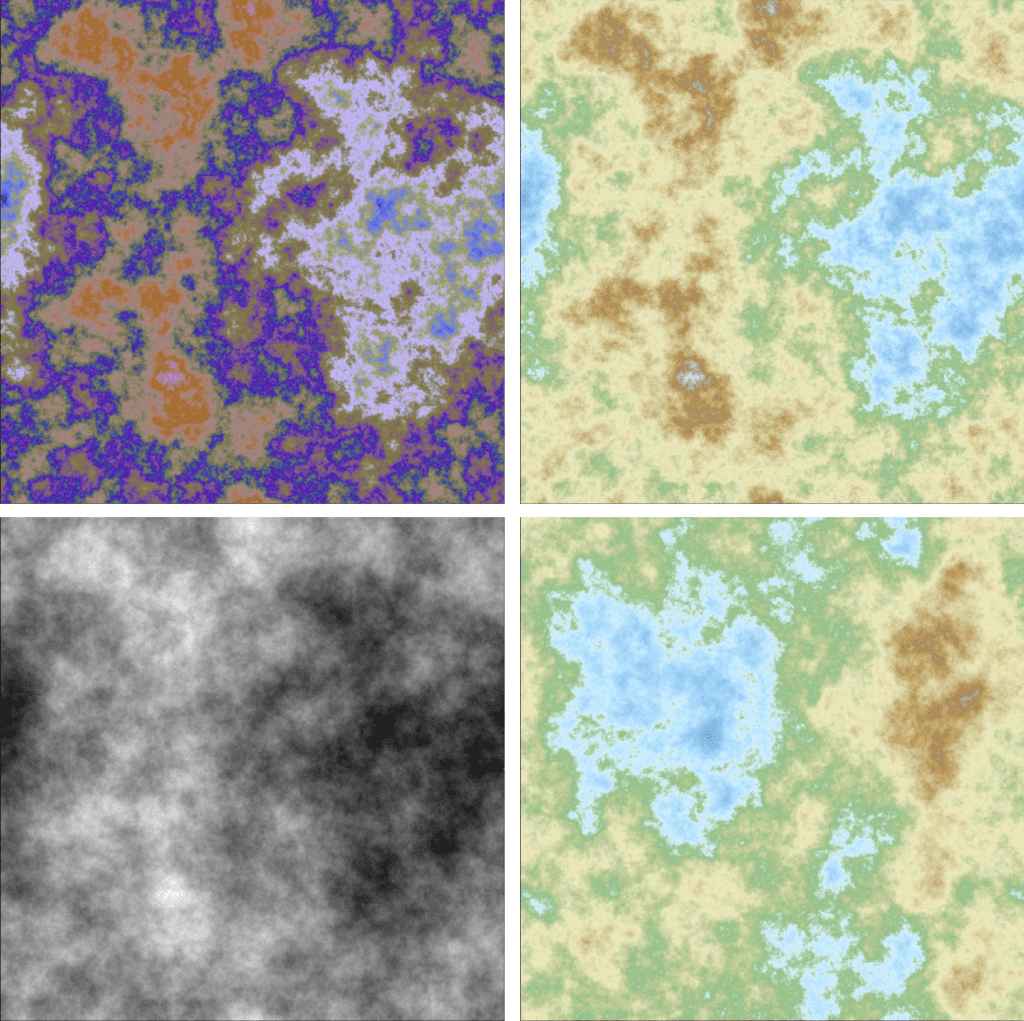

arXiv:2402.10115v1. Abstract: In this study, we tackle a modern research challenge within the field of perceptual brain decoding, which revolves around synthesizing images from EEG signals using an adversarial deep learning framework. The specific objective is to recreate images belonging to various object categories by leveraging EEG recordings obtained while subjects view those images. To achieve this, we employ a Transformer-encoder based EEG encoder to produce EEG encodings, which serve as inputs to the generator component of the GAN network. Alongside the adversarial loss, we also incorporate perceptual loss to enhance the quality of the generated images.

Title: “Advancing Perceptual Brain Decoding: Synthesizing Images from EEG Signals with Adversarial Deep Learning”

Introduction:

In the realm of perceptual brain decoding, a fascinating research challenge has emerged: synthesizing images from EEG signals with an adversarial deep learning framework. This study aims to recreate images from diverse object categories using EEG recordings obtained while subjects view those very images. To do so, the researchers employ a Transformer-encoder based EEG encoder, which produces EEG encodings that serve as inputs to the generator component of the GAN network. In addition to the adversarial loss, the study incorporates a perceptual loss to further improve the quality of the generated images. This article examines the core themes of the study, the advances it represents in perceptual brain decoding, and its potential implications for fields such as neuroscience and image synthesis.

Exploring the Power of Perceptual Brain Decoding: Synthesizing Images from EEG Signals

Advancements in perceptual brain decoding have opened possibilities that were once confined to science fiction. In a recent study, researchers tackle the challenge of synthesizing images from EEG signals with an approach that combines adversarial deep learning and perceptual loss. This research opens new avenues for understanding the complex relationship between the human brain and visual perception.

The primary objective of this study was to recreate images belonging to different object categories by utilizing EEG recordings obtained while subjects viewed those images. To achieve this, the research team employed a Transformer-encoder based EEG encoder, a sophisticated neural network model capable of encoding EEG data effectively.
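
The abstract does not spell out the encoder’s internals, so the following is only a toy illustration of the core mechanism a Transformer encoder applies to EEG: scaled dot-product self-attention over time steps, followed by mean-pooling into a single encoding vector. It assumes each time sample (a vector of channel readings) is one token and uses random, untrained weights; a real encoder would add positional encodings, multiple heads, feed-forward layers, normalization, and learned parameters. All function names and sizes here are hypothetical.

```python
import math
import random

def matmul(a, b):
    """Multiply an (n x k) matrix by a (k x m) matrix, both given as lists of rows."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def softmax(row):
    """Numerically stable softmax of a list of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x, wq, wk, wv):
    """Single-head scaled dot-product self-attention over the rows (time steps) of x."""
    q, k, v = matmul(x, wq), matmul(x, wk), matmul(x, wv)
    d_k = len(wq[0])
    k_t = [list(col) for col in zip(*k)]  # transpose keys: (d_k x time)
    scores = matmul(q, k_t)               # (time x time) similarity matrix
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v)             # each step becomes a weighted mix of all steps

def encode_eeg(eeg, d_model=8, seed=0):
    """Hypothetical toy encoder: project channels, attend over time, add residual, mean-pool."""
    rng = random.Random(seed)

    def rand_matrix(rows, cols):
        return [[rng.gauss(0, 1 / math.sqrt(rows)) for _ in range(cols)] for _ in range(rows)]

    n_channels = len(eeg[0])
    tokens = matmul(eeg, rand_matrix(n_channels, d_model))  # (time x d_model)
    attended = self_attention(tokens, rand_matrix(d_model, d_model),
                              rand_matrix(d_model, d_model), rand_matrix(d_model, d_model))
    out = [[t + a for t, a in zip(tr, ar)] for tr, ar in zip(tokens, attended)]
    return [sum(col) / len(out) for col in zip(*out)]  # average over time steps

# Hypothetical recording: 16 time steps x 4 channels of synthetic values.
rng = random.Random(1)
eeg = [[rng.gauss(0, 1) for _ in range(4)] for _ in range(16)]
encoding = encode_eeg(eeg)
print(len(encoding))  # → 8
```

Because every time step attends to every other, the attention weights let distant parts of the recording influence each other directly, which is the property that makes Transformers attractive for long EEG sequences.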

At the heart of this approach lies a generative adversarial network (GAN), a powerful deep learning architecture consisting of a generator and a discriminator. The generator component takes EEG encodings produced by the Transformer-encoder as inputs and synthesizes images based on this information. The discriminator then evaluates the generated images, providing feedback to the generator to refine its output iteratively.
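
The adversarial part of the objective can be sketched with the standard binary cross-entropy formulation of GAN losses, shown here with the widely used non-saturating generator loss. The abstract does not say which variant the authors use. In this sketch, `d_real` and `d_fake` are hypothetical lists of discriminator output probabilities for real images and for images generated from EEG encodings.

```python
import math

EPS = 1e-12  # guards against log(0)

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: push D(real) toward 1 and D(generated) toward 0."""
    real_term = -sum(math.log(max(p, EPS)) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(max(1 - p, EPS)) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Non-saturating generator loss: push D(generated) toward 1."""
    return -sum(math.log(max(p, EPS)) for p in d_fake) / len(d_fake)

# A confused discriminator (0.5 everywhere) gives D loss 2*ln 2 and G loss ln 2.
d_loss = discriminator_loss([0.5, 0.5], [0.5, 0.5])
g_loss = generator_loss([0.5, 0.5])
```

The two losses pull in opposite directions: lowering the discriminator loss means separating real from generated images more confidently, which raises the generator loss until the generator’s outputs become harder to tell apart from real stimuli.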

However, simply training the GAN using adversarial loss is often insufficient to generate high-quality images that accurately depict the intended object categories. To address this limitation, the researchers introduced perceptual loss into the framework. Perceptual loss measures the difference between the features extracted from the generated image and the original image, ensuring that the synthesized images capture essential perceptual details.

The incorporation of perceptual loss is intended to improve the quality of the generated images, making them more realistic and faithful to the original visual stimuli. By combining adversarial loss and perceptual loss within the GAN framework, the researchers aim to recreate meaningful images from EEG signals alone.
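
A perceptual loss compares images in a feature space rather than pixel by pixel. Published systems typically take these features from a pretrained network such as VGG; the abstract does not state which extractor this study uses. The sketch below substitutes a hypothetical hand-written feature function (2×2 average pooling plus horizontal gradients) so it runs with only the standard library, and adds the result to a non-saturating adversarial term. The weight `lam=10.0` is an arbitrary illustrative value, not one taken from the paper.

```python
import math

def toy_features(image):
    """Hypothetical stand-in for a pretrained network's feature maps.

    Returns 2x2 average-pooled intensities followed by horizontal gradients.
    """
    h, w = len(image), len(image[0])
    pooled = [
        (image[i][j] + image[i][j + 1] + image[i + 1][j] + image[i + 1][j + 1]) / 4
        for i in range(0, h - 1, 2)
        for j in range(0, w - 1, 2)
    ]
    grads = [image[i][j + 1] - image[i][j] for i in range(h) for j in range(w - 1)]
    return pooled + grads

def perceptual_loss(generated, target, features=toy_features):
    """Mean squared difference between the feature vectors of two images."""
    fg, ft = features(generated), features(target)
    return sum((a - b) ** 2 for a, b in zip(fg, ft)) / len(fg)

def generator_objective(d_fake, generated, target, lam=10.0):
    """Adversarial term for one generated image plus a weighted perceptual term."""
    adversarial = -math.log(max(d_fake, 1e-12))
    return adversarial + lam * perceptual_loss(generated, target)

# Hypothetical 4x4 grayscale images: the generated edge sits one pixel too far left.
target = [[0.0, 0.0, 1.0, 1.0]] * 4
generated = [[0.0, 1.0, 1.0, 1.0]] * 4
print(perceptual_loss(target, target))  # → 0.0
print(generator_objective(0.5, generated, target))
```

The weight `lam` sets the trade-off: a larger value favors fidelity to the stimulus image’s structure, while a smaller one leaves more room for the adversarial term to push toward realistic-looking textures.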

This research has potentially far-reaching implications. First, it sheds light on whether human perception can be decoded from brain activity, offering insights into the mechanisms behind visual processing. Additionally, the ability to synthesize images from EEG signals could prove valuable in fields such as neuroimaging, cognitive neuroscience, and even virtual reality.

One speculative application of this technology is assistive technology for individuals with visual impairments. EEG-based decoding, however, reads out brain activity rather than producing images in the brain, so any such use would depend on substantial advances beyond this study. If realized, such technology could give visually impaired individuals new ways to perceive and interact with the world.

The Future of Perceptual Brain Decoding

While this study represents a significant leap forward in perceptual brain decoding, it is essential to recognize that further research is necessary to fully unlock the potential of this technology. Challenges such as improving the resolution and fidelity of generated images, expanding the range of object categories that can be synthesized, and enhancing the interpretability of encoding models remain to be tackled.

Future studies could explore novel approaches, such as combining EEG signals with other neuroimaging techniques like functional magnetic resonance imaging (fMRI), to provide a more comprehensive and accurate understanding of neural activity during perception. Furthermore, leveraging transfer learning and generative models trained on massive datasets could enhance the capabilities of EEG-based image synthesis.

As we delve into the uncharted territory of perceptual brain decoding, we must embrace interdisciplinary collaborations and innovative thinking. By pushing the boundaries of our understanding, we can pave the way for a future where the human mind’s intricacies are tangibly accessible, unlocking new realms of possibility. The journey towards bridging perception and artificial intelligence has only just begun.

The paper arXiv:2402.10115v1 presents a novel approach to the field of perceptual brain decoding by using an adversarial deep learning framework to synthesize images from EEG signals. This research challenge is particularly interesting as it aims to recreate images belonging to various object categories by leveraging EEG recordings obtained while subjects view those images.

One of the key components of this approach is the use of a Transformer-encoder based EEG encoder. Transformers have gained significant attention in recent years due to their ability to capture long-range dependencies in sequential data. By applying a Transformer-based encoder to EEG signals, the authors aim to extract meaningful representations that can be used as inputs to the generator component of the GAN network.

The integration of an adversarial loss in the GAN framework is a crucial aspect of this research. Adversarial training has been widely successful in generating realistic images, and its application to EEG-based image synthesis adds a new dimension to the field. By training the generator and discriminator components of the GAN network against each other, the authors aim to improve the quality of the generated images through iterative refinement.

In addition to the adversarial loss, the authors also incorporate a perceptual loss in their framework. This is an interesting choice, as perceptual loss focuses on capturing high-level features and structures in images. By incorporating perceptual loss, the authors aim to enhance the quality of the generated images by ensuring that they not only resemble the target object categories but also capture their perceptual characteristics.

Overall, this study presents a compelling approach to address the challenge of synthesizing images from EEG signals. The use of a Transformer-based EEG encoder and the integration of adversarial and perceptual losses in the GAN framework demonstrate a well-thought-out methodology. Moving forward, it would be interesting to see how this approach performs on a larger dataset and in more complex scenarios. Additionally, exploring potential applications of EEG-based image synthesis, such as in neurorehabilitation or virtual reality, could open up new avenues for research and development in this field.

Read the original article