by jsendak | Oct 31, 2024 | DS Articles

Pursue career success with big savings on edX programs using code EDXSUCCESS24 at checkout.

Long-Term Implications and Future Developments of Online Education

Technology has revolutionized education in many ways, bringing learning opportunities right at the click of a button. The recent promotional offer shared by edX, using the code EDXSUCCESS24, emphasizes the growing popularity of online education platforms. This new wave in education has great potential long-term societal and economic implications. Also, there are innovative developments expected in the future.

Long-Term Implications of Online Education

- Greater accessibility and inclusivity: With online platforms such as edX, education is no longer limited to traditional classrooms and those who can afford to attend universities. This creates a far more inclusive learning environment catering to individuals worldwide.

- Flexible learning: Online education offers the flexibility of learning at one’s own pace in their chosen environment. This is ideal for adult learners, working professionals, and students who prefer self-paced learning.

- Cost-effective education: Promotional deals like these offered by edX make quality education more affordable. In the long run, this could heavily influence the democratisation of education.

- Career enhancement: Online platforms provide a great opportunity for upskilling or reskilling. It aids individuals in advancing their careers or transitioning into a new field.

Future Developments

- Innovative teaching methods: Future developments in online education may include increased use of virtual reality, augmented reality, and AI in teaching methods.

- Personalized learning: Advanced analytics and adaptive learning systems could tailor education to individual needs and preferences, making learning more effective.

- Collaboration: Future developments might focus on improving interaction, allowing students to collaborate, brainstorm and communicate virtually.

Actionable Advice

Here is some advice that learners can leverage to advantageously utilise offers like EDXSUCCESS24.

- Research about the course and its content before enrolling. Make sure it aligns with your career aspirations or learning goals.

- Manage your time wisely. Although online education provides flexibility, maintaining discipline is essential for successful completion.

- Utilize collaborative features like discussion forums. They provide an excellent platform for asking questions, exchanging knowledge, and networking.

Indeed, online platforms like edX are not just transforming the realm of education but also making it more accessible, interactive, affordable – in essence, democratizing it. As we move further into this digital age, virtual learning will continue to evolve and enhance the way we learn.

Read the original article

by jsendak | Oct 31, 2024 | DS Articles

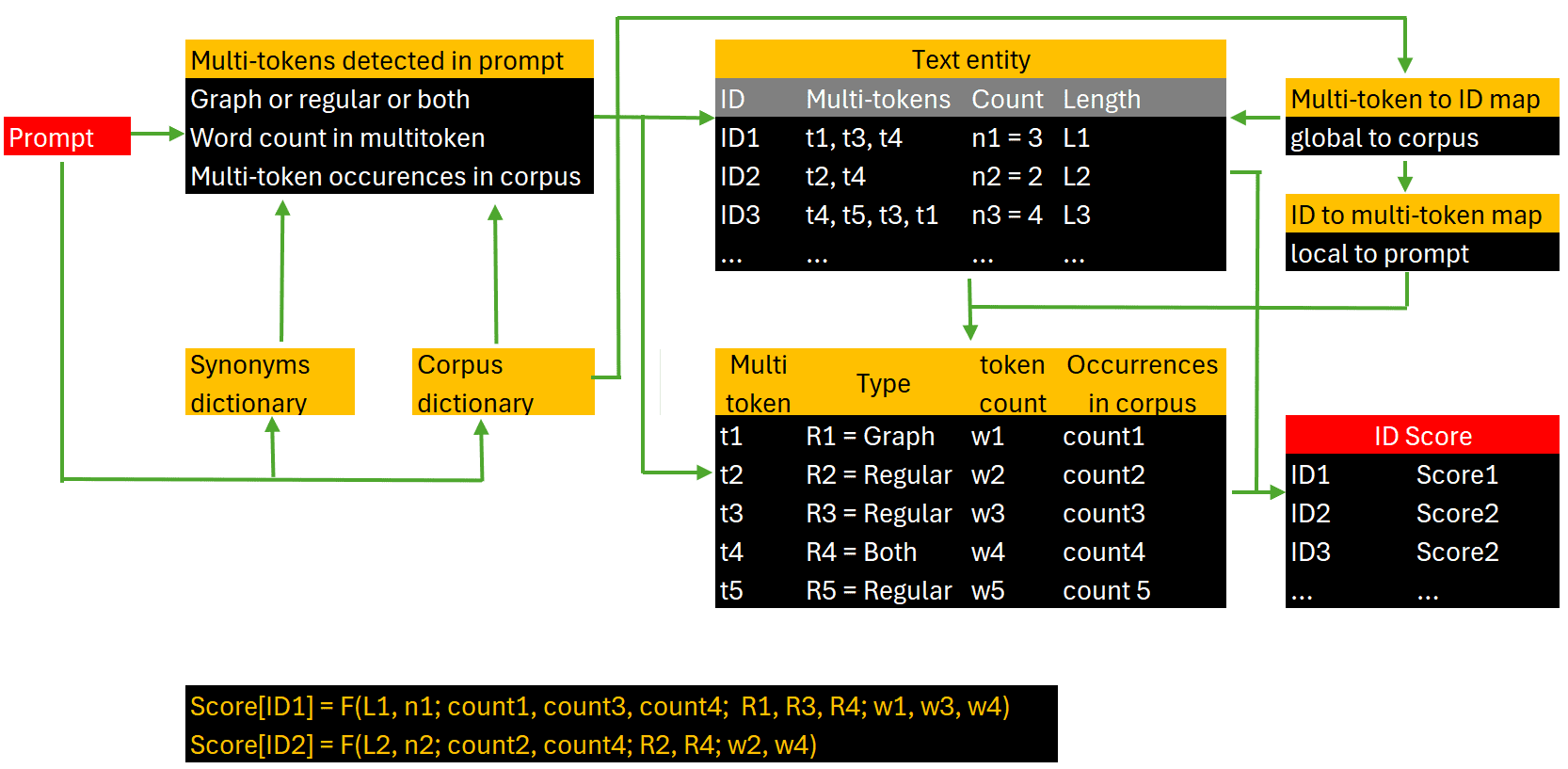

LLM Chunking, Indexing, Scoring and Agents, in a Nutshell. The new PageRank of RAG/LLM. With details on building relevancy scores.

Unpacking the Future of RAG/LLM’s New PageRank: Relevancy Scores, Chunking, Indexing, and Scoring

The recent move of introducing a new PageRank system by RAG/LLM is a significant development in the evolving landscape of digital marketing. Unraveling the importance of chunking, indexing, scoring, and agents in this mix is crucial for understanding its long-term implications and future potential.

Understanding the New PageRank of RAG/LLM

The new PageRank system represents a shift in the way digital content is ranked and viewed online. It’s characterized by an increased emphasis on relevancy scores. This shift towards relevancy is likely to result in more personalized and engaging experiences for internet users, making it more important than ever for content creators to match their offerings to their audience’s interests and needs.

Long-term Implications

Impact on Digital Marketing

The new PageRank system, with its emphasis on relevancy scores, represents a significant shift in the way businesses must approach digital marketing. Rather than simply aiming for high traffic volumes, the focus now should be on creating high-quality, relevant content that meets user needs and creates engagement.

Dominance of Data-Driven Strategies

This shift towards greater relevance also implies an increased reliance on big data and analytics. As the algorithm becomes more sophisticated, businesses will need to become more data-oriented and develop strategies around data insights.

Possible Future Developments

Scoring System Enhancement

With the advancements in technology and rise in data-driven marketing tactics, it’s predictable that RAG/LLM will continue to refine their scoring system. Enhanced capabilities may include real-time updates of scores or predictive scoring based on historical data.

Expanded Role of Agents

As the web becomes more complex, agents – applications that automatically gather and process information – will play an increasingly important role. Their duties may expand to include more comprehensive data gathering, processing, presentation, and decision-making, facilitating a more customized web experience.

Actionable Advice

- Focus on Relevance: In line with RAG/LLM’s shift towards relevancy, businesses should prioritize creating content that is relevant and valuable to their target audience.

- Invest in Data Analysis: As the importance of relevancy scoring becomes the norm, having a solid understanding of data analytics and interpretation will be crucial to business success.

- Leaning on Automation: Invest in sophisticated automation tools and agents. They can conduct efficient data gathering and conversion, facilitating deeper insights and enabling businesses to respond to the fast-paced world of digital marketing.

In conclusion, building a successful online strategy in the era of the new PageRank requires a solid understanding of relevancy scoring and the effective use of data and tools like agents. By staying ahead of these trends, businesses can fully leverage the power of digital marketing to reach and engage their target audience.

Read the original article

by jsendak | Oct 31, 2024 | AI

In this paper, we introduce Auto-Intent, a method to adapt a pre-trained large language model (LLM) as an agent for a target domain without direct fine-tuning, where we empirically focus on web…

In the realm of natural language processing, the ability to adapt pre-trained language models for specific domains without the need for extensive fine-tuning has been a long-standing challenge. However, in a groundbreaking development, a team of researchers has introduced Auto-Intent, a novel method that enables the adaptation of a pre-trained large language model (LLM) as an agent for a target domain, specifically focusing on the web. This paper delves into the empirical exploration of Auto-Intent, shedding light on its potential to revolutionize how language models can be seamlessly integrated into various domains without the resource-intensive process of direct fine-tuning.

Exploring the Power of Auto-Intent: Revolutionizing Language Models

Language models have come a long way in transforming the field of natural language processing. From helping us write emails to generating coherent text, these models have proven to be a force to reckon with. However, the current challenge lies in adapting these models to specific domains without the need for direct fine-tuning. In this article, we introduce Auto-Intent, a groundbreaking method that aims to revolutionize the way we use large language models (LLMs) in target domains.

The Need for Adaptation

When utilizing language models in specific domains, traditional approaches require fine-tuning the model on a dataset from the target domain. Although effective, this process can be time-consuming, resource-intensive, and may not be feasible for every domain. Additionally, constant updates and evolving target domains can pose challenges in keeping the model up-to-date and relevant.

Auto-Intent provides a solution to these problems by eliminating the need for direct fine-tuning. Instead, it utilizes the concept of intent recognition to enable fine-grained adaptation of LLMs.

The Power of Intent Recognition

Intent recognition is the process of identifying the intention behind a user’s input. It has been widely used in applications such as chatbots, voice assistants, and recommendation systems. Auto-Intent leverages this power by training an intent recognition model on a dataset from the target domain.

The intent recognition model learns to identify the specific intents of user queries or inputs in the target domain. By extracting this information, Auto-Intent understands the underlying themes and concepts of the target domain, enabling the LLM to generate contextually relevant responses.

Improving Adaptability with Auto-Intent

Once the intent recognition model is trained, Auto-Intent utilizes it to fine-tune the LLM without direct modification. Here’s how it works:

- The intent recognition model analyzes the user’s input and identifies its intent within the target domain.

- Auto-Intent then selects the most suitable adaptation strategy for the identified intent.

- The LLM undergoes a contextual adaptation process based on the selected strategy.

- The adapted LLM generates a response that aligns with the user’s intent, specific to the target domain.

This process allows the LLM to adapt dynamically to various intents within the target domain without the need for manual fine-tuning. Furthermore, Auto-Intent can handle evolving target domains by introducing updates to the intent recognition model, ensuring long-term adaptability.

Potential Applications and Benefits

Auto-Intent opens doors to a wide range of applications and benefits:

- Customer Support: An LLM adapted with Auto-Intent can provide contextually relevant responses to customer queries in various industries.

- Content Generation: Content creators can leverage Auto-Intent to generate domain-specific content with ease.

- Virtual Assistants: Personal voice assistants can adapt to user preferences and intents more effectively using Auto-Intent.

Conclusion

Auto-Intent paves the way for a new era in language model adaptation. By harnessing the power of intent recognition, it eliminates the need for direct fine-tuning, saving time, resources, and enabling dynamic adaptability. With its potential applications in customer support, content generation, and virtual assistants, Auto-Intent promises to revolutionize the way we interact with language models in target domains.

“Auto-Intent: Your gateway to contextually adaptive language models.”

domain adaptation. The authors propose a novel approach called Auto-Intent, which enables the adaptation of a pre-trained large language model (LLM) as an agent for a specific target domain, such as the web domain, without the need for direct fine-tuning.

The ability to adapt a pre-trained LLM to a specific domain is crucial for real-world applications. Fine-tuning a large language model on domain-specific data can be time-consuming and computationally expensive. Furthermore, fine-tuning may require a large amount of labeled data, which may not always be available for a target domain.

Auto-Intent addresses these challenges by leveraging intent classification, a fundamental task in natural language understanding. Intent classification involves identifying the intention or purpose behind a user’s query or statement. By using intent classification, Auto-Intent is able to adapt a pre-trained LLM to a target domain without the need for fine-tuning.

The authors propose a two-step process for domain adaptation using Auto-Intent. First, they train an intent classifier on a small amount of labeled data from the target domain. The intent classifier is used to identify the intent behind user queries in the target domain. This step allows the system to understand the specific context and requirements of the target domain.

In the second step, the pre-trained LLM is adapted to the target domain using the intent classifier. The authors propose a method called “intent masking,” where the intent label is used to mask out irrelevant parts of the input during adaptation. By focusing on the intent of the user query, the pre-trained LLM can be effectively adapted to the target domain without the need for direct fine-tuning.

The experimental results presented in the paper demonstrate the effectiveness of Auto-Intent for domain adaptation in the web domain. The authors compare their method to different baselines, including fine-tuning on target domain data and using a pre-trained LLM without adaptation. The results show that Auto-Intent achieves comparable or even better performance than these baselines, while requiring significantly less labeled data and computational resources.

One potential limitation of Auto-Intent is its reliance on intent classification. If the intent classifier fails to accurately identify the intent behind user queries, it may lead to suboptimal adaptation of the pre-trained LLM. However, the authors address this issue by proposing a self-training approach, where the intent classifier is iteratively improved using pseudo-labeled data from the target domain. This iterative process helps to mitigate the impact of potential errors in intent classification.

In conclusion, Auto-Intent provides a promising approach for domain adaptation of pre-trained LLMs without direct fine-tuning. By leveraging intent classification and intent masking, Auto-Intent enables the adaptation of a pre-trained LLM to a target domain with minimal labeled data and computational resources. Further research could explore the application of Auto-Intent to other domains and investigate its performance in scenarios with limited labeled data availability.

Read the original article

by jsendak | Oct 31, 2024 | Computer Science

arXiv:2410.22350v1 Announce Type: new

Abstract: In this paper, we propose a quality-aware end-to-end audio-visual neural speaker diarization framework, which comprises three key techniques. First, our audio-visual model takes both audio and visual features as inputs, utilizing a series of binary classification output layers to simultaneously identify the activities of all speakers. This end-to-end framework is meticulously designed to effectively handle situations of overlapping speech, providing accurate discrimination between speech and non-speech segments through the utilization of multi-modal information. Next, we employ a quality-aware audio-visual fusion structure to address signal quality issues for both audio degradations, such as noise, reverberation and other distortions, and video degradations, such as occlusions, off-screen speakers, or unreliable detection. Finally, a cross attention mechanism applied to multi-speaker embedding empowers the network to handle scenarios with varying numbers of speakers. Our experimental results, obtained from various data sets, demonstrate the robustness of our proposed techniques in diverse acoustic environments. Even in scenarios with severely degraded video quality, our system attains performance levels comparable to the best available audio-visual systems.

Expert Commentary: A Quality-Aware End-to-End Audio-Visual Neural Speaker Diarization Framework

This paper presents a novel approach to audio-visual speaker diarization, which is the process of determining who is speaking when in an audio or video recording. Speaker diarization is a crucial step in various multimedia information systems, such as video conferencing, surveillance systems, and automatic transcription services. This research proposes a quality-aware end-to-end framework that leverages both audio and visual information to accurately identify and separate individual speakers, even in challenging scenarios.

The proposed framework is multi-disciplinary in nature, combining concepts from audio processing, computer vision, and deep learning. By taking both audio and visual features as inputs, the model is able to capture a broader range of information, leading to more accurate speaker discrimination. This multi-modal approach allows the system to handle situations with overlapping speech, where audio-only methods may struggle.

One key aspect of this framework is the quality-aware audio-visual fusion structure. It addresses signal quality issues that commonly arise in real-world scenarios, such as noise, reverberation, occlusions, and unreliable detection. By incorporating quality-aware fusion, the system can mitigate the negative effects of audio and video degradations, leading to more robust performance. This is particularly important in applications where the video quality may be compromised, as the proposed framework can still perform at high levels.

Another notable contribution of this research is the use of a cross attention mechanism applied to multi-speaker embedding. This mechanism enables the network to handle scenarios with varying numbers of speakers. This is crucial in real-world scenarios where the number of speakers may change dynamically, such as meetings or group conversations.

The experimental results presented in the paper demonstrate the effectiveness and robustness of the proposed techniques. The framework achieves competitive performance on various datasets, even in situations with severely degraded video quality. These results highlight the potential of leveraging both audio and visual information for speaker diarization tasks.

In the wider field of multimedia information systems, this research contributes to the advancement of audio-visual processing techniques. By combining audio and visual cues, the proposed framework enhances the capabilities of multimedia systems, enabling more accurate and reliable speaker diarization. This has implications for various applications, including video surveillance, automatic transcription services, and virtual reality systems.

Furthermore, the concepts presented in this paper have connections to other related fields such as animations, artificial reality, augmented reality, and virtual realities. The use of audio-visual fusion and multi-modal information processing can be applied to enhance user experiences in these domains. For example, in virtual reality, accurate audio-visual synchronization and speaker separation can greatly enhance the immersion and realism of virtual environments, leading to more engaging experiences for users.

In conclusion, this paper introduces a quality-aware end-to-end audio-visual neural speaker diarization framework that leverages multi-modal information and addresses signal quality issues. The proposed techniques demonstrate robust performance in diverse acoustic environments, highlighting the potential of combining audio and visual cues for speaker diarization tasks. This research contributes to the wider field of multimedia information systems and has implications for various related domains, such as animations, artificial reality, augmented reality, and virtual realities.

Read the original article

by jsendak | Oct 31, 2024 | AI

arXiv:2410.22457v1 Announce Type: new

Abstract: Advancements in Large Language Models (LLMs) are revolutionizing the development of autonomous agentic systems by enabling dynamic, context-aware task decomposition and automated tool selection. These sophisticated systems possess significant automation potential across various industries, managing complex tasks, interacting with external systems to enhance knowledge, and executing actions independently. This paper presents three primary contributions to advance this field:

– Advanced Agentic Framework: A system that handles multi-hop queries, generates and executes task graphs, selects appropriate tools, and adapts to real-time changes.

– Novel Evaluation Metrics: Introduction of Node F1 Score, Structural Similarity Index (SSI), and Tool F1 Score to comprehensively assess agentic systems.

– Specialized Dataset: Development of an AsyncHow-based dataset for analyzing agent behavior across different task complexities.

Our findings reveal that asynchronous and dynamic task graph decomposition significantly enhances system responsiveness and scalability, particularly for complex, multi-step tasks. Detailed analysis shows that structural and node-level metrics are crucial for sequential tasks, while tool-related metrics are more important for parallel tasks. Specifically, the Structural Similarity Index (SSI) is the most significant predictor of performance in sequential tasks, and the Tool F1 Score is essential for parallel tasks. These insights highlight the need for balanced evaluation methods that capture both structural and operational dimensions of agentic systems. Additionally, our evaluation framework, validated through empirical analysis and statistical testing, provides valuable insights for improving the adaptability and reliability of agentic systems in dynamic environments.

Advancements in Large Language Models (LLMs) and the Future of Autonomous Agentic Systems

Recent advancements in Large Language Models (LLMs) are transforming the development of autonomous agentic systems. These systems have the potential to revolutionize various industries by managing complex tasks, interacting with external systems, and executing actions independently. The capabilities of LLMs enable these systems to dynamically adapt to real-time changes and make context-aware decisions.

Advanced Agentic Framework

The first contribution presented in this paper is an advanced agentic framework. This framework is designed to handle multi-hop queries, generate and execute task graphs, select appropriate tools, and adapt to real-time changes. The ability to decompose complex tasks into smaller subtasks and select the most suitable tools is crucial for efficient and effective system performance. This framework sets the foundation for building highly sophisticated and responsive agentic systems.

Novel Evaluation Metrics

In order to comprehensively assess the performance of agentic systems, the paper introduces three novel evaluation metrics: Node F1 Score, Structural Similarity Index (SSI), and Tool F1 Score. These metrics go beyond traditional evaluation measures and capture both the structural and operational dimensions of agentic systems. The Node F1 Score and SSI are particularly important for sequential tasks, while the Tool F1 Score is crucial for parallel tasks. By measuring these metrics, the evaluation framework provides a balanced assessment of system performance.

Specialized Dataset

The paper also contributes by developing a specialized dataset called AsyncHow. This dataset is designed for analyzing agent behavior across different levels of task complexity. By using this dataset, researchers can gain insights into the adaptability and reliability of agentic systems in dynamic environments.

Analysis and Expert Insights

The multi-disciplinary nature of the concepts presented in this paper is noteworthy. The advancements in Large Language Models (LLMs) integrate natural language processing, machine learning, and artificial intelligence to enable autonomous agentic systems. These systems combine knowledge representation, task decomposition, tool selection, and real-time adaptability, which require expertise in areas such as computer science, cognitive science, and information systems.

The findings of this research highlight the importance of asynchronous and dynamic task graph decomposition for system responsiveness and scalability, especially for complex, multi-step tasks. This insight has ramifications across various industries, where the ability to efficiently manage complex tasks can lead to significant time and cost savings.

Another crucial finding is the significance of different evaluation metrics for sequential and parallel tasks. The Structural Similarity Index (SSI) proves to be the most influential predictor of performance in sequential tasks, suggesting the importance of maintaining structural coherence and order. On the other hand, the Tool F1 Score emerges as a key metric for parallel tasks, emphasizing the need for precise tool selection and execution.

The paper’s evaluation framework provides valuable insights for improving the adaptability and reliability of agentic systems in dynamic environments. The empirical analysis and statistical testing conducted to validate the framework enhance its credibility and applicability. Researchers and practitioners can leverage these insights to enhance the performance and efficiency of autonomous agentic systems.

Overall, the advancements in Large Language Models and the contributions presented in this paper open up new horizons for the development and utilization of autonomous agentic systems. The combination of advanced frameworks, novel evaluation metrics, and specialized datasets contribute to the advancement of the field and pave the way for future innovations in automation across various domains.

Read the original article