by jsendak | Mar 30, 2025 | AI News

Potential Future Trends in [Industry]

In recent years, the [industry] has experienced significant advancements and shifts in technology, consumer behavior, and market dynamics. These changes have paved the way for a number of potential future trends that could shape the industry in the coming years. In this article, we will explore some of these key trends and provide our own unique predictions and recommendations for the industry.

1. Embracing Artificial Intelligence (AI)

Artificial Intelligence (AI) has already permeated various industries, and the [industry] is no exception. From automating repetitive tasks to enhancing customer service through chatbots, AI has the potential to revolutionize the way [industry] operates. In the future, we can expect to see AI being integrated into various aspects of the industry, such as predictive analytics for demand forecasting, personalized recommendation systems, and even autonomous vehicles for product delivery.

Prediction: By 2025, AI will be a crucial component of every [industry] company’s operations, leading to increased efficiency, cost savings, and improved customer experiences.

2. Sustainability and Eco-Friendly Practices

As concerns about climate change and environmental sustainability continue to grow, the [industry] will be under increasing pressure to adopt more sustainable and eco-friendly practices. Consumers are becoming more conscious of their purchasing decisions, and they expect companies to operate in an environmentally responsible manner. We foresee a future where environmentally friendly packaging, renewable energy sources, and responsible waste management will become standard practices in the industry.

Prediction: By 2030, sustainability will be a non-negotiable factor for consumer purchasing decisions, prompting [industry] companies to prioritize eco-friendly practices throughout their entire supply chains.

3. Rise of E-commerce

The rise of e-commerce has already transformed the retail sector, and it is poised to have a similar impact on the [industry]. With the increasing convenience and accessibility of online shopping, consumers are now more inclined to purchase [industry] products online. This shift towards e-commerce will require [industry] companies to develop robust online platforms, invest in logistics and fulfillment capabilities, and provide a seamless online shopping experience.

Prediction: By 2023, e-commerce will account for more than 30% of total [industry] sales, driving [industry] companies to adapt their business models and invest in digital infrastructure.

4. Personalization and Customization

In an era of increasing personalization, consumers now expect tailored experiences and products. This trend is likely to extend to the [industry], where consumers will demand more personalized and customizable options. Companies that can offer personalized recommendations, customizable products, and unique shopping experiences will have a competitive edge in the future.

Prediction: By 2025, customization will become a standard offering in the [industry], with companies leveraging technologies such as 3D printing to provide personalized products to consumers.

Conclusion

The [industry] is poised to undergo significant transformations in the coming years as technology continues to advance and consumer expectations evolve. The integration of AI, adoption of sustainable practices, rise of e-commerce, and emphasis on personalization are just a few of the potential future trends that will shape the industry. [Industry] companies should embrace these trends as opportunities for growth and innovation, ensuring they stay ahead of the curve and meet the evolving needs of their customers.

by jsendak | Mar 29, 2025 | Art

Preface:

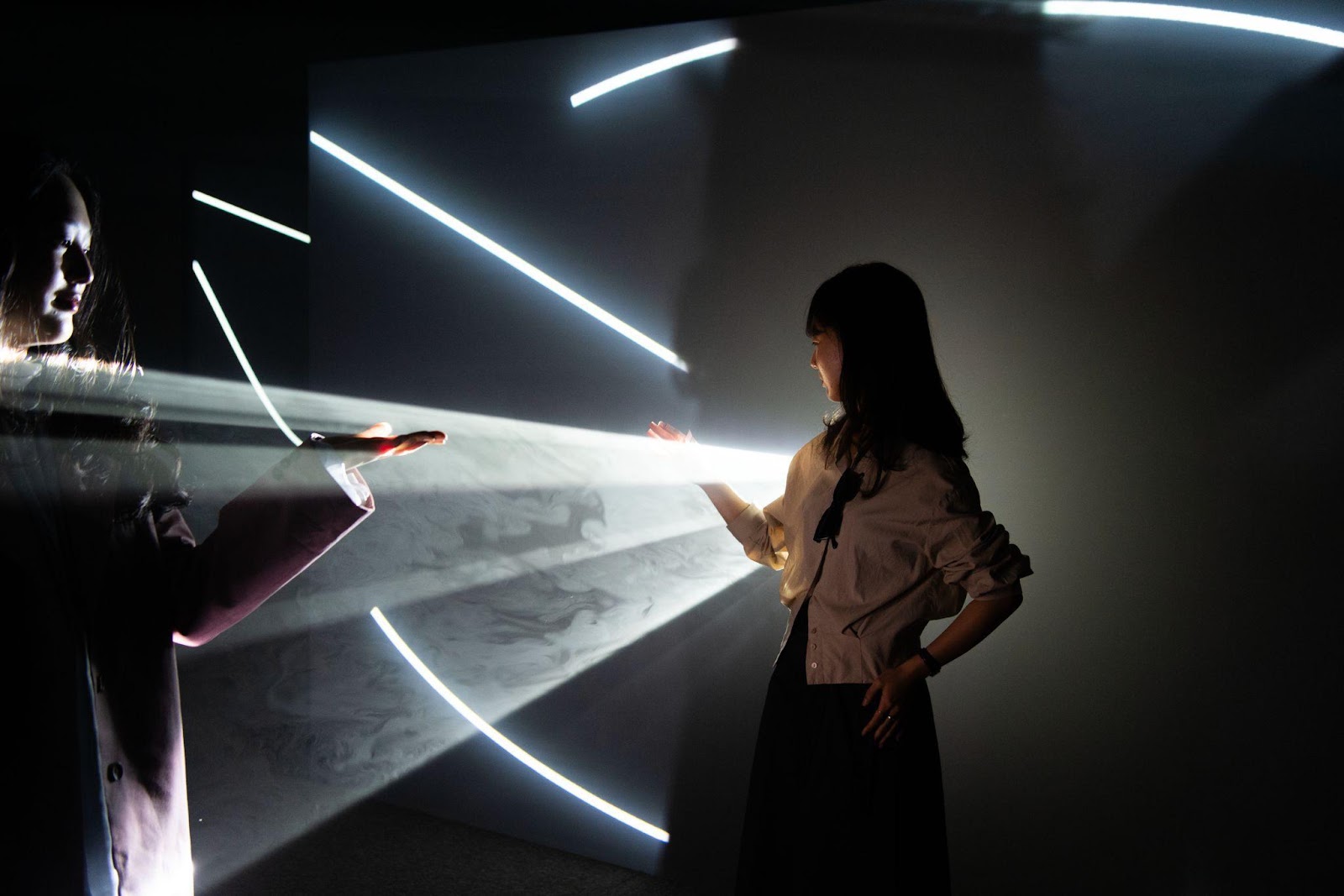

Lights, rights, drawing, landscapes, and a rhino – these seemingly disparate elements are interconnected by a common thread, weaving a tapestry that speaks to the very essence of our existence. As we navigate through the corridors of time, embarking on this journey, we find ourselves exploring the delicate balance between human progress and the preservation of the natural world.

In the realm of art and aesthetics, light has always held a special place. It illuminates masterpieces, casting shadows that evoke emotions and prompt contemplation. From the divine glow of the Renaissance to the experimental use of light in modern installations, artists harness this ephemeral power to communicate profound truths about our shared humanity.

Equally essential are rights, the fundamental principles that have shaped the course of history. Through setbacks and triumphs, the collective pursuit of justice has given birth to revolutionary ideas and paved the way for a more equitable society. From the Magna Carta to the Universal Declaration of Human Rights, that pursuit continues to resonate in today's world.

When we turn our gaze towards the realm of drawing, we find the manifestation of human imagination and ingenuity. Drawing allows us to capture the fleeting moments of life and reflect upon the beauty and complexity of our surroundings. This ancient art form has evolved alongside humanity, serving as a visual language that transcends cultural barriers and speaks directly to our souls.

The vast landscapes that envelop our planet have long captivated our imagination – from the breathtaking majesty of the Grand Canyon to the serene beauty of the Sahara desert. In the face of urbanization and environmental challenges, these natural wonders remind us of the importance of preserving our planet for future generations.

Finally, the rhino – an emblem of a fragile ecosystem under threat. This majestic creature serves as a symbol of the urgent need to protect our biodiversity. Across continents, efforts are underway to combat habitat loss and poaching, recognizing that the extinction of one species heralds a dangerous ripple effect on our fragile planet.

Within the following article, we embark on a voyage that connects these diverse elements, unraveling the hidden ties that bind our past, present, and future. Through historical anecdotes, contemporary references, and expert insights, we illuminate the intricate tapestry of human progress and the preservation of our natural world – recognizing that the harmony of lights, rights, drawing, landscapes, and the rhino is integral to our shared journey.

Lights, rights, drawing, landscapes and a rhino.

Read the original article

by jsendak | Mar 29, 2025 | DS Articles

[This article was first published on R Works, and kindly contributed to R-bloggers.]

In February, one hundred fifty-nine new packages made it to CRAN. Here are my Top 40 picks in fifteen categories: Artificial Intelligence, Computational Methods, Ecology, Genomics, Health Sciences, Mathematics, Machine Learning, Medicine, Music, Pharma, Statistics, Time Series, Utilities, Visualization, and Weather.

Artificial Intelligence

chores v0.1.0: Provides a collection of ergonomic large language model assistants designed to help you complete repetitive, hard-to-automate tasks quickly. After selecting some code, press the keyboard shortcut you’ve chosen to trigger the package app, select an assistant, and watch your chore be carried out. Users can create custom helpers just by writing some instructions in a markdown file. There are three vignettes: Getting started, Custom helpers, and Gallery.

gander v0.1.0: Provides a Copilot completion experience that knows how to talk to the objects in your R environment. ellmer chats are integrated directly into your RStudio and Positron sessions, automatically incorporating relevant context from surrounding lines of code and your global environment. See the vignette to get started.

GitAI v0.1.0: Provides functions to scan multiple Git repositories, pull content from specified files, and process it with LLMs. You can summarize the content, extract information and data, or find answers to your questions about the repositories. The output can be stored in a vector database and used for semantic search or as a part of a RAG (Retrieval Augmented Generation) prompt. See the vignette.

Computational Methods

nlpembeds v1.0.0: Provides efficient methods to compute co-occurrence matrices, pointwise mutual information (PMI), and singular value decomposition (SVD), especially useful when working with huge databases in biomedical and clinical settings. Functions can be called on SQL databases, enabling the computation of co-occurrence matrices of tens of gigabytes of data, representing millions of patients over tens of years. See Hong (2021) for background and the vignette for examples.
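To make the PMI step concrete, here is a minimal Python sketch. It is not nlpembeds' R API, which works directly against SQL databases. The sketch builds symmetric co-occurrence counts from a few hypothetical patient records and converts them to PMI. The records and code names are made up, and the SVD step that would turn the PMI matrix into embeddings is omitted.

```python
import math
from collections import Counter
from itertools import permutations


def cooccurrence(records):
    """Symmetric co-occurrence counts: each record adds 1 to both (a, b) and (b, a)."""
    counts = Counter()
    for codes in records:
        for a, b in permutations(sorted(set(codes)), 2):
            counts[(a, b)] += 1
    return counts


def pmi(counts):
    """Pointwise mutual information log(p(a, b) / (p(a) * p(b))) for every observed pair."""
    total = sum(counts.values())
    row_sums = Counter()
    for (a, _), n in counts.items():
        row_sums[a] += n
    # p(a, b) = n / total and p(a) = row_sums[a] / total, so the ratio
    # simplifies to n * total / (row_sums[a] * row_sums[b])
    return {
        (a, b): math.log(n * total / (row_sums[a] * row_sums[b]))
        for (a, b), n in counts.items()
    }


# Hypothetical patient records, each a list of clinical codes
records = [["A", "B"], ["A", "B"], ["A", "C"], ["B", "C"]]
scores = pmi(cooccurrence(records))
```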

NLPwavelet v1.0: Provides functions for Bayesian wavelet analysis using individual non-local priors as described in Sanyal & Ferreira (2017) and non-local prior mixtures as described in Sanyal (2025). See README to get started.

pnd v0.0.9: Provides functions to compute numerical derivatives including gradients, Jacobians, and Hessians through finite-difference approximations with parallel capabilities and optimal step-size selection to improve accuracy. Advanced features include computing derivatives of arbitrary order. There are three vignettes on the topics: Compatibility with numDeriv, Parallel numerical derivatives, and Step-size selection.
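As a rough illustration of finite-difference gradients with a step-size rule, the following Python sketch uses central differences and the common rule of thumb h ≈ ε^(1/3)·max(1, |x|). This is a generic textbook choice, not pnd's step-size selection algorithm, and the function name is hypothetical.

```python
import math
import sys


def central_gradient(f, x):
    """Approximate the gradient of f (list[float] -> float) at x using central differences."""
    eps = sys.float_info.epsilon
    grad = []
    for i, xi in enumerate(x):
        # Rule-of-thumb step balancing truncation error O(h^2) against rounding error O(eps / h)
        h = eps ** (1 / 3) * max(1.0, abs(xi))
        up, down = list(x), list(x)
        up[i], down[i] = xi + h, xi - h
        # Divide by the step that is actually representable in floating point
        grad.append((f(up) - f(down)) / (up[i] - down[i]))
    return grad


def f(v):
    return v[0] ** 2 * v[1] + math.sin(v[1])


g = central_gradient(f, [1.0, 2.0])  # exact gradient: [4, 1 + cos(2)]
```

Each coordinate's pair of function evaluations is independent of the others, which is what makes this kind of computation easy to spread across parallel workers.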

rmcmc v0.1.1: Provides functions to simulate Markov chains using the proposal from Livingstone and Zanella (2022) to compute MCMC estimates of expectations with respect to a target distribution on a real-valued vector space. The package also provides implementations of alternative proposal distributions, such as (Gaussian) random walk and Langevin proposals. Optionally, the target distribution can be specified through BridgeStan’s R interface. There is an Introduction to the Barker proposal and a vignette on Adjusting the noise distribution.
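The Barker proposal itself is short enough to sketch. The minimal Python version below is an illustration, not rmcmc's interface. Each coordinate draws a Gaussian increment z and keeps its sign with probability 1/(1 + exp(-z·∂ᵢ log π(x))), so moves lean uphill. The Metropolis-Hastings step then corrects for the asymmetric proposal. The step size and the standard-normal example target are assumptions.

```python
import math
import random


def _log1pexp(a):
    """Numerically stable log(1 + exp(a))."""
    return a + math.log1p(math.exp(-a)) if a > 0 else math.log1p(math.exp(a))


def _sigmoid(t):
    """Numerically stable logistic function 1 / (1 + exp(-t))."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def barker_mcmc(log_target, grad_log_target, x0, n_iter, step=1.0, seed=0):
    """Metropolis-Hastings with a Barker-style proposal, returning every state visited."""
    rng = random.Random(seed)
    x = list(x0)
    lp, g = log_target(x), grad_log_target(x)
    samples = []
    for _ in range(n_iter):
        # Per coordinate: draw a symmetric increment z, then keep or flip its sign
        # with a gradient-dependent probability so moves tend to go uphill
        y = []
        for xi, gi in zip(x, g):
            z = rng.gauss(0.0, step)
            y.append(xi + z if rng.random() < _sigmoid(z * gi) else xi - z)
        lp_y, g_y = log_target(y), grad_log_target(y)
        # Log acceptance ratio: target ratio plus the correction log q(y -> x) - log q(x -> y)
        log_ratio = lp_y - lp
        for xi, yi, gxi, gyi in zip(x, y, g, g_y):
            log_ratio += _log1pexp((xi - yi) * gxi) - _log1pexp((yi - xi) * gyi)
        if rng.random() < math.exp(min(0.0, log_ratio)):
            x, lp, g = y, lp_y, g_y
        samples.append(list(x))
    return samples


# Example: sample a standard normal, log pi(x) = -x^2 / 2, starting away from the mode
draws = barker_mcmc(lambda v: -0.5 * v[0] ** 2, lambda v: [-v[0]], [3.0], 20000, step=1.5, seed=42)
```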

sgdGMF v1.0: Implements a framework to estimate high-dimensional, generalized matrix factorization models using penalized maximum likelihood under a dispersion exponential family specification, including the stochastic gradient descent algorithm with a block-wise mini-batch strategy and an efficient adaptive learning rate schedule to stabilize convergence. All the theoretical details can be found in Castiglione et al. (2024). Also included are the alternated iterative re-weighted least squares and the quasi-Newton method with diagonal approximation of the Fisher information matrix discussed in Kidzinski et al. (2022). There are four vignettes, including introduction and residuals.

Data

acledR v0.1.0: Provides tools for working with data from ACLED (Armed Conflict Location and Event Data). Functions include simplified access to ACLED’s API, methods for keeping local versions of ACLED data up-to-date, and functions for common ACLED data transformations. See the vignette to get started.

Horsekicks v1.0.2: Provides extensions to the classical dataset Death by the kick of a horse in the Prussian Army, first used by Ladislaus von Bortkiewicz in his treatise on the Poisson distribution, Das Gesetz der kleinen Zahlen. Also included are deaths by falling from a horse and by drowning. See the vignette.
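The classic use of these data is a Poisson fit. The Python sketch below uses the frequently reproduced 200 corps-year summary of von Bortkiewicz's counts: 109, 65, 22, 3, and 1 corps-years with 0 to 4 deaths. The Horsekicks package's extended tables may be organized differently, and the function name is hypothetical.

```python
import math

# Frequently reproduced summary of von Bortkiewicz's data:
# number of corps-years in which k soldiers died from horse kicks
observed = {0: 109, 1: 65, 2: 22, 3: 3, 4: 1}


def poisson_fit(freq):
    """Fit a Poisson rate by maximum likelihood and return expected frequencies."""
    n = sum(freq.values())
    lam = sum(k * count for k, count in freq.items()) / n  # the MLE is the sample mean
    expected = {k: n * math.exp(-lam) * lam ** k / math.factorial(k) for k in freq}
    return lam, expected


lam, expected = poisson_fit(observed)
for k in observed:
    print(f"{k} deaths: observed {observed[k]:>3}, expected {expected[k]:6.1f}")
```

The rate comes out at 0.61 deaths per corps-year, and the expected counts (about 108.7, 66.3, 20.2, 4.1, and 0.6) track the observed ones closely.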

OhdsiReportGenerator v1.0.1: Extracts results from the Observational Health Data Sciences and Informatics result database and generates Quarto reports and presentations. See the package guide.

wbwdi v1.0.0: Provides functions to access and analyze the World Bank’s World Development Indicators (WDI) using the corresponding API. WDI provides more than 24,000 country or region-level indicators for various contexts. See the vignette.

Ecology

rangr v1.0.6: Implements a mechanistic virtual species simulator that integrates population dynamics and dispersal to study the effects of environmental change on population growth and range shifts. Look here for background and see the vignette to get started.

Economics

godley v0.2.2: Provides tools to define, simulate, and validate stock-flow consistent (SFC) macroeconomic models by specifying governing systems of equations. Users can analyze how macroeconomic structures affect key variables, perform sensitivity analyses, introduce policy shocks, and visualize resulting economic scenarios. See Godley and Lavoie (2007), Kinsella and O’Shea (2010) for background and the vignette to get started.
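For a feel of what an SFC model looks like, here is a Python sketch of the simplest model in Godley and Lavoie (2007), usually called SIM. It has government spending G, a flat tax rate θ, and households that consume out of disposable income and past money holdings. The parameter values are illustrative assumptions, and this is not godley's R interface.

```python
def simulate_sim(periods, g=20.0, theta=0.2, alpha1=0.6, alpha2=0.4, h0=0.0):
    """Simulate the SIM stock-flow consistent model; returns one dict per period."""
    h_prev = h0
    path = []
    for _ in range(periods):
        # Solve the simultaneous equations Y = C + G, YD = (1 - theta) * Y,
        # C = alpha1 * YD + alpha2 * H_{t-1} for Y in closed form
        y = (g + alpha2 * h_prev) / (1 - alpha1 * (1 - theta))
        t = theta * y
        yd = y - t
        c = alpha1 * yd + alpha2 * h_prev
        h = h_prev + yd - c  # household money holdings absorb unspent income
        path.append({"Y": y, "T": t, "YD": yd, "C": c, "H": h, "H_prev": h_prev})
        h_prev = h
    return path


path = simulate_sim(200)
```

With these values, output converges to G/θ = 100. In every period the change in household money equals the government deficit G - T, which is the stock-flow consistency the model enforces.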

Health Sciences

matriz v1.0.1: Implements a workflow that provides tools to create, update, and fill literature matrices commonly used in research, specifically epidemiology and health sciences research. See README to get started.

Mathematics

flint v0.0.3: Provides an interface to FLINT, a C library for number theory that extends GNU MPFR and GNU MP with support for arithmetic in standard rings (the integers, the integers modulo n, and the rational, p-adic, real, and complex numbers) as well as vectors, matrices, polynomials, and power series over rings. FLINT also implements midpoint-radius interval arithmetic in the real and complex numbers. See Johansson (2017) for information on computation in arbitrary precision with rigorous propagation of errors, and see the NIST Digital Library of Mathematical Functions for information on additional capabilities. Look here to get started.
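Midpoint-radius (ball) arithmetic is easy to sketch. The toy Python class below propagates a midpoint and an error radius through addition and multiplication. Unlike FLINT, it ignores the floating-point rounding of the midpoint itself, so it only illustrates the idea and is not rigorous.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Ball:
    """A real number known to lie in [mid - rad, mid + rad] (rounding errors ignored)."""
    mid: float
    rad: float

    def __add__(self, other):
        # Radii add: |(x + y) - (m1 + m2)| <= r1 + r2
        return Ball(self.mid + other.mid, self.rad + other.rad)

    def __mul__(self, other):
        # |xy - m1*m2| <= |m1|*r2 + |m2|*r1 + r1*r2
        rad = abs(self.mid) * other.rad + abs(other.mid) * self.rad + self.rad * other.rad
        return Ball(self.mid * other.mid, rad)

    def contains(self, x):
        return abs(x - self.mid) <= self.rad


a = Ball(1.0, 0.1)   # some x in [0.9, 1.1]
b = Ball(2.0, 0.05)  # some y in [1.95, 2.05]
product = a * b
```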

Machine Learning

tall v0.1.1: Implements a general-purpose tool for analyzing textual data as a shiny application with features that include a comprehensive workflow, data cleaning, preprocessing, statistical analysis, and visualization. See the vignette.

Medicine

BayesERtools v0.2.1: Provides tools that facilitate exposure-response analysis using Bayesian methods. These include a streamlined workflow for fitting types of models that are commonly used in exposure-response analysis – linear and Emax for continuous endpoints, logistic linear and logistic Emax for binary endpoints, as well as performing simulation and visualization. Look here to learn more about the workflow, and see the vignette for an overview.
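The Emax model mentioned above has a simple closed form. The Python sketch below writes the (sigmoid) Emax curve and its logistic-link version for binary endpoints. The parameter names and the Hill exponent are illustrative and do not reflect the BayesERtools interface, which fits these models with Bayesian methods.

```python
import math


def emax_response(conc, e0, emax, ec50, hill=1.0):
    """Sigmoid Emax model: E = E0 + Emax * C^h / (EC50^h + C^h); hill=1 gives the basic Emax model."""
    return e0 + emax * conc ** hill / (ec50 ** hill + conc ** hill)


def logistic_emax_probability(conc, e0, emax, ec50, hill=1.0):
    """Binary endpoint: pass the Emax predictor through the logistic link."""
    return 1.0 / (1.0 + math.exp(-emax_response(conc, e0, emax, ec50, hill)))
```

At a concentration equal to EC50, the basic model sits exactly halfway between E0 and E0 + Emax.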

PatientLevelPrediction v6.4.0: Implements a framework to create patient-level prediction models using the Observational Medical Outcomes Partnership Common Data Model. Given a cohort of interest and an outcome of interest, the package can use data in the Common Data Model to build a large set of features, which can then be used to fit a predictive model with a number of machine learning algorithms. This is further described in Reps et al. (2017). There are fourteen vignettes, including Building Patient Level Prediction Models and Best Practices.

SimTOST v1.0.2: Implements a Monte Carlo simulation approach to estimating sample sizes, power, and type I error rates for bioequivalence trials that are based on the Two One-Sided Tests (TOST) procedure. Users can model complex trial scenarios, including parallel and crossover designs, intra-subject variability, and different equivalence margins. See Schuirmann (1987), Mielke et al. (2018), and Shieh (2022) for background. There are seven vignettes including Introduction and Bioequivalence Tests for Parallel Trial Designs: 2 Arms, 1 Endpoint.
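A stripped-down Monte Carlo version of this idea for a two-arm parallel design might look like the Python sketch below. It assumes normally distributed outcomes on the log scale. It uses a normal approximation where a real TOST analysis would use the t distribution. It also takes the common 0.80 to 1.25 bioequivalence limits (±log 1.25) as the margin. This is not SimTOST's API.

```python
import math
import random
import statistics


def tost_power(n_per_arm, true_diff, sd, margin, alpha=0.05, n_sim=2000, seed=1):
    """Estimate P(TOST concludes equivalence) by simulating parallel two-arm trials."""
    if n_per_arm < 2:
        raise ValueError("need at least two subjects per arm")
    rng = random.Random(seed)
    z_crit = statistics.NormalDist().inv_cdf(1 - alpha)  # normal approximation to the t quantile
    successes = 0
    for _ in range(n_sim):
        test = [rng.gauss(true_diff, sd) for _ in range(n_per_arm)]
        ref = [rng.gauss(0.0, sd) for _ in range(n_per_arm)]
        diff = statistics.fmean(test) - statistics.fmean(ref)
        se = math.sqrt((statistics.variance(test) + statistics.variance(ref)) / n_per_arm)
        # Equivalence requires rejecting both H0: diff <= -margin and H0: diff >= +margin
        if (diff + margin) / se > z_crit and (margin - diff) / se > z_crit:
            successes += 1
    return successes / n_sim


power = tost_power(50, 0.0, 0.2, math.log(1.25))
```

Setting the true difference exactly at the margin turns the estimated power into an estimate of the type I error rate, which should sit near α.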

Music

musicXML v1.0.1: Implements tools to facilitate data sonification and create files to share music notation in the musicXML format. Several classes are defined for basic musical objects such as note pitch, note duration, note, measure, and score. Sonification functions map data into musical attributes such as pitch, loudness, or duration. See the blog and Renard and Le Bescond (2022) for examples and the vignette to get started.
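As a minimal illustration of data sonification, the Python sketch below maps a numeric series linearly onto a MIDI pitch range and names the resulting notes. It does not use the musicXML classes. The default range C3 to C6 (MIDI 48 to 84) and the data series are arbitrary assumptions.

```python
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def to_midi_pitches(values, low=48, high=84):
    """Linearly rescale data values onto integer MIDI pitches between low and high."""
    lo, hi = min(values), max(values)
    span = (hi - lo) or 1.0  # constant series: map everything to the lowest pitch
    return [round(low + (v - lo) / span * (high - low)) for v in values]


def midi_to_name(midi):
    """Name a MIDI pitch using the convention MIDI 60 = C4."""
    return f"{NOTE_NAMES[midi % 12]}{midi // 12 - 1}"


readings = [3.1, 4.7, 2.2, 5.9, 4.0]  # hypothetical data series
notes = [midi_to_name(p) for p in to_midi_pitches(readings)]
```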

Pharma

emcAdr v1.2: Provides computational methods for detecting adverse high-order drug interactions from individual case safety reports using statistical techniques, allowing the exploration of higher-order interactions among drug cocktails. See the vignette.

SynergyLMM v1.0.1: Implements a framework for evaluating drug combination effects in preclinical in vivo studies, which provides functions to analyze longitudinal tumor growth experiments using linear mixed-effects models, perform time-dependent analyses of synergy and antagonism, evaluate model diagnostics and performance, and assess both post-hoc and a priori statistical power. See Demidenko & Miller (2019) for the calculation of drug combination synergy and Pinheiro and Bates (2000) and Gałecki & Burzykowski (2013) for information on linear mixed-effects models. The vignette offers a tutorial.

vigicaen v0.15.6: Implements a toolbox to perform the analysis of the World Health Organization (WHO) Pharmacovigilance database, VigiBase, with functions to load data, perform data management, disproportionality analysis, and descriptive statistics. It is intended for routine pharmacovigilance use or for studies. There are eight vignettes, including basic workflow and routine pharmacovigilance.
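Disproportionality analysis often starts from a 2×2 table of reports. The Python sketch below computes one common measure, the reporting odds ratio with a Wald 95% confidence interval. The counts are hypothetical, and vigicaen's own functions and choice of measures may differ.

```python
import math


def reporting_odds_ratio(a, b, c, d, z=1.96):
    """Reporting odds ratio with a Wald confidence interval on the log scale.

    a: reports with the drug and the event     b: reports with the drug and other events
    c: reports with other drugs and the event  d: reports with other drugs and other events
    """
    if min(a, b, c, d) == 0:
        raise ValueError("all cells must be positive for this simple estimator")
    ror = (a * d) / (b * c)
    se_log = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    return ror, math.exp(math.log(ror) - z * se_log), math.exp(math.log(ror) + z * se_log)


ror, lower, upper = reporting_odds_ratio(20, 80, 100, 1800)  # hypothetical counts
```

Under one common convention, a drug-event pair is flagged for review when the lower bound exceeds 1, as it does for these made-up counts.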

Psychology

cogirt v1.0.0: Provides tools to psychometrically analyze latent individual differences related to tasks, interventions, or maturational/aging effects in the context of experimental or longitudinal cognitive research using methods first described by Thomas et al. (2020). See the vignette.

Statistics

DiscreteDLM v1.0.0: Provides tools for fitting Bayesian distributed lag models (DLMs) to count or binary, longitudinal response data. Count data are fit using negative binomial regression, and binary data are fit using quantile regression. Lag contribution is fit via b-splines. See Dempsey and Wyse (2025) for background and README for examples.

oneinfl v1.0.1: Provides functions to estimate one-inflated positive Poisson and one-inflated zero-truncated negative binomial regression models, positive Poisson models, and zero-truncated negative binomial models, along with marginal effects and their standard errors. The models and applications are described in Godwin (2024). See README for an example.
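To see what one-inflation means, the Python sketch below writes a one-inflated positive Poisson probability mass function as a simple mixture. With probability ω the count is exactly 1; otherwise it follows a zero-truncated Poisson. This generic parameterization is an assumption and may differ from the one in Godwin (2024) and oneinfl.

```python
import math


def positive_poisson_pmf(y, lam):
    """Zero-truncated Poisson: the Poisson pmf renormalized over y >= 1."""
    if y < 1:
        raise ValueError("positive Poisson is defined for y >= 1")
    return math.exp(-lam) * lam ** y / (math.factorial(y) * (1 - math.exp(-lam)))


def one_inflated_pmf(y, lam, omega):
    """Mixture: with probability omega the count is 1, otherwise positive Poisson."""
    base = (1 - omega) * positive_poisson_pmf(y, lam)
    return base + omega if y == 1 else base
```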

Time Series

BayesChange v2.0.0: Provides functions for change point detection on univariate and multivariate time series according to the methods presented by Martinez & Mena (2014) and Corradin et al. (2022), along with methods for clustering time-dependent data with common change points. See Corradin et al. (2024). There is a tutorial.

echos v1.0.3: Provides a lightweight implementation of functions and methods for fast and fully automatic time series modeling and forecasting using Echo State Networks. See the vignettes Base functions and Tidy functions.

quadVAR v0.1.2: Provides functions to estimate quadratic vector autoregression models with the strong hierarchy using the Regularization Algorithm under Marginality Principle of Hao et al. (2018) to compare the performance with linear models and construct networks with partial derivatives. See README for examples.

Utilities

aftables v1.0.2: Provides tools to generate spreadsheet publications that follow best practice guidance from the UK government’s Analysis Function. There are four vignettes, including an Introduction and Accessibility.

watcher v0.1.2: Implements an R binding for libfswatch, a file system monitoring library, that enables users to watch files or directories recursively for changes in the background. Log activity or run an R function every time a change event occurs. See the README for an example.

Visualization

jellyfisher v1.0.4: Generates interactive Jellyfish plots to visualize spatiotemporal tumor evolution by integrating sample and phylogenetic trees into a unified plot. This approach provides an intuitive way to analyze tumor heterogeneity and evolution over time and across anatomical locations. The Jellyfish plot visualization design was first introduced by Lahtinen et al. (2023). See the vignette.

xdvir v0.1-2: Provides high-level functions to render LaTeX fragments as labels and data symbols in ggplot2 plots, plus low-level functions to author, produce, and typeset LaTeX documents, and to produce, read, and render DVI files. See the vignette.

Weather

RFplus v1.4-0: Implements a machine learning algorithm that merges satellite and ground precipitation data using Random Forest for spatial prediction, residual modeling for bias correction, and quantile mapping for adjustment, ensuring accurate estimates across temporal scales and regions. See the vignette.
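The quantile-mapping step can be sketched on its own. The empirical version below, written in Python, maps each satellite value to the gauge value at the same empirical non-exceedance probability. The function name and toy data are hypothetical. RFplus combines this kind of adjustment with Random Forest prediction and residual modeling, which are not shown.

```python
import bisect
import math


def quantile_map(values, model_ref, obs_ref):
    """Empirical quantile mapping of values from the model (e.g. satellite) scale to the observed (gauge) scale."""
    model_sorted = sorted(model_ref)
    obs_sorted = sorted(obs_ref)
    mapped = []
    for v in values:
        # Empirical non-exceedance probability of v in the reference model sample
        p = bisect.bisect_right(model_sorted, v) / len(model_sorted)
        # Observed value at the same probability (lower empirical quantile), clamped to the sample
        idx = min(len(obs_sorted) - 1, max(0, math.ceil(p * len(obs_sorted)) - 1))
        mapped.append(obs_sorted[idx])
    return mapped
```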

SPIChanges v0.1.0: Provides methods to improve the interpretation of the Standardized Precipitation Index under changing climate conditions. It implements the nonstationary approach of Blain et al. (2022) to detect trends in rainfall quantities and quantify the effect of such trends on the probability of a drought event occurring. There is an Introduction and a vignette Monte Carlo Experiments and Case Studies.

Continue reading: February 2025 Top 40 New CRAN Packages

Analysis and Future Implications of February 2025 New CRAN Packages

Over the course of February 2025, 159 new packages made it to the Comprehensive R Archive Network (CRAN). Spanning fast-moving fields such as Artificial Intelligence, Machine Learning, and others, they represent another step toward a future powered by new data-analytics tools. But what does this mean for users of these packages, and what longer-term implications do they hold?

Artificial Intelligence-Based Packages

Artificial Intelligence has shown significant advancements recently. The newly released packages, such as chores v0.1.0, gander v0.1.0, and GitAI v0.1.0, showcase versatile features like language model assistants, Copilot completion experience, and functions to scan Git repositories. Considering the increasing importance of automating tasks and the capabilities these packages offer, they’re expected to gain more popularity.

Actionable Advice:

Artificial Intelligence is an ever-evolving field. Stay updated with the latest advancements like large language models and more efficient programming. Learning to use new packages like chores, gander, and GitAI could help improve efficiency in automating tasks.

Computational Methods-Based Packages

New tools like nlpembeds v1.0.0, NLPwavelet v1.0, and rmcmc v0.1.1 are notable additions to the Computational Methods category. They reflect the community’s focus on computational efficiency and modeling, even with very large datasets.

Actionable Advice:

Consider updating your skills to effectively handle large volumes of data and make sense of complex data sets using packages like nlpembeds and rmcmc.

Data Packages

New data packages, including acledR v0.1.0 and Horsekicks v1.0.2, provide the community with ready-made datasets and functions to handle specific types of data efficiently. They let researchers undertake complex studies while spending less time preprocessing large datasets.

Actionable Advice:

Stay updated with the latest data packages available on CRAN to improve the efficiency of your studies and to provide a robust framework for your research.

Machine Learning Packages

New packages such as tall v0.1.1 offer a user-friendly way to analyze textual data through an interactive shiny application. This points to a broader trend toward visual, interactive tools for applied machine learning and text analysis.

Actionable Advice:

As a data scientist or analyst, consider deploying machine learning tools like tall in your work. It would streamline the process of extracting insights from raw textual data.

Visualization Packages

Visualization tools like jellyfisher v1.0.4 and xdvir v0.1-2 provide intuitive ways to analyze and present data, which is a crucial aspect of data analysis.

Actionable Advice:

Should you be presenting complex data sets to an audience, consider using such visualization tools to simplify consumption and interpretation.

Long-term Implications and Future Developments

CRAN’s latest package releases suggest exciting developments in Artificial Intelligence, Computational Methods, Machine Learning, Data, and Visualization. Given how quickly these fields are growing, data professionals and researchers should expect even more sophisticated tools in the pipeline. That makes it all the more important to keep learning how to understand and deploy them.

Actionable Advice:

Continually learning and applying newly released packages should be a part of your long-term strategy. This will ensure you stay ahead in the data science world, leveraging the most effective and sophisticated tools at your disposal.

by jsendak | Mar 29, 2025 | DS Articles

Learn model serving, CI/CD, ML orchestration, model deployment, local AI, and Docker to streamline ML workflows, automate pipelines, and deploy scalable, portable AI solutions effectively.

Understanding and Streamlining Machine Learning Workflows

Machine Learning (ML) workflows are becoming more efficient and seamless thanks to advancements in tooling aimed at enhancing processes like model serving, Continuous Integration and Continuous Delivery (CI/CD), ML orchestration, model deployment, local AI and Docker. These tools are redefining how organizations across the globe handle their ML pipelines, making them more scalable and portable. As we delve into the future of Machine Learning, it is vital to understand how these factors will continue shaping the ML landscape.

The Future of Machine Learning Workflow Optimization

As Machine Learning workflows become more complex, the role of these key components in streamlining operations continues to increase. This indicates a future where ML workflows are not only more efficient but are also highly reliable, repeatable, scalable and standardized.

Model Serving, CI/CD and ML Orchestration

Going ahead, model serving, CI/CD, and ML orchestration could eventually be integrated into an all-in-one solution that manages ML models from development to deployment. Such a tool could support real-time updates that keep models continually optimized and functional. This implies leaner workflows and a shorter time to market for Machine Learning products.

Model Deployment, Local AI and Docker

Similarly, as organizations adopt local AI more widely, and with the continued growth of Docker, they might start to deploy pre-configured ML models locally, either as stand-alone solutions or on dockerized applications. This presents a potential shift from the traditional ways of approaching ML model deployment – making it easier for anyone, anywhere, to use AI.
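To make local model serving concrete, here is a minimal Python sketch that uses only the standard library. It puts a hypothetical linear "model" behind a /predict JSON endpoint. The weights, route, and port are assumptions. A real deployment would load a trained artefact and typically run behind a production server, but a small script like this is the kind of thing that gets packaged into a Docker image.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical "model": a fixed linear scorer standing in for a trained artefact
WEIGHTS = {"x1": 0.5, "x2": -1.25}
BIAS = 0.1


def predict(features):
    """Score a dict of numeric features; missing features default to 0."""
    return BIAS + sum(w * float(features.get(name, 0.0)) for name, w in WEIGHTS.items())


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/predict":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        try:
            payload = json.loads(self.rfile.read(length) or b"{}")
            body = json.dumps({"prediction": predict(payload)}).encode()
        except (ValueError, TypeError, AttributeError):
            self.send_error(400, "expected a JSON object of numeric features")
            return
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port=8000):
    """Blocking server; binding to 0.0.0.0 lets a container publish the port."""
    HTTPServer(("0.0.0.0", port), PredictHandler).serve_forever()
```

Calling serve() starts the endpoint. A client could then POST {"x1": 2, "x2": 1} to http://localhost:8000/predict and receive the prediction as JSON.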

Long-Term Implications

Such advancements present significant long-term implications for both ML practitioners and businesses. On one hand, ML practitioners will need to acquire new skills and competencies to adapt to the evolving landscape. For businesses, this shift means they could deploy ML models faster, at a reduced cost and with increased scalability and portability, thereby optimizing performance.

Actionable Advice

Organizations and individuals looking to take advantage of these advancements should consider the following:

- Invest In Training: ML practitioners should seek to enhance their skills around model serving, CI/CD, ML orchestration, and Docker. This will position them well to capitalize on these advancements.

- Embrace Local AI: Organizations should consider adopting local AI, since keeping data on local infrastructure avoids sending large volumes of it over the internet and offers more control over data security.

- Pay Attention to New Solutions: Keeping an eye out for the development and release of new management tools that combine components like CI/CD, ML Orchestration and Docker could provide significant workflow efficiencies.

Final Thoughts

Ultimately, the future of ML workflows lies in the ability to streamline and automate processes. As new technologies and methods continue to emerge, they promise a future where ML models are deployed faster, at reduced costs, and with greater scalability – transforming the operational efficiency and efficacy of machine learning applications and solutions across the globe.

by jsendak | Mar 29, 2025 | DS Articles

Biometric authentication systems are one of the most widespread and accessible forms of cyber hygiene in consumer products, and they’ve gone beyond phone face scanners to include more advanced technology. Data scientists are interested in the myriad ways biometrics can secure information privacy and enhance authentication systems. How is this scanning technology making digital environments… Read more: Data science is key to securing biometric authentication systems

Key Insights into Biometric Authentication Systems and Data Science

Biometric authentication systems are increasingly being incorporated into consumer products. These systems, which extend beyond simple face scanners on smartphones, rely on advanced technologies to offer a robust layer of protection. Given the significant role data science plays in securing these systems, it continues to be a fascinating subject of study and application for data scientists.

Long-term Implications of Biometric Authentication Systems

Biometric authentication systems mark a significant step forward for consumer privacy. Because biometric traits are harder to replicate or steal than traditional text-based passwords, this technology holds considerable potential for long-term data security.

However, these systems also present a range of potential challenges that remain largely unexplored. For instance, a data breach could expose a biometric trait, and unlike a password, a face or fingerprint cannot be reset, so the person effectively loses control over a crucial piece of their identity. Privacy concerns also arise around how biometric data is collected and stored.

Future Developments in Biometric Authentication Systems

In the face of these potential challenges, it’s reasonable to anticipate future technological developments and adaptations in this sphere.

For instance, it’s possible we will see the rise of multi-factor biometric authentication, which would offer enhanced security by requiring several biometric identifications rather than just one.
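Combining several biometric factors comes down to a fusion rule. The hypothetical Python sketch below contrasts two approaches. A strict AND rule requires every factor to match on its own, while weighted score-level fusion combines the scores first. The modality names, scores, weights, and thresholds are all illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class FactorResult:
    """Outcome of one biometric matcher (hypothetical structure)."""
    modality: str
    score: float      # similarity score, assumed normalized to [0, 1]
    threshold: float  # per-modality acceptance threshold


def and_rule(results):
    """Accept only if every biometric factor matches on its own."""
    return all(r.score >= r.threshold for r in results)


def weighted_score_fusion(results, weights, threshold):
    """Accept if the weighted average of the scores clears a single fused threshold."""
    total_weight = sum(weights[r.modality] for r in results)
    fused = sum(weights[r.modality] * r.score for r in results) / total_weight
    return fused >= threshold


attempt = [FactorResult("face", 0.90, 0.80), FactorResult("fingerprint", 0.70, 0.75)]
```

In this example the fingerprint alone falls short of its threshold, so the AND rule rejects. With weights of 0.6 for face and 0.4 for fingerprint, the weighted average (0.82) clears a fused threshold of 0.75. Which rule fits depends on how false accepts are traded against false rejects.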

Furthermore, privacy-supporting algorithms and tech could also be integrated into biometric authentication systems to ensure biometric data is securely stored and processed – this would go a long way in quelling consumer privacy fears.

Take Action based on these Insights

Given these future developments, there are several steps both consumers and businesses should consider:

- Stay informed: Keep abreast of the latest developments in biometric technology, as well as laws and regulations regarding data privacy and protection.

- Prioritize security: Invest in secure, modern technology that employs robust biometric authentication systems to protect sensitive data.

- Encourage transparency: Businesses should be open about their biometric data collection, storage, and use practices. Consumers should seek out this information.

- Prepare for potential breaches: Have contingency plans in place in case of data breaches involving biometric data.

In conclusion, while biometric authentication systems offer enhanced security, it’s vital to remain vigilant and proactive, constantly evolving with the technology’s advancements and associated challenges.
