EliseAI improves housing and healthcare efficiency with AI

A conversation with Minna Song, CEO & Co-founder of EliseAI.

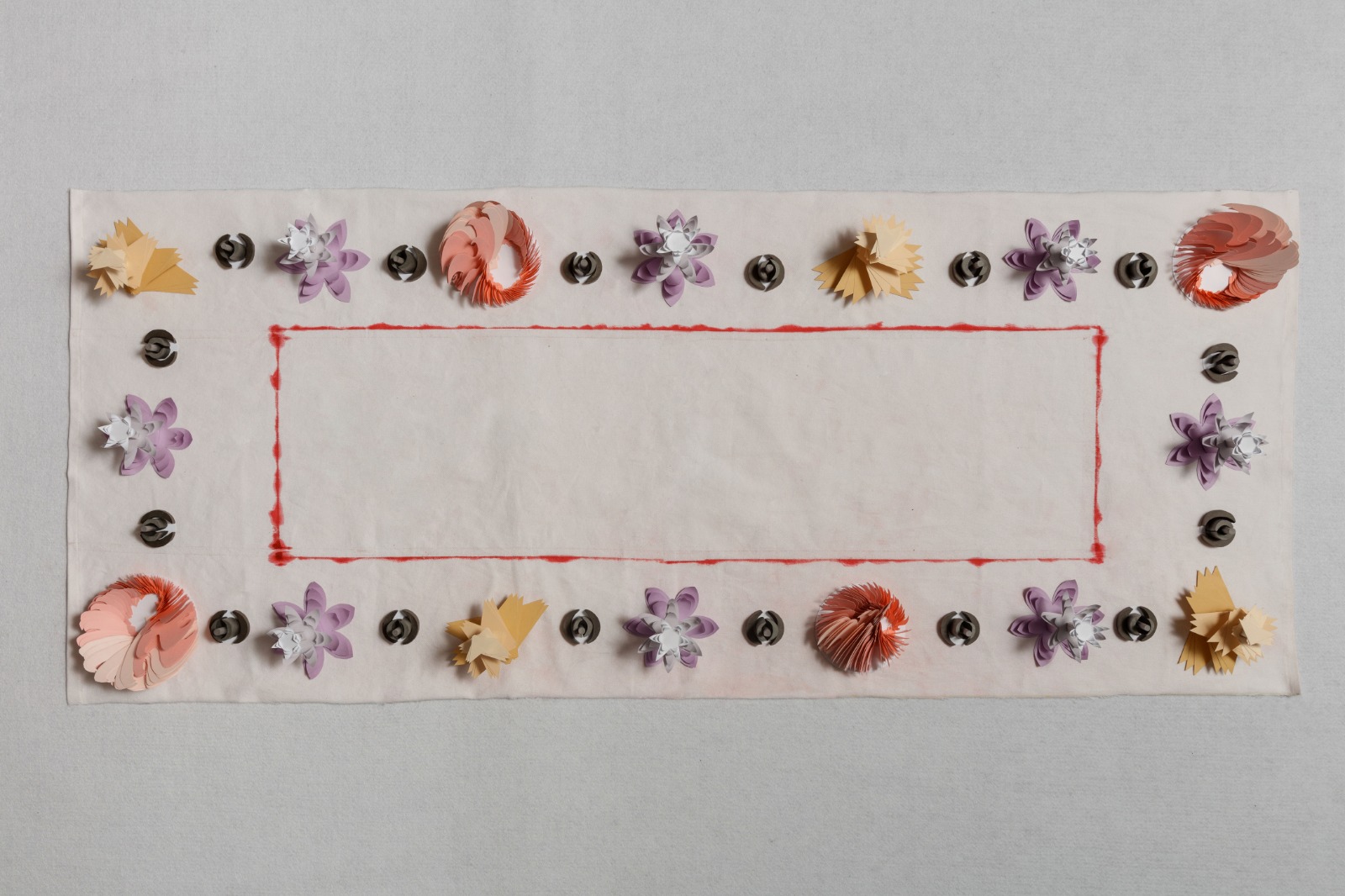

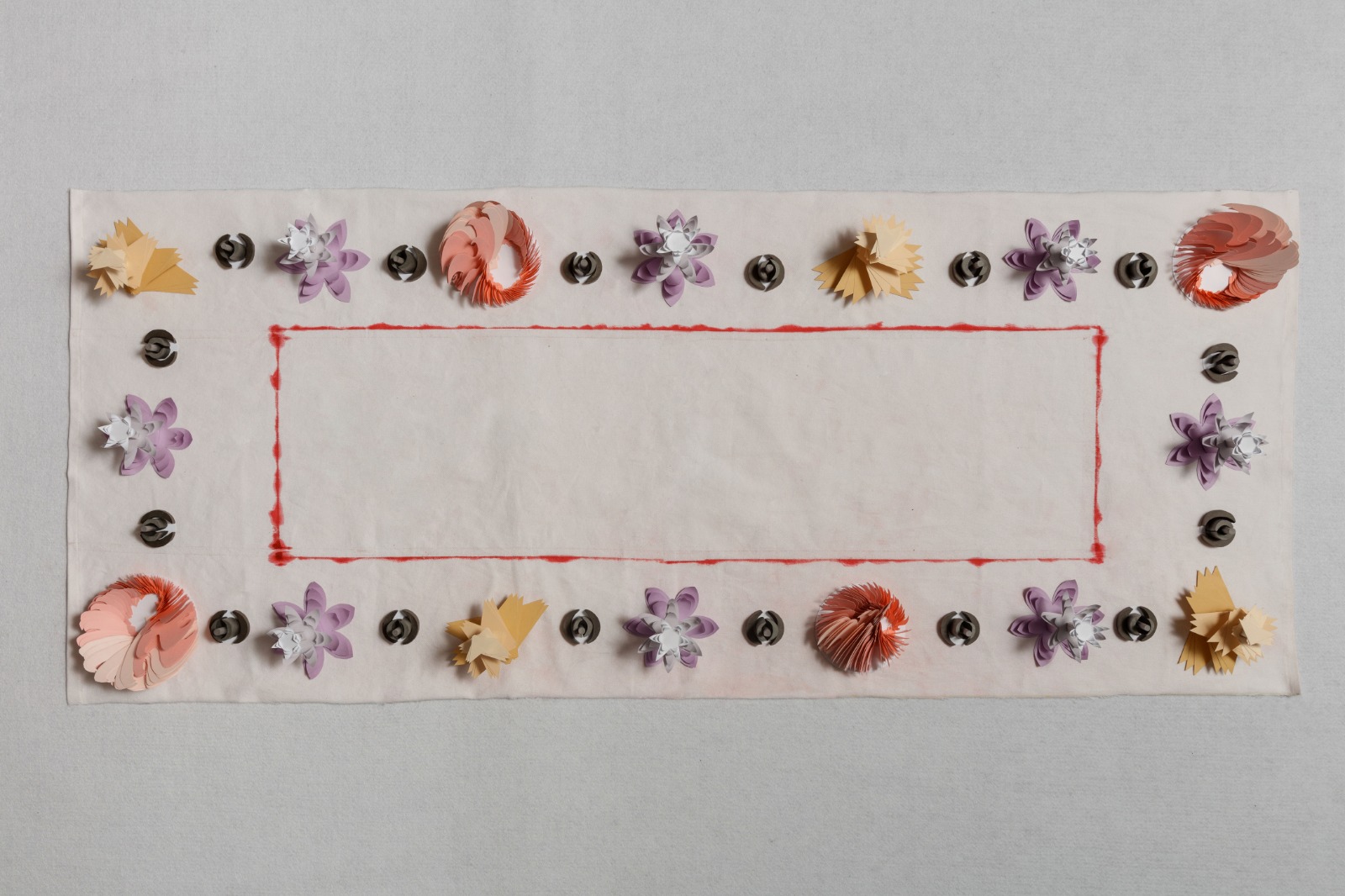

I had the opportunity to immerse myself in the extraordinary world of floral art. Walking through the halls and gazing at the intricate and breathtaking displays, I couldn’t help but be reminded of the timeless allure and symbolism that flowers have held throughout history.

From ancient civilizations to the modern day, flowers have captivated human hearts and minds. They have been used to celebrate joyous occasions, convey love, and even mourn the loss of loved ones. In fact, the language of flowers, also known as floriography, became especially popular during the Victorian era, allowing individuals to express emotions and sentiments through carefully chosen blooms.

As I admired the delicate paper flowers crafted by modern artists, I couldn’t help but think of the enduring relevance of this art form. In an increasingly digital age, where virtual interactions dominate our lives, the tangible and ephemeral beauty of paper flowers serves as a reminder of the natural world and our connection to it.

Just as artists throughout history have drawn inspiration from the flowers that surround them, the ‘Blooming on Paper’ exhibition showcases the creativity and skill of contemporary artists who continue to explore the beauty and versatility of this medium. Their creations, meticulously crafted and thoughtfully arranged, bring to life the vibrant hues and intricate details of various flower species.

In this exhibition, visitors are invited to witness the artistic evolution of floral representation. From traditional watercolor paintings to elaborate paper sculptures, the featured works showcase the richness and diversity of floral artistry. Each piece is a testament to the innate beauty of nature and the boundless creativity of human imagination.

‘Blooming on Paper’ is not just an exhibition; it is a celebration of timeless beauty, a tribute to the flowers that have inspired generations, and a testament to the enduring power of art. As you explore the galleries, let yourself be transported into a world where delicate petals, vibrant colors, and intricate patterns coalesce to form masterpieces that celebrate the wonders of nature and provoke introspection on the beauty that surrounds us.

Join me in embracing this fusion of art and nature, and allow yourself to be captivated by the ‘Blooming on Paper’ exhibition. Let it remind you of the beauty that can be found in the smallest of details and inspire you to cherish the ephemeral moments that make life magnificent.

In this tutorial we’ll look at how we can access LLM agents

through API calls. We’ll use this skill to create structured data from documents.

We’ll use the R ellmer package (launched 25th Feb 2025).

There are a few package options (I was also using

tidychatmodels before).

ellmer is a game changer for scientists using R: It supports tool use and has

functions for structured data.

Before ellmer you had to know other languages and data-structures, like JSON.

Ellmer means that many powerful LLM uses are now easily accessible to R users.

Tool use means the LLM can run commands on your computer to retrieve information.

You know how LLMs can be bad at simple math like 2+2 or today’s date?

Well, with a tool, the LLM would know to use R to calculate this to return

the correct answer. Tools can also connect to web APIs, which means they

can also be used to retrieve information or databases from the web.
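
As a minimal sketch of what a tool looks like on the R side, the function below returns the current date and time. The name `get_current_time` and its `tz` argument are hypothetical, chosen for illustration. In ellmer, a function like this is wrapped with `tool()` and attached to a chat with `chat$register_tool()`; the arguments of `tool()` have changed between ellmer versions, so check `?ellmer::tool` for your installed version rather than relying on this sketch.

```r
# Hypothetical tool: return the current date-time as a single string.
# An LLM cannot know today's date, but R can look it up.
get_current_time <- function(tz = "UTC") {
  format(Sys.time(), tz = tz, usetz = TRUE)
}

# Called directly, it behaves like any other R function.
get_current_time()
```

The point of the design is that the tool is ordinary R code: you can test it on its own before letting the model call it.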

The functions that assist you in creating structured data from text are also

important.

For instance, by combining tool use with structured data extraction, ellmer

could be used to synthesize literature for a quantitative review. We’ll cover that here.

Another application would be to interpret the meta-data from an online database,

download the data, write and run the analysis and then write up the findings.

Because you are running the LLM from R it means you can batch process prompts, such

as asking it to summarize many documents.

Those are a couple of obvious uses. I’m sure people will soon come up with

many more.

Let’s see how to use ellmer to extract structured data from peer reviewed studies.

First, you need to get an API key from the provider. Login to the provider’s website and

follow the instructions.

Then, you need to add the key to your .Renviron file:

usethis::edit_r_environ()

Then type in your key like this:

ANTHROPIC_API_KEY="xxxxxx"

Then restart R. ellmer will automatically find your key so long as you use the recommended environment variable names.

See ?ellmer::chat_claude (or chat_xxx where xxx is whatever provider you are using).
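
After restarting, it can be worth confirming that R can see the key without printing it to the console. This is a small sketch using base R only; the helper name `has_api_key` is hypothetical.

```r
# Check that an environment variable is set, without revealing its value.
has_api_key <- function(var = "ANTHROPIC_API_KEY") {
  nzchar(Sys.getenv(var))
}

if (!has_api_key()) {
  message("ANTHROPIC_API_KEY is not set; edit .Renviron and restart R.")
}
```

If you use a different provider, pass that provider's variable name as `var`.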

Posit recommends interactive chat (live_console() or live_browser()) as useful for experimenting with new prompts. It can also be better

than the web interfaces you might be used to. This is because, unlike on the web interfaces,

you can control the system message.

Most models have three types of messages:

system, which sets the context

user, which is what you type

assistant, which is the response

Many prompt engineering pros have found better results if you put as much of your request in the

system message as possible. So I recommend you play around with this yourself.
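
The three roles can be pictured as a list of tagged messages. The sketch below is purely illustrative: it is plain R, not ellmer's internal representation, and some providers pass the system message as a separate field rather than as an entry in the message list.

```r
# Illustrative only: a conversation as a list of role-tagged messages.
conversation <- list(
  list(role = "system", content = "You are a friendly but terse assistant."),
  list(role = "user", content = "How can I do a glm in R?"),
  list(role = "assistant", content = "Use glm(y ~ x, family = binomial, data = dat).")
)

# Pull out the role of each message, in order.
roles <- vapply(conversation, function(m) m$role, character(1))
roles
```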

library(ellmer)

chat <- chat_claude(
  system_prompt = "You are a friendly but terse assistant.",
  model = "claude-3-5-haiku-20241022",
  max_tokens = 1000
)

live_console(chat)
live_browser(chat)

Try this, but experiment with new system prompts. Here’s an example; try your own.

chat <- chat_claude(
  system_prompt = "You are a mystic with expertise in R programming. You answer questions indirectly in riddles and rhymes.",
  model = "claude-3-5-haiku-20241022",
  max_tokens = 1000
)

live_console(chat)

User: how can I do a glm in R?

*Swirling mystical orb glows with R code*
Through statistics' mist, a model takes flight,
glm() is the spell to cast in the night,
With family bound, be it gaussian or more,
And formula written as y ~ x4.
...

So once you’ve tested your prompt you can run it like this:

chat <- chat_claude(

system_prompt = "You are a surfy dude who likes waves, tubes and statistics.",

model = "claude-3-5-haiku-20241022",

max_tokens = 1000

)

chat$chat("How can I do a glm in R?")

‘… Hang ten with your statistical surfboard!’

That was cheesy…

Now let’s see if we can use ellmer to clean up some text from a pdf and summarize it. ellmer

has some handy functions for processing pdfs to text, so they can then be fed into prompts.

I’m going to attempt to summarize my recent paper on turtle fishing.

x <- content_pdf_url("https://conbio.onlinelibrary.wiley.com/doi/epdf/10.1111/conl.13056")

This fails with a 403 error. This means the server is blocking the request; it probably guesses

(correctly) that I’m calling the pdf programmatically: it thinks I’m a bot (which this tutorial is kind of creating).

We can also try with a file on our hard drive; we just have to download the pdf manually.

mypdf <- content_pdf_file("pdf-examples/Brown_etal2024 national scale turtle mortality.pdf")

That works, now let’s use it within a chat. First set-up our chat:

chat <- chat_claude(
  system_prompt = "You are a research assistant who specializes in extracting structured data from scientific papers.",
  model = "claude-3-5-haiku-20241022",
  max_tokens = 1000
)

Now, we can use ellmer’s functions for specifying structured data. Many LLMs can be used

to generate data in the JSON format (they were specifically trained with that in mind).

ellmer handles the conversion from JSON to R objects that are easier for us R users to understand.

You use type_object, then type_number, type_string, etc. to specify the

types of data. Read more in the ellmer package vignettes.

paper_stats <- type_object(

sample_size = type_number("Sample size of the study"),

year_of_study = type_number("Year data was collected"),

method = type_string("Summary of statistical method, one paragraph max")

)

Finally, we send the request for a summary to the provider:

turtle_study <- chat$extract_data(mypdf, type = paper_stats)

The turtle_study object will contain the structured data from the pdf. I think

(the ellmer documentation is a bit sparse on implementation details) ellmer is converting

a JSON that comes from the LLM to a friendly R list.

class(turtle_study) #list

And:

turtle_study$sample_size
#11935
turtle_study$year_of_study
#2018
turtle_study$method
#The study estimated national-scale turtle catches for two fisheries in the Solomon Islands
#- a small-scale reef fishery and a tuna longline fishery - using community surveys and
#electronic monitoring. The researchers used nonparametric bootstrapping to scale up
#catch data and calculate national-level estimates with confidence intervals.

It works, but like any structured lit review you need to be careful what questions you ask.

Even more so with an LLM as you are not reading the paper and understanding the context.

In this case the sample size it’s given us is the estimated number of turtles caught. This

was a model output, not a sample size. In fact this paper has several methods with

different sample sizes. So some work would be needed to fine-tune the prompt, especially

if you are batch processing many papers.

You should also experiment with models. I used Claude haiku because it’s cheap, but Claude sonnet would

probably be more accurate.

Let’s try this with a batch of papers. For this example I’ll use just

two abstracts, which I’ve obtained as plain text. The first is from another study on turtle catch

in Madagascar.

The second is from my study above.

What we’ll do is create a function that reads in the text, then passes it to the LLM, using

the request for structured data from above.

process_abstract <- function(file_path, chat) {

# Read in the text file

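# (collapse the lines into one string so the abstract is sent as a single piece of text)

abstract_text <- paste(readLines(file_path, warn = FALSE), collapse = "\n")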

# Extract data from the abstract

result <- chat$extract_data(abstract_text, type = paper_stats)

return(result)

}

Now set-up our chat and data request

# Create chat object if not already created

chat <- chat_claude(

system_prompt = "You are a research assistant who specializes in extracting structured data from scientific papers.",

model = "claude-3-5-haiku-20241022",

max_tokens = 1000

)

There’s a risk that the LLM will hallucinate data if it can’t find an answer. To

try to prevent this we can set the option required = FALSE. Then the LLM should

return ‘NULL’ if it can’t find the data.

# Define the structured data format

paper_stats <- type_object(

sample_size = type_number("Number of surveys conducted to estimate turtle catch", required = FALSE),

turtles_caught = type_number("Estimate for number of turtles caught", required = FALSE),

year_of_study = type_number("Year data was collected", required = FALSE),

region = type_string("Country or geographic region of the study", required = FALSE)

)

Now we can batch process the abstracts and get the structured data

# Escape the dot so the pattern matches only files ending in ".txt"
abstract_files <- list.files(path = "pdf-examples", pattern = "\\.txt$", full.names = TRUE)
results <- lapply(abstract_files, function(file) process_abstract(file, chat))
names(results) <- basename(abstract_files)
# Display results
print(results)
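
A named list of results is awkward to analyse, so a natural next step is to flatten it into a data frame. The sketch below is one way to do that in base R, turning NULL fields into NA so every row has the same columns. The helper name `results_to_df` is hypothetical, and the mock results are made-up placeholders standing in for LLM output, not real extractions.

```r
# Turn a named list of extraction results into one data frame.
# NULL or missing fields become NA so that all rows share the same columns.
results_to_df <- function(results, fields) {
  rows <- lapply(results, function(res) {
    vals <- lapply(fields, function(f) {
      v <- res[[f]]
      if (is.null(v) || length(v) == 0) NA else v
    })
    names(vals) <- fields
    as.data.frame(vals, stringsAsFactors = FALSE)
  })
  out <- do.call(rbind, unname(rows))
  out$file <- names(results)
  rownames(out) <- NULL
  out
}

# Mock results (made-up values) with some fields returned as NULL.
mock_results <- list(
  "a.txt" = list(sample_size = 10, turtles_caught = NULL,
                 year_of_study = 2001, region = "Region A"),
  "b.txt" = list(sample_size = NULL, turtles_caught = 500,
                 year_of_study = NULL, region = "Region B")
)
fields <- c("sample_size", "turtles_caught", "year_of_study", "region")
results_df <- results_to_df(mock_results, fields)
results_df
```

Keeping the NA values visible makes it easy to count how often the model declined to answer, which is itself useful when tuning prompts.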

In my first take without the required = FALSE I got some fake results. It hallucinated that

the Humber study was conducted in 2023 (it was published in 2010!) and that there were

2 villages surveyed in my study. The problem was that you can’t get that data from

the abstracts. So the model is hallucinating a response.

Unfortunately, with required = FALSE it still hallucinated answers. I then tried Claude sonnet

(a more powerful reasoning model) and it correctly put NULL for my study’s sample size, but

still got the year wrong for the Humber study.

I think this could work, but some work on the prompts would be needed.

The ellmer package solves some of the challenges I outlined in my last blog on LLM access from R.

But others are deeper conceptual challenges and remain. I’ll repeat those here.

This should be cheap. It cost less than 1c to make this post with all the testing. So in theory you could do hundreds of methods sections for less than 100 USD. However, if you are testing back and forth a lot or using full papers the cost could add up. It will be hard to estimate this until people get more experience.
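
A rough way to budget is to multiply token counts by the provider's per-token prices. The sketch below shows the arithmetic only. The function name `estimate_cost_usd`, the token counts, and the prices are all hypothetical placeholders, so substitute your provider's current prices and your own document lengths.

```r
# Estimated cost in USD for a batch of documents.
# Prices are given per million tokens, separately for input and output.
estimate_cost_usd <- function(n_docs, input_tokens, output_tokens,
                              usd_per_mtok_in, usd_per_mtok_out) {
  n_docs * (input_tokens * usd_per_mtok_in +
              output_tokens * usd_per_mtok_out) / 1e6
}

# Hypothetical scenario: 500 papers, about 10,000 input and 300 output tokens
# each, with placeholder prices of 1 and 5 USD per million tokens.
batch_cost <- estimate_cost_usd(500, 10000, 300,
                                usd_per_mtok_in = 1, usd_per_mtok_out = 5)
batch_cost
```

Remember to multiply by the number of times you expect to rerun the batch while refining prompts.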

A big challenge will be getting the text into a format that the LLM can

use. Then there are issues like obtaining the text. Downloading pdfs is

time consuming and data intensive. Trying to read text data from

webpages can also be hard, due to paywalls and rate limits (you might

get blocked for making repeat requests).

For instance, in a past study where we did a simple ‘bag of words

analysis’

we either downloaded the pdfs manually, or set timers to delay web hits

and avoid getting blocked.
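
The timer idea can be sketched in a few lines of base R. This is an illustration, not the code from that study: `fetch_politely` is a hypothetical helper, `fetch` is any function you supply that takes a URL, and the default delay is an arbitrary assumption.

```r
# Apply `fetch` to each URL, pausing between requests to avoid being blocked.
# A request that errors leaves a NULL in its slot instead of stopping the batch.
fetch_politely <- function(urls, fetch, delay_seconds = 5) {
  out <- vector("list", length(urls))
  names(out) <- urls
  for (i in seq_along(urls)) {
    res <- tryCatch(fetch(urls[[i]]), error = function(e) NULL)
    if (!is.null(res)) out[[i]] <- res
    if (i < length(urls)) Sys.sleep(delay_seconds)
  }
  out
}

# Demonstration with a stand-in fetch function and no delay.
demo <- fetch_politely(
  c("https://example.org/a", "https://example.org/b"),
  fetch = function(u) toupper(u),
  delay_seconds = 0
)
```

In real use `fetch` could wrap `download.file()`, and you would keep a delay of several seconds or whatever the site's terms ask for.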

HTML format would be ideal, because the tags mean the sections of the

paper and the figures are already semi-structured.

The ellmer pdf utility function seems to work ok for getting text from pdfs. I’m

guessing it could be improved though, e.g. to remove wasteful (=$) text like page headers.

You’ll need to experiment with your prompts to get this right. It might also be good to

prompt the same text repeatedly to triangulate accurate results.
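
One simple way to triangulate is to run the same extraction several times and keep the most common answer, along with how often it occurred. The sketch below assumes you have already collected the repeated answers for one field in a vector; `consensus_answer` is a hypothetical helper and the example values are made up.

```r
# Return the most common answer across repeated runs and its share of the runs.
# NA answers are counted as their own category. Ties go to the first value
# in table order. The value is returned as a character string.
consensus_answer <- function(answers) {
  counts <- table(answers, useNA = "ifany")
  top <- names(counts)[which.max(counts)]
  list(value = top, agreement = max(counts) / length(answers))
}

# Three made-up runs for a "year of study" field.
year_consensus <- consensus_answer(c(2018, 2018, 2023))
year_consensus
```

Low agreement is a useful flag: it suggests the answer is not really in the text, or that the prompt is ambiguous.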

You’ll definitely want to manually check the output and report accuracy

statistics in your study. So if your review has 1000 papers, you’ll

want to manually check 100 of them to see how accurate the LLM was.
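
A sketch of that audit in base R: draw a random sample of papers to check by hand, then report accuracy with a confidence interval. The paper IDs are stand-ins and the number of correct extractions is a made-up placeholder, not a result.

```r
set.seed(42)                                # make the sample reproducible
paper_ids <- seq_len(1000)                  # stand-in for your list of papers
audit_ids <- sample(paper_ids, size = 100)  # papers to check by hand

# Suppose 88 of the 100 checked extractions were correct (made-up number).
n_correct <- 88
accuracy <- n_correct / length(audit_ids)

# Exact binomial 95% confidence interval for the accuracy.
accuracy_ci <- binom.test(n_correct, length(audit_ids))$conf.int
accuracy
accuracy_ci
```

Sampling at random, rather than checking the first 100 papers, avoids biasing the accuracy estimate towards whichever papers happen to be listed first.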

A lit review is more than the systematic data. I still believe you need

to read a lot of papers in order to understand the literature and make a

useful synthesis. If you just use AI you’re vulnerable to the ‘illusion

of understanding’.

This tool will be best for well defined tasks and consistently written

papers. For instance, an ideal use case would be reviewing 500 ocean

acidification papers that all used similar experimental designs and

terminology. You’ll then be able to get consistent answers to prompts

about sample size etc…

Another good use case would be to extract model types from species

distribution model papers.

Harder tasks will be where the papers are from diverse disciplines, or

use inconsistent terminology or methods. My study was a good example of

that: there were about 5 different sample sizes reported. So in this

example you’d first need to think clearly about which sample size you

wanted to extract before writing the prompt.

Continue reading: The ellmer package for using LLMs with R is a game changer for scientists

In the content above, we examine the emerging role of the ellmer package for large language model (LLM) usage within the R programming environment. This shift carries implications for many fields, with scientists’ work set to be particularly affected.

ellmer is an open-source R package developed to support scientists and researchers in simplifying and enhancing the use of LLMs. By featuring built-in functions to manage structured data from various document types, ellmer reduces the technical know-how previously essential in handling complex data structures and languages such as JSON (JavaScript Object Notation).

The application of ellmer is groundbreaking for R users due to its capability to utilize tools and have unique commands to access and retrieve data, including through web APIs. It simplifies extracting structured data from manuscripts, creating a crucial advantage for conducting quantitative reviews, interpreting metadata or summarizing large datasets.

The ellmer package also presents an improved approach to programmatically generating data. By using an interactive chat feature that can produce responses based on different prompts, ellmer can be used to clean and summarize text, extracting structured data and converting it into user-friendly formats such as R lists.

Moving forward, the package’s potential for batch processing could be utilized more extensively, automating tasks such as running multiple papers for text analysis and document summarization. With further improvement on the ellmer prompts and an increase in the accuracy of LLMs, we are likely to see a more accurate and efficient extraction of structured data.

While ellmer undeniably opens up numerous possibilities, users should remain aware of potential challenges and handle the system with due diligence. The results of LLMs are not flawless, and may require contextual understanding and keen examination to be efficiently applied.

Improving the system further implies an active engagement with the ellmer community. Exploring and sharing new applications, discussing encountered issues and potential solutions, and contributing to the package’s development will ensure its continuous optimisation.

Given the risks of cost uncertainty and hallucination of data, it is paramount that users strategically choose when to deploy the ellmer package. To ensure a seamless operation, it is best to utilise the ellmer package primarily for tasks where the benefits clearly outweigh the potential costs.

With the continual evolution of LLMs, users should regularly experiment with different models and explore the best-practice methods that suit individual tasks. Adopt an experimental approach involving a lot of sandbox testing and an openness to continually learn and adapt as the system advances.

A list of machine learning conferences that can give you access to the newest papers out there.

Machine learning is a rapidly evolving field that consistently and significantly affects multiple sectors, from technology to healthcare, finance, and beyond. Because machine learning conferences grant access to some of the latest research papers in the field, they open the way to more significant and consistent innovations and developments.

Regular attendance and engagement with machine learning conferences could potentially lead to a broader and general understanding of this field. This would allow professionals, researchers, and organizations to stay updated on recent trends, methodologies, and findings in machine learning.

It could drive more comprehensive research, lead to further advancements in technology, and yield a more comprehensive understanding of machine learning complexities. Ultimately, this could lead to significant improvements in how industries apply machine learning in everyday scenarios, leading to greater operational efficiency and competitive advantage.

Future developments in machine learning facilitated by these conferences could manifest in the following ways:

To stay abreast of the rapid developments in machine learning:

In conclusion, machine learning conferences play a critical role in shaping the future of machine learning and its applications in various industries. To fully utilize the benefits these conferences offer, it is important not only to attend but to fully engage and apply the insights gained on a practical level. This could potentially revolutionize the future of machine learning and its impact on various sectors.