by jsendak | Aug 19, 2025 | Art

The Power of Art: Reflecting on History and Exploring Contemporary Themes

Throughout history, art has served as a powerful vehicle for expression, reflection, and communication. From the ancient cave paintings of Lascaux to the masterpieces of the Renaissance, art has played a crucial role in shaping our understanding of the world and ourselves. It has the ability to transcend language barriers, cultural divides, and time itself, offering a glimpse into the soul of humanity.

Today, in an increasingly digital and interconnected world, the role of art remains as important as ever. Artists continue to push boundaries, challenge norms, and provoke thought through their creations. With each brushstroke, sculpture, or photograph, they explore complex themes such as identity, politics, social justice, and the environment, inviting us to pause, reflect, and engage with the world around us.

SWANFALL ART at Mall Galleries

This month, SWANFALL ART returns to Mall Galleries in London with its annual exhibition, showcasing a diverse range of works from emerging and established artists. With a focus on contemporary themes and innovative techniques, the exhibition promises to be a thought-provoking experience for art enthusiasts and casual visitors alike.

As you wander through the galleries, take a moment to consider the power of art in shaping our past, present, and future. Allow yourself to be inspired, challenged, and moved by the beauty and complexity of the works on display. For in the hands of talented artists, art has the ability to transform, connect, and elevate the human experience.

SWANFALL ART returns to Mall Galleries, London this month with its annual exhibition.

Read the original article

by jsendak | Aug 19, 2025 | DS Articles

[This article was first published on

Open Analytics, and kindly contributed to

R-bloggers].

Cloud providers are no longer just offering traditional x86-based servers;

ARM-based servers are now becoming a serious alternative. And for good reason:

they’re often far more cost-effective and power-efficient than their x86

counterparts. For instance, we found a provider offering a server with 16

virtual cores and 32 GB of RAM for just €30 per month. To be realistic, most

providers charge between €100 and €200 per month for such a server. These

more expensive servers usually have fewer customers on a single server, improving

the real-life performance. In this post, we’ll put one of these budget-friendly

ARM servers to the test. Of course, similar results can be achieved with an

Intel or AMD server. The goal is to set up a production-grade ShinyProxy

environment, designed to host a single public app, and see how many concurrent

users it can realistically handle. (And yes, the title probably gives a hint

already!).

Although this post focuses on a specific use case, the same principles also

apply when running multiple apps with authentication.

Server selection

The first step is to pick a cloud provider and order a server. Choose a

datacenter that’s located close to your end users to minimize latency and

improve performance. Most providers also allow you to resize your server later

by adding more CPU or RAM, so you can start small and scale up as demand grows.

Make sure the server comes with a public IP address, since there is no need for

a load balancer in this setup.

When it comes to the operating system, you can use any Linux distribution you

prefer. In this tutorial, we’ll use Ubuntu 24.04. Once your server is

provisioned, the next step is to connect via SSH. Usually your cloud provider

provides documentation that explains how to do this.

As a final prerequisite, you’ll need a domain name. This makes it easier to

access your server using a human-friendly address and is also necessary for

configuring TLS. You can use either a root domain (e.g. example.com) or a

subdomain (e.g. shinyproxy.example.com). In your domain’s DNS settings, create

an A record that points the domain or subdomain to your server’s public IP

address.
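
Before moving on, it can be worth confirming that the A record actually resolves to the server. The following is a minimal Python sketch and not part of the original setup: `points_to` and `resolve_ipv4` are hypothetical helper names, and the hostname and IP address mentioned afterwards are placeholders.

```python
import socket
from typing import Callable, List


def resolve_ipv4(hostname: str) -> List[str]:
    """Return the IPv4 addresses the system resolver reports for hostname."""
    # gethostbyname_ex returns (canonical_name, aliases, ip_addresses).
    _, _, addresses = socket.gethostbyname_ex(hostname)
    return addresses


def points_to(hostname: str, expected_ip: str,
              resolver: Callable[[str], List[str]] = resolve_ipv4) -> bool:
    """Check whether hostname resolves to expected_ip (hypothetical helper)."""
    try:
        return expected_ip in resolver(hostname)
    except OSError:
        # socket.gaierror is a subclass of OSError: the name does not resolve (yet).
        return False
```

Calling `points_to("shinyproxy.example.com", "203.0.113.10")` with your own domain and public IP should return `True` once the DNS change has propagated. The `resolver` argument only exists so the check can be exercised without network access.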

Installation

With the server up and running, the next step is to install the ShinyProxy

Operator. This tool takes care of the ShinyProxy installation process as well as

all the required side components, so you don’t have to manage them manually. For

detailed instructions, follow the

official documentation.

For the best setup, make sure to enable TLS and the monitoring stack. At this

point you should be able to log in and start the demo app.

Inspecting the resource consumption of the app

With ShinyProxy deployed, you can deploy your app. Once again, the

documentation contains

everything you need to know. To keep things simple, you can build the Docker

image on the server. For example, clone your Git repository with all your code on the

server and build the image using Docker. This way, you don’t need a container

registry. After you have added the app to the ShinyProxy configuration, you can start

it through ShinyProxy. In order to know how many concurrent

users are supported by the server, it’s a good idea to first have a look at the

resources consumed by the app. Start the app, perform some user actions and run

the following command:

sudo docker stats

The output will be similar to:

The last line in the screenshot shows the container running our Shiny app. It

uses about 125 MiB of RAM and very little CPU time. Keep in mind that we’re

working with a small demo app; CPU usage will increase if your app processes

more data or performs heavier computations. Most Shiny apps typically use less

than 500 MiB of RAM, but some can consume multiple gigabytes, especially when

loading large datasets. To keep our example realistic, we’ll assume each app

requires 500 MiB of RAM. The other system components (including ShinyProxy) also

consume some memory. In the screenshot, these consume about 1.5 GiB. ShinyProxy

generally requires up to 2 GiB of RAM. To avoid degrading server performance,

it’s a good practice to leave a few gigabytes free. For this setup, we’ll

reserve 4 GiB for ShinyProxy and the server itself, leaving 28 GiB available for

the Shiny containers. With each container using 0.5 GiB, this allows us to run

up to 56 containers concurrently.
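
The memory budget above can be written out as a short calculation. This is a sketch in Python using the figures from this post (32 GiB in total, 4 GiB reserved, 0.5 GiB per container); the function name `max_containers` is purely illustrative and not part of ShinyProxy.

```python
def max_containers(total_gib: float, reserved_gib: float,
                   per_container_gib: float) -> int:
    """Number of app containers that fit in the RAM left after the reserve."""
    if per_container_gib <= 0:
        raise ValueError("per_container_gib must be positive")
    available_gib = total_gib - reserved_gib
    if available_gib <= 0:
        return 0
    # Round down: a container that only partially fits does not count.
    return int(available_gib // per_container_gib)


# Figures from this post: 32 GiB server, 4 GiB reserved, 0.5 GiB per app.
print(max_containers(32, 4, 0.5))  # 56
```

Plugging in the 125 MiB measured for the demo app (`125 / 1024` GiB) gives 229 containers, which shows how much headroom the 500 MiB assumption leaves.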

Optimizing the configuration

Originally, ShinyProxy created a new container for each user. While this approach

is simple and improves security, it’s not ideal for hosting a public app with

many users. Fortunately, for quite some time now, ShinyProxy has had excellent

support for what we

call pre-initialization and container-sharing.

With this feature, a single container can serve multiple users simultaneously.

Since the containers are pre-initialized, loading times are minimal. However,

each Shiny container still has a limit on the number of users it can handle. For

simple dashboards, you can typically expect about 20 concurrent users per

container. In our example, we’ll assume 10 concurrent users per container. Given

our 56 containers, this setup allows the server to handle up to 560 concurrent

users. To stay on the safe side and avoid overloading the server, we’ll round

this down to 500 concurrent users.

With these numbers in mind, it’s time to adjust the configuration. Let’s start

with the app configuration.

proxy:
  specs:
    - id: my-app
      container-image: openanalytics/shinyproxy-demo
      seats-per-container: 10
      max-total-instances: 500
      minimum-seats-available: 500

The seats-per-container: 10 setting tells ShinyProxy that each container can

handle 10 users or “seats”. To prevent overloading the server, we limit the

total number of concurrent users to 500 with the max-total-instances: 500

setting. If more than 500 users try to access the app at the same time, they

will see a message indicating that not enough capacity is available. Additionally, the

minimum-seats-available: 500 setting ensures that ShinyProxy pre-creates 50

containers and keeps them running, so users can start the app immediately

without waiting for containers to initialize.
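
The relationship between these three settings can be checked with a few lines of arithmetic. The sketch below is Python, not ShinyProxy code: `plan_seats` is a hypothetical helper, and the ceiling of 56 containers is the memory estimate made earlier in this post.

```python
import math


def plan_seats(target_users: int, seats_per_container: int,
               container_ceiling: int) -> dict:
    """Derive the app settings for a given number of concurrent users."""
    if target_users <= 0 or seats_per_container <= 0:
        raise ValueError("target_users and seats_per_container must be positive")
    # Each container offers a fixed number of seats, so round up.
    containers = math.ceil(target_users / seats_per_container)
    if containers > container_ceiling:
        raise ValueError(
            f"{containers} containers needed, but only {container_ceiling} fit in RAM"
        )
    return {
        "seats-per-container": seats_per_container,
        "max-total-instances": target_users,
        "minimum-seats-available": target_users,
        "pre-created containers": containers,
    }


plan = plan_seats(target_users=500, seats_per_container=10, container_ceiling=56)
print(plan["pre-created containers"])  # 50
```

Asking for 600 users with the same inputs raises an error, because 60 containers would exceed the 56 that fit in the memory budget.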

In this example, we estimated the maximum number of concurrent users and limited

the server to that value. Since we’re deploying only one app, it makes sense to

create all containers at startup. However, if you are hosting multiple apps on a

single server (which ShinyProxy fully supports), it’s better to start with just a

few containers per app. ShinyProxy automatically creates new containers as

users begin accessing the apps, ensuring resources are used efficiently.

While you are updating the configuration of ShinyProxy, add (or update) the

following settings as well:

proxy:
  hide-navbar: true
  landing-page: SingleApp
  authentication: none
  # ...
memory-limit: 2Gi

The first property hides the ShinyProxy navbar, which is often unnecessary when

hosting a single public app. By setting landing-page: SingleApp, users are

automatically redirected to the app when they visit the landing page. Since this

is a public app, we also disable authentication. Finally, we limit the memory

that ShinyProxy can use to ensure there is always enough RAM available for the

Shiny apps.

After updating the configuration, the operator creates a new ShinyProxy instance

with the updated settings. This process usually takes a few minutes. You might

see a “forbidden” page if you previously logged in using a username and

password. This happens only once when switching from authentication: simple to

authentication: none. To fix it, simply clear the ShinyProxy cookies in your

browser.

As soon as ShinyProxy starts, it creates 50 new containers. When you launch your

app, it loads almost instantly, thanks to the pre-initialized containers. We

tested this setup with 500 browser sessions, and the system handled them

effortlessly. Both ShinyProxy and the Shiny app responded quickly, and the server

load remained minimal. Of course, actual performance depends on the resource

consumption of your app, but ShinyProxy is designed to manage these variations

efficiently. The setup demonstrates that even a single ARM-based server can

easily manage hundreds of concurrent users, delivering robust, production-grade

performance.

The first panel in the screenshot shows how long users wait before being

assigned a seat, and the second panel shows the number of available seats. When

we launched 500 sessions within just two minutes, the available seats dropped

quickly. Even so, loading times stayed at only a few milliseconds, which makes

it clear that ShinyProxy remains highly responsive during a sudden surge of

hundreds of new sessions.

Once all apps are loaded, ShinyProxy’s CPU usage drops and remains low, as shown

by the docker stats output:

Monitoring

Although the monitoring stack was enabled when ShinyProxy was first deployed,

disabling authentication means that Grafana is no longer accessible through

ShinyProxy. A simple workaround is to deploy a second ShinyProxy instance that

still uses authentication. This private instance can also serve as a testing

environment for new development versions of your app before deploying them to

the public production server. To set this up, create a new configuration file in

the input directory, for example private.shinyproxy.yaml, and add the

following code:

server:
  servlet:
    context-path: /private

Be sure to update the proxy.realm-id, for example by setting it to private.

After deploying the server, you can access it at the same hostname as the main

server, but under the /private/ sub-path. For instance, if your server is

available at http://localhost/, the private instance is accessible at

http://localhost/private/, and Grafana at http://localhost/private/grafana/.

To view the logs and metrics of the public instance, you need to adjust the

namespace variable in the dashboards.

Conclusion

With the introduction of the ShinyProxy Operator for Docker, setting up a

production-grade ShinyProxy server has never been easier. This post demonstrates

that only minimal configuration is needed to handle a large number of concurrent

users. Expensive servers or clusters aren’t required; a single server is

sufficient. If you need to support more users, simply scale up the server: in

most cases, doubling CPU and RAM roughly doubles the number of concurrent users.

Compared to the resource usage of the Shiny apps themselves, ShinyProxy consumes

very little CPU and RAM, so it rarely becomes a bottleneck even under heavy

load.

Don’t hesitate to send in questions or suggestions and have fun with ShinyProxy!

Continue reading: Affordable Shiny app hosting for 500 concurrent users

Long-Term Implications and Future Developments

The shift towards using ARM-based servers instead of traditional x86-based servers has key long-term implications. These servers have the potential to provide cost-effective and power-efficient solutions for users, which will inevitably drive competitiveness among cloud providers and ultimately lower costs. Furthermore, the adoption of these servers will open more opportunities for app developers, as hosting solutions such as ShinyProxy become more affordable and accessible.

Potential ARM Success in the Cloud Server Market

ARM-based servers have a promising future in the cloud server market as they provide a significant cost-effective and power-efficient alternative to traditional x86-based servers. This may encourage cloud service providers to invest more in ARM architecture, resulting in advanced and more efficient cloud services. The expected growth of ARM-based servers further points towards a potential drop in the price of server hosting, making it affordable for startups and small business owners. This affordability will also serve to drive competitiveness among providers, benefiting users and the market as a whole.

The Future of Application Hosting

As demonstrated through the deployment of ShinyProxy on an ARM-based server, platforms such as ShinyProxy have the potential to revolutionize the way applications are hosted. With the possibility of limiting the maximum number of concurrent users and pre-creating containers at startup, ShinyProxy and similar tools will provide app developers with unprecedented control over their hosted applications. Furthermore, the efficient use of resources by such platforms means that even single servers can support high-traffic applications, and scalability becomes seamless and cost-effective.

Actionable Advice

Consider the Shift to ARM-based Servers

If you are a business owner considering an upgrade or installation of new servers, you should contemplate ARM-based servers. Their cost-effectiveness and power-efficiency will help lower your overheads and improve performance. This shift might also future-proof your organization for potential cloud service improvements centered around ARM servers.

Utilize Affordable Application Hosting Solutions

ShinyProxy’s ability to efficiently manage applications on a server makes it a favorable tool for developers. Its efficient management of resources means that businesses can host their applications without requiring expensive server or cloud solutions. If affordability and high-traffic capability are vital factors for your application hosting needs, consider deploying your apps through ShinyProxy.

Prepare for the Future

Given the potential growth and future impact of ARM-based servers, staying informed about this technology could be crucial for your enterprise. Proactively incorporating modern and future-proofed tools like ShinyProxy into your technology stack can place your business in a better position to adapt to future industry changes and trends.

Read the original article

by jsendak | Aug 19, 2025 | DS Articles

This article explains how to install and set up Couchbase and start easily storing data.

Analyzing the Key Points of Couchbase Installation and Setup

By examining the information provided in the previous piece regarding the installation and setup of Couchbase, we can uncover potential long-term implications and future advancements. This document will aim to provide additional insights and advice based on these possible future scenarios.

Extended Implication and Future Developments

Couchbase, as a NoSQL database, offers a distributed architecture designed to perform at scale. This should instigate future advancements aimed at improving overall performance, scalability, flexibility, and agility. The following are possible future developments.

- Enhanced Performance: Couchbase is likely to introduce performance enhancements to support the growing number of businesses embracing big data analytics.

- Increased Scalability: As data volumes grow, Couchbase will have to improve its scalability features to accommodate this surge.

- Upgraded Security: With growing incidences of cybercrime, future versions of Couchbase are expected to provide advanced security features.

Potential Long-term Implications

“The continuous use and development of Couchbase potentially has several long-term implications.”

- A Shift towards NoSQL Databases: More businesses could transition to NoSQL databases like Couchbase given their advanced features.

- Emergence of New Services: There could be an increase in services centered around Couchbase, such as consulting, maintenance, and support.

- Increased Demand for Couchbase Skills: As more organizations implement Couchbase, there will be a greater demand for professionals with Couchbase skills.

Actioning Insights for Future Success

To capitalize on these implications and developments, here are some strategies organizations and individuals might consider to stay ahead.

- Up-skilling or Re-skilling: Organizations should invest in training their workforce on Couchbase while individuals should consider acquiring Couchbase skills due to its growing demand.

- Invest in Couchbase Managed Services: To minimize the complexity of managing and optimizing the database, businesses should consider investing in Couchbase managed services.

- Plan for scalability: Businesses should prepare their infrastructures to handle the increased data volumes and performance needs that will come with advancing Couchbase versions.

By staying attuned to the ongoing developments of Couchbase and strategically implementing the above insights, organizations and individuals can be better poised for success in the dynamic tech landscape.

Read the original article

by jsendak | Aug 19, 2025 | DS Articles

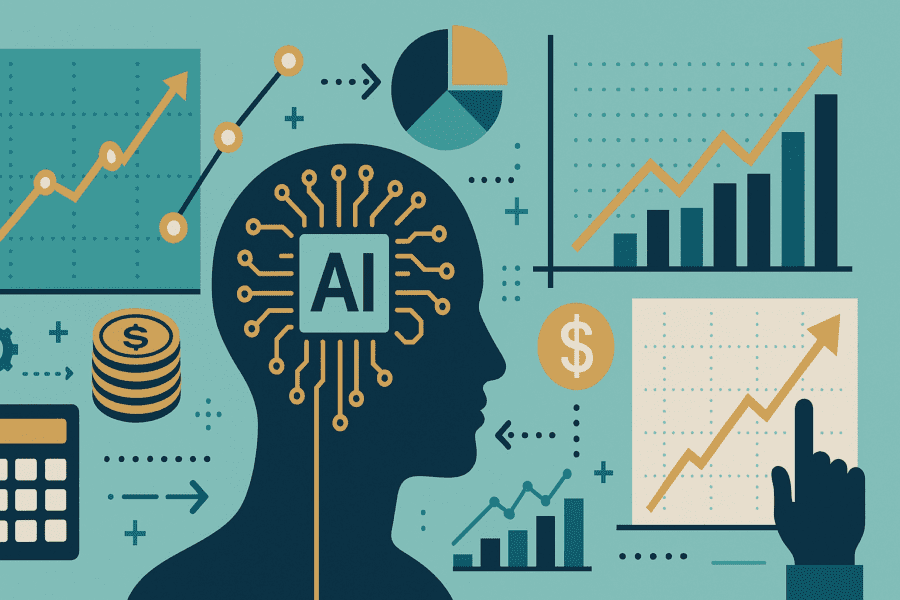

Discover how AI is transforming financial forecasting for SMBs and enterprises from various industries. From real-time insights to predictive scenario planning, AI tools are helping companies improve accuracy, reduce risk, and adapt faster to market shifts.

The Transformation of Financial Forecasting through AI

Artificial Intelligence (AI) is significantly impacting various sectors, including the financial markets. From small and medium-sized businesses (SMBs) to large enterprises, AI plays an essential role in revolutionizing financial forecasting. AI tools’ abilities range from providing real-time insights to carrying out predictive scenario planning, key factors in improving accuracy, minimizing risks, and facilitating rapid adaptation to changes in the market. The importance of AI in finance is increasingly becoming apparent, but its long-term implications and potential future advancements are worth examining.

Future Developments and Long-term Implications

As AI’s capacities continue to evolve, it’s critical to explore these tools’ capability to alleviate several financial forecasting challenges. Companies utilizing AI can anticipate a transformative shift in their approach towards financial planning and forecasting.

“AI tools are the future of financial market analysis and decision making. They have the potential to make complex procedures simple, accurate, and time-effective” -Unknown Technology Expert

SMBs and enterprises investing in AI technologies may find significant returns on investment (ROI) over time. AI can unlock greater analytical power for financial forecasting, making it a vital tool in strategic business planning. Implementation of AI in financial forecasting signifies a move towards data-driven decision making, which can encompass everything from day-to-day operations to long-term strategic planning.

Advancements in AI for Financial Forecasting

- Real-time Insights: AI can process and analyze vast amounts of data almost instantly, providing real-time insights into market trends and customer behaviors. This speed and accuracy can help businesses stay ahead of their competitors.

- Predictive Scenario Planning: AI has the ability to simulate multiple financial scenarios based on historical data. This opens up opportunities for companies to prepare for various market situations, facilitating better business agility and resilience.

- Risk Management: AI can identify patterns and trends in data that human analysts may overlook. This data analysis can enable more accurate risk assessment, thereby reducing potential losses and improving overall financial security.

Actionable Advice for SMBs and Enterprises

For SMBs and enterprises scanning the horizon for a competitive edge in financial forecasting, seamless integration and utilization of AI tools should be a top priority. Here are some steps companies can take to start leveraging AI for financial forecasting:

- Invest in AI Tools: Companies should start by identifying and investing in suitable AI tools for their financial forecasting needs. This requires an assessment of their current data analysis capabilities and how AI might enhance them.

- Training and Skill Development: To efficiently use AI tools, businesses must ensure their staff possess the necessary skills to interpret AI outputs. This involves relevant training and development programs.

- Continual Evaluation: Once implemented, the effectiveness of these AI tools should be regularly checked. Through periodic evaluation, companies can gain insights into how well the AI tool matches the business’s changing needs.

By harnessing AI’s power, SMBs and large-scale enterprises can profoundly transform their financial forecasting, enhancing precision, reducing risks, and enabling prompt responses to market changes.

Read the original article

by jsendak | Aug 19, 2025 | Computer Science

arXiv:2508.12020v1 Announce Type: new

Abstract: The Audio-to-3D-Gesture (A2G) task has enormous potential for various applications in virtual reality and computer graphics, etc. However, current evaluation metrics, such as Fréchet Gesture Distance or Beat Constancy, fail at reflecting the human preference of the generated 3D gestures. To cope with this problem, exploring human preference and an objective quality assessment metric for AI-generated 3D human gestures is becoming increasingly significant. In this paper, we introduce the Ges-QA dataset, which includes 1,400 samples with multidimensional scores for gesture quality and audio-gesture consistency. Moreover, we collect binary classification labels to determine whether the generated gestures match the emotions of the audio. Equipped with our Ges-QA dataset, we propose a multi-modal transformer-based neural network with 3 branches for video, audio and 3D skeleton modalities, which can score A2G contents in multiple dimensions. Comparative experimental results and ablation studies demonstrate that Ges-QAer yields state-of-the-art performance on our dataset.

Expert Commentary: Exploring Human Preference and Quality Assessment for AI-generated 3D Human Gestures

The Audio-to-3D-Gesture (A2G) task holds significant potential for various applications in virtual reality, computer graphics, and beyond. However, current evaluation metrics like Fréchet Gesture Distance and Beat Constancy may not accurately capture human preference for generated 3D gestures. This gap highlights the need to delve deeper into understanding human perception and developing objective quality assessment metrics for AI-generated 3D human gestures.

Multi-disciplinary in nature, this research bridges the fields of multimedia information systems, animation, artificial reality, augmented reality, and virtual reality. By introducing the Ges-QA dataset, which comprises 1,400 samples with multidimensional scores for gesture quality and audio-gesture consistency, the authors have laid a solid foundation for further exploration in this domain.

The inclusion of binary classification labels to determine emotional matching between generated gestures and audio adds another layer of complexity to the task. This dataset enables the development of a multi-modal transformer-based neural network with separate branches for video, audio, and 3D skeleton modalities. This approach allows for scoring A2G contents across multiple dimensions, providing a more comprehensive assessment of gesture quality.

The comparative experimental results and ablation studies presented in the paper showcase the effectiveness of the proposed Ges-QAer model, demonstrating state-of-the-art performance on the dataset. This research not only contributes to advancing the field of AI-generated 3D human gestures but also underscores the importance of incorporating human preference into evaluation metrics for such tasks.

Read the original article