by jsendak | Mar 22, 2024 | DS Articles

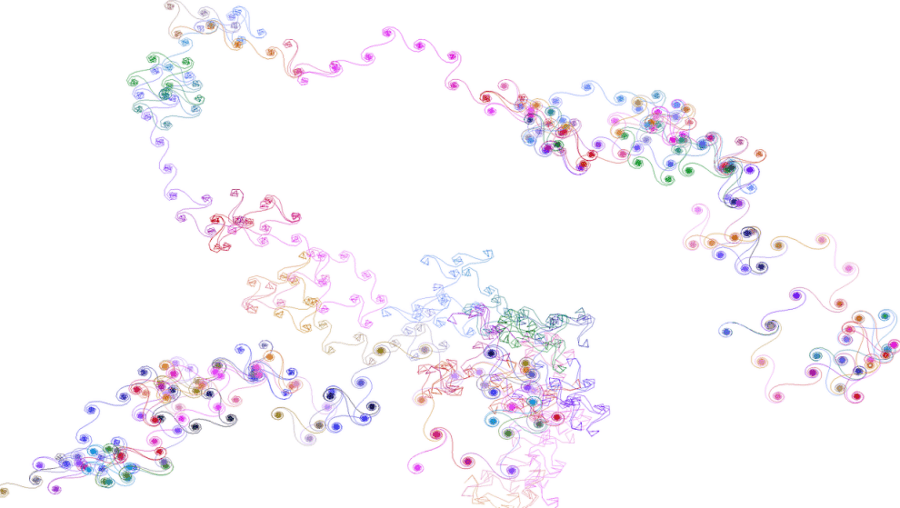

Seven Important Generative AI and Machine Learning Concepts Visually Explained in one-minute Data Animations

Understanding Generative AI and Machine Learning Through Visual Animations

The source text does not summarize the seven concepts themselves, but visual animation as an educational tool for complex subjects like AI and machine learning could significantly improve comprehension and retention. This article discusses the potential long-term implications of such an approach, considers likely future developments, and offers advice on how these insights can be put to use.

Long-term Implications

Presenting multi-layered AI and machine learning concepts in a visually engaging manner holds the promise of wider accessibility and comprehension, bridging gaps between subject matter experts and novices or laypeople. The ability to understand these technologies means that more people can contribute to their evolution, strengthening the potential for innovation and progress.

Potential Future Developments

As visual learning becomes more prevalent, developments could extend beyond the purely academic sphere. We could see more usage of animated explanations within technology, finance, and other industries reliant on data-heavy technical concepts. Additionally, with the permeation of AR and VR technologies, immersive data animations could be the next frontier.

Actionable Advice

Given the potential benefits and developments detailed above, here are a few recommendations to ensure the effective use of data visualizations:

- Embrace visual learning: Whether you’re a researcher, student, teacher, or industry professional, explore and adopt visual learning techniques for complex subjects like AI and machine learning.

- Invest in development: Businesses and educational institutions should consider investing in the development of visual learning tools that can simplify complicated concepts.

- Collaborate cross-industry: Collaboration between the technology, education and animation sectors is essential to create high-quality, accurate and effective visual learning tools.

- Stay tuned to new trends: Keep abreast of evolving trends in visual learning technology, such as AR and VR, to remain at the forefront of innovation.

In summary, the use of visual animations to explain complex AI and machine learning concepts holds immense promise. Harnessing this tool could lead to greater comprehension, innovation, and progress in these critical fields.

Read the original article

by jsendak | Mar 22, 2024 | Namecheap


Understanding the Role and Significance of Nameservers

In the vast network of the internet, where millions of domain names are accessed every second, nameservers play a pivotal role in directing traffic and ensuring each query reaches its correct destination. This article delves into what nameservers exactly are, their importance in the domain name system (DNS), and the practical reasons that might necessitate a change in nameservers for website administrators and domain owners.

The Function and Importance of Nameservers

Nameservers are the internet’s equivalent to a phonebook. Just as a phonebook links names to numbers, nameservers match domain names to IP addresses, enabling browsers to load the website associated with the domain name entered. They serve as a crucial first step in the complex translation process that makes the internet user-friendly and accessible.
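The phonebook analogy can be made concrete with a short Python sketch. The `TOY_RECORDS` table and the `toy_lookup` helper are hypothetical names invented for this illustration (the domain and address are reserved documentation values, not real records), while `system_lookup` simply asks the operating system's resolver via the standard `socket.getaddrinfo` call. It is a simplified picture of name-to-address mapping, not a description of how any particular nameserver is implemented.

```python
import socket
from typing import List, Optional

# Hypothetical in-memory "phonebook". example.test and 192.0.2.10 are
# reserved documentation values, not real DNS records.
TOY_RECORDS = {"example.test": "192.0.2.10"}


def toy_lookup(domain: str) -> Optional[str]:
    """Return the address stored for a domain, or None if it is unknown."""
    # Domain names are case-insensitive and may carry a trailing dot.
    return TOY_RECORDS.get(domain.lower().rstrip("."))


def system_lookup(domain: str) -> List[str]:
    """Ask the operating system's resolver for a domain's IP addresses."""
    infos = socket.getaddrinfo(domain, None, proto=socket.IPPROTO_TCP)
    # Each entry is (family, type, proto, canonname, sockaddr);
    # the first element of sockaddr is the IP address as a string.
    return sorted({info[4][0] for info in infos})
```

Calling `system_lookup` with a real domain requires network access, and the addresses returned depend on the resolver and on the records published at the time of the query.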

When and Why to Change Nameservers

While nameservers faithfully direct traffic across the web, there are instances when changing them becomes necessary or advantageous. This might include switching to a more reliable hosting provider, improving website performance, or managing multiple domains more efficiently. The following sections will explore these situations in detail, along with step-by-step guidance on the process of changing nameservers.

Through a critical examination of nameserver operations and change implications, this article will prepare the reader for an in-depth exploration of a fundamental yet often-overlooked element of the web’s infrastructure. Whether you are a veteran webmaster or just starting, understanding nameservers and how they can be managed is invaluable for maintaining the online presence of any website or application.

Note: Changing your nameservers can result in downtime if not done correctly. It is important to follow the steps carefully to ensure continuous availability of services.


In this article, we will discuss all things nameserver-related, including what they are and why you would need to change them.


by jsendak | Mar 22, 2024 | AI

arXiv:2403.13839v1 Announce Type: new Abstract: PyTorch 2.x introduces a compiler designed to accelerate deep learning programs. However, for machine learning researchers, adapting to the PyTorch compiler to full potential can be challenging. The compiler operates at the Python bytecode level, making it appear as an opaque box. To address this, we introduce `depyf`, a tool designed to demystify the inner workings of the PyTorch compiler. `depyf` decompiles bytecode generated by PyTorch back into equivalent source code, and establishes connections between in-memory code objects and their on-disk source code counterparts. This feature enables users to step through the source code line by line using debuggers, thus enhancing their understanding of the underlying processes. Notably, `depyf` is non-intrusive and user-friendly, primarily relying on two convenient context managers for its core functionality. The project is [openly available](https://github.com/thuml/depyf) and is recognized as a [PyTorch ecosystem project](https://pytorch.org/ecosystem/).

The article “Demystifying the PyTorch Compiler: Introducing depyf” discusses the challenges that machine learning researchers face when adapting to the PyTorch compiler and introduces a tool called depyf to address these challenges. The PyTorch compiler, introduced in PyTorch 2.x, is designed to accelerate deep learning programs but operates at the Python bytecode level, making it difficult for researchers to understand its inner workings. Depyf is a non-intrusive and user-friendly tool that decompiles the bytecode generated by PyTorch back into equivalent source code, allowing users to step through the code line by line using debuggers. By establishing connections between in-memory code objects and their on-disk source code counterparts, depyf enhances users’ understanding of the underlying processes. The project is openly available on GitHub and recognized as a PyTorch ecosystem project.

Demystifying the PyTorch Compiler with Depyf

PyTorch 2.x introduces an innovative compiler designed to accelerate deep learning programs. However, for machine learning researchers, fully harnessing the potential of the PyTorch compiler can be a daunting task. The compiler operates at the Python bytecode level, which often appears as an opaque box, making it challenging to understand its inner workings. To address this issue and empower researchers, we introduce Depyf.
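To give a sense of why bytecode reads as an opaque box, here is a minimal sketch that uses only Python's standard `dis` module. The `scale_and_shift` function and the `opcode_names` helper are hypothetical names chosen for this illustration; the sketch shows ordinary CPython bytecode for a small function and does not involve the PyTorch compiler or depyf.

```python
import dis


def scale_and_shift(x, w, b):
    """A tiny stand-in for a model's forward computation."""
    return x * w + b


def opcode_names(func):
    """List the names of the bytecode instructions CPython generated for func."""
    return [instruction.opname for instruction in dis.get_instructions(func)]


# Printing the full listing shows the stack-machine form of the function:
# dis.dis(scale_and_shift)
```

The exact instruction names vary between Python versions, which is part of what makes bytecode harder to read than the source it came from.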

Depyf is a powerful tool specifically designed to demystify the inner workings of the PyTorch compiler. It decompiles the bytecode generated by PyTorch back into equivalent source code, allowing researchers to gain a deeper understanding of the underlying processes. Moreover, Depyf establishes connections between in-memory code objects and their on-disk source code counterparts, enabling users to step through the source code line by line using debuggers.
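The idea of tying an in-memory code object to an on-disk source file can be sketched with the standard library alone. This is an illustration of the general mechanism under stated assumptions, not depyf's implementation: the `SOURCE` string, the `load_from_disk` helper, and the file name `generated_add_one.py` are all hypothetical.

```python
import os
import tempfile

# Hypothetical source text standing in for decompiled code.
SOURCE = "def add_one(x):\n    y = x + 1\n    return y\n"


def load_from_disk(source: str, directory: str):
    """Write source to a file, then compile it under that file's path."""
    path = os.path.join(directory, "generated_add_one.py")
    with open(path, "w", encoding="utf-8") as handle:
        handle.write(source)
    # Passing the real path as the filename is what ties the in-memory
    # code object to a file that a debugger or traceback can open.
    code = compile(source, path, "exec")
    namespace = {}
    exec(code, namespace)
    return namespace["add_one"], path


dump_dir = tempfile.mkdtemp()
add_one, source_path = load_from_disk(SOURCE, dump_dir)
```

Because `add_one.__code__.co_filename` now points at a file that exists, tools that look up source lines by file name and line number can display the matching line while stepping through the function.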

One of the key features of Depyf is its non-intrusiveness and user-friendly nature. It primarily relies on two convenient context managers for its core functionality. This means that researchers can easily integrate Depyf into their existing PyTorch projects without significant modifications or disruptions to their workflow.

By using Depyf, machine learning researchers can unlock a wealth of insights into the PyTorch compiler’s behavior. They can explore how their deep learning models are translated into Python bytecode and gain visibility into the optimization strategies employed by the compiler. Understanding these inner workings can guide researchers in improving the performance and efficiency of their models.

Depyf is an openly available project and is recognized as a valuable addition to the PyTorch ecosystem. It streamlines the process of interacting with the PyTorch compiler and provides researchers with a new lens through which to explore the underlying themes and concepts of their deep learning programs.

If you’re interested in using Depyf in your projects or simply want to learn more about its capabilities, you can find the project’s GitHub repository at https://github.com/thuml/depyf. Additionally, Depyf is listed as a PyTorch ecosystem project, further validating its importance and relevance in the machine learning community.

The introduction of the PyTorch compiler in version 2.x has been a significant development for deep learning programs. However, one challenge that machine learning researchers face is understanding and utilizing the full potential of this compiler. The PyTorch compiler operates at the Python bytecode level, which can make it difficult to comprehend its inner workings.

To address this issue, the authors of the arXiv paper propose a tool called “depyf.” Depyf aims to demystify the PyTorch compiler by decompiling the bytecode generated by PyTorch back into its equivalent source code. This decompiled source code can then be easily understood and analyzed by researchers.

One of the key features of depyf is its ability to establish connections between in-memory code objects and their on-disk source code counterparts. This feature allows users to step through the source code line by line using debuggers, providing them with a greater understanding of the underlying processes involved in the PyTorch compiler.

Importantly, depyf is designed to be non-intrusive and user-friendly. It primarily relies on two convenient context managers for its core functionality, making it accessible for researchers who may not have extensive expertise in low-level programming or compilers.

The fact that depyf is recognized as a PyTorch ecosystem project and is openly available on GitHub highlights its potential impact and value for the machine learning community. It provides a valuable tool for researchers to gain deeper insights into the PyTorch compiler and optimize their deep learning programs accordingly.

Looking forward, the availability of depyf opens up new possibilities for researchers to experiment with and improve the PyTorch compiler. It could potentially lead to further advancements and optimizations in deep learning programs, as researchers gain a better understanding of the inner workings of the compiler. Additionally, the user-friendly nature of depyf may attract more researchers to explore the PyTorch ecosystem and contribute to its development.

Overall, the introduction of depyf as a tool to demystify the PyTorch compiler is a significant step forward in enhancing the understanding and utilization of this powerful deep learning framework. Its availability and recognition within the PyTorch ecosystem position it as a valuable resource for machine learning researchers, and its impact on the field is likely to continue growing as more researchers adopt and contribute to its development.


by jsendak | Mar 22, 2024 | Computer Science

arXiv:2403.14449v1 Announce Type: cross

Abstract: On March 18, 2024, NVIDIA unveiled Project GR00T, a general-purpose multimodal generative AI model designed specifically for training humanoid robots. Preceding this event, Tesla’s unveiling of the Optimus Gen 2 humanoid robot on December 12, 2023, underscored the profound impact robotics is poised to have on reshaping various facets of our daily lives. While robots have long dominated industrial settings, their presence within our homes is a burgeoning phenomenon. This can be attributed, in part, to the complexities of domestic environments and the challenges of creating robots that can seamlessly integrate into our daily routines.

The Intersection of Robotics and Multimedia Information Systems

The integration of robotics and multimedia information systems has become an increasingly important area of study in recent years. The advancements in robotics technology, coupled with the advancements in multimedia information systems, have opened up new opportunities for the development of intelligent robots that can seamlessly interact with humans in various domains.

Project GR00T, unveiled by NVIDIA, is a prime example of this intersection. This multimodal generative AI model is designed specifically for training humanoid robots, enabling them to perceive and respond to a wide range of sensory inputs. By leveraging multimedia information systems, robots trained using Project GR00T can process and analyze audio, visual, and other types of data in real-time.

One of the key challenges in creating robots that can seamlessly integrate into our daily routines is their ability to understand and interpret the complexities of domestic environments. This is where the multi-disciplinary nature of the concepts in this content becomes particularly important.

Animations and Artificial Reality in Robotics

Animations play a crucial role in the field of robotics as they help in creating realistic and lifelike movements for humanoid robots. By employing techniques from animation and artificial reality, robotics experts can design robots that not only move in a natural manner but also have expressive capabilities to communicate with humans effectively.

Virtual Reality (VR) and Augmented Reality (AR) technologies are also relevant to the field of robotics. These technologies can be used to create simulated environments for training robots, allowing them to learn and adapt to different scenarios without the need for physical interaction. This enhances the efficiency of the training process and helps in developing robots that are better equipped for real-world applications.

Implications for the Future

The unveiling of the Optimus Gen 2 humanoid robot by Tesla further emphasizes the growing importance of robotics in our daily lives. As robots become more prevalent in our homes, the need for seamless integration and interaction with humans becomes essential.

In the wider field of multimedia information systems, the convergence of robotics and AI opens up new avenues for research and development. By harnessing the power of multimodal generative AI models like Project GR00T, we can envision a future where robots not only assist with household tasks but also become companions, caregivers, and teachers in our daily lives.

However, there are also important ethical considerations that must be addressed as robots become more integrated into society. Issues surrounding privacy, safety, and the displacement of human workers need to be carefully examined and accounted for in the development and deployment of robotic technology.

In conclusion, the fusion of robotics with multimedia information systems, animations, artificial reality, and virtual reality holds great promise for reshaping various facets of our lives. It is an exciting area of research and development that brings together expertise from multiple disciplines, leading us towards a future where intelligent robots are seamlessly integrated into our homes and daily routines.


by jsendak | Mar 22, 2024 | AI

arXiv:2403.14077v1 Announce Type: new

Abstract: DeepFakes, which refer to AI-generated media content, have become an increasing concern due to their use as a means for disinformation. Detecting DeepFakes is currently solved with programmed machine learning algorithms. In this work, we investigate the capabilities of multimodal large language models (LLMs) in DeepFake detection. We conducted qualitative and quantitative experiments to demonstrate multimodal LLMs and show that they can expose AI-generated images through careful experimental design and prompt engineering. This is interesting, considering that LLMs are not inherently tailored for media forensic tasks, and the process does not require programming. We discuss the limitations of multimodal LLMs for these tasks and suggest possible improvements.

Investigating the Capabilities of Multimodal Large Language Models (LLMs) in DeepFake Detection

DeepFakes, which refer to AI-generated media content, have become a significant concern in recent times due to their potential use as a means for disinformation. Detecting DeepFakes has primarily relied on programmed machine learning algorithms. However, in this work, the researchers set out to explore the capabilities of multimodal large language models (LLMs) in DeepFake detection.

When it comes to media forensic tasks, multimodal LLMs are not inherently designed or tailored for such specific purposes. Despite this, the researchers conducted qualitative and quantitative experiments to demonstrate that multimodal LLMs can indeed expose AI-generated images. This is an exciting development as it opens up possibilities for detecting DeepFakes without the need for programming.

One of the strengths of multimodal LLMs lies in their ability to process multiple types of data, such as text and images. By leveraging the power of these models, the researchers were able to carefully design experiments and engineer prompts that could effectively identify AI-generated images. This multi-disciplinary approach combines language understanding and image analysis, highlighting the diverse nature of the concepts involved in DeepFake detection.
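A prompt-based evaluation of this kind could be scored roughly as follows. This is a minimal sketch under stated assumptions, not the researchers' method: the `PROMPT` wording, the `parse_verdict` and `accuracy` helpers, and the `ask_model` callable (a stand-in for whatever multimodal model is queried) are all hypothetical.

```python
from typing import Callable, Iterable, Optional, Tuple

# Hypothetical prompt wording; the exact prompts used in the paper are not
# reproduced in this summary.
PROMPT = "Is this image AI-generated? Answer yes or no."


def parse_verdict(reply: str) -> Optional[bool]:
    """Map a free-text reply to True (AI-generated), False (real), or None."""
    words = reply.strip().lower().replace(",", " ").replace(".", " ").split()
    if not words:
        return None
    if words[0] == "yes":
        return True
    if words[0] == "no":
        return False
    return None  # refusal or unclear answer


def accuracy(
    samples: Iterable[Tuple[str, bool]],
    ask_model: Callable[[str, str], str],
) -> float:
    """Fraction of (image_path, is_fake) samples the model labels correctly.

    Replies that cannot be parsed count as wrong.
    """
    total = 0
    correct = 0
    for image_path, is_fake in samples:
        verdict = parse_verdict(ask_model(image_path, PROMPT))
        total += 1
        correct += int(verdict is not None and verdict == is_fake)
    return correct / total if total else 0.0
```

Counting unparsed replies as wrong is one design choice among several; an evaluation could instead report refusals separately so that they do not blur the detection score.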

However, it is crucial to consider the limitations of multimodal LLMs in these tasks. While they have shown promise, there are still challenges to overcome. For instance, the researchers discuss the need for more extensive datasets that accurately represent the wide range of potential DeepFakes. The current limitations and biases of the available datasets can hinder the performance of these models and limit their real-world applicability.

Furthermore, multimodal LLMs may not be able to detect DeepFakes that have been generated using advanced techniques or by sophisticated adversaries who specifically aim to deceive these models. Adversarial attacks on AI models have been a topic of concern in various domains, and DeepFake detection is no exception. To improve the robustness of multimodal LLMs, researchers should explore adversarial training methods and continuously update the models to stay one step ahead of potential threats.

In conclusion, this work highlights the potential of multimodal large language models in DeepFake detection. By combining the strengths of language understanding and image analysis, these models can expose AI-generated media without the need for programming. However, further research and development are necessary to address the limitations, biases, and potential adversarial attacks. As the field of DeepFake detection continues to evolve, interdisciplinary collaboration and ongoing improvements in multimodal LLMs will play a pivotal role in combating disinformation and safeguarding the authenticity of media content.
