by jsendak | Nov 26, 2024 | DS Articles

Learn how to utilize this advanced AI-driven IDE for your work.

Analysis: The Future of AI-Driven IDEs and Their Implications

The text briefly references a new development in the technology industry—an artificial intelligence-driven integrated development environment (IDE). As AI continues to proliferate several facets of our lives, this little piece points towards the growing influence of AI in the development sector. It also suggests a call to action- learning how to leverage this advanced tool in one’s work. Let’s take an in-depth exploration of potential long-term implications and future transformations this technology promises to deliver.

The Long-Term Implications of AI-Driven IDEs:

- Towards Smarter Coding: With AI at their core, IDEs can improve efficiency and accuracy in development workflows. AI can help developers refactor code, spot errors, and even suggest improvements on the fly. These advancements could significantly reduce software development time and costs.

- AI-Assisted Learning: AI-driven IDE can also provide learning support in real time. It can offer suggestions and solutions during the development process which indirectly aids in learning new languages and development practices.

- Automation of Routine Tasks: Coders often spend a large portion of their time doing monotonous tasks such as code reviews and bug fixes. AI-driven IDEs can automate these tasks, freeing developers to focus on more strategic, creative problem solving.

Future Developments:

The future of AI-driven IDEs promises changes that could shape software development in unprecedented ways. Possible developments may include:

- Fully Autonomous Coding: As AI technology evolves, we might witness IDEs creating pieces of software autonomously, potentially opening up a new dimension in software development.

- Improved Collaboration: Future AI-driven IDEs might enhance collaboration experience through features such as improved code sharing or real-time collaboration tools.

- Advanced Debugging: Advanced IDEs could go beyond identifying bugs and step into providing detailed diagnostics and step-by-step guidance on how to address them.

Actionable Advice:

Stay in the loop and quickly adapt to AI-driven IDEs to stay ahead in your career.

These advancements, promising though they are, also present a learning curve. Here are steps to take to harness them:

- Learn and Adapt: Commit to understanding and mastering AI-driven IDEs. This may range from going through online tutorials to enrolling in specialized training programs.

- Connect with Community: Joining IDE user forums and communities can provide additional learning resources, and insight into practical utilization techniques. It also opens up opportunities for collaboration and networking.

- Apply Incrementally: Start by integrating AI-driven IDEs into your current projects incrementally. As you grow comfortable, start taking full advantage of their automation and learning features.

In conclusion, embracing AI-driven IDEs could provide enormous benefits from enhanced efficiency to improved learning and collaboration opportunities. Be proactive in learning and adapting to this development to stay relevant in a rapidly evolving technological landscape.

Read the original article

by jsendak | Nov 26, 2024 | DS Articles

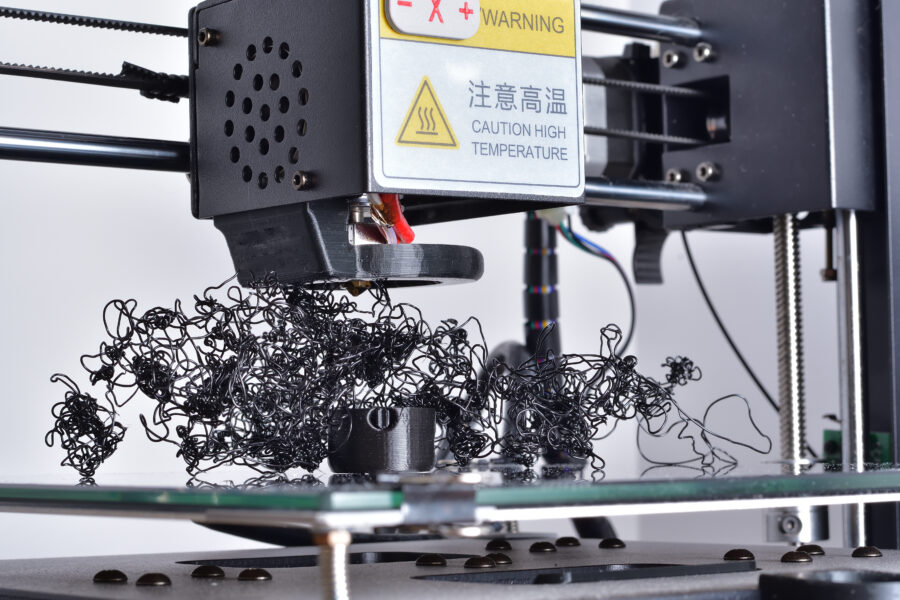

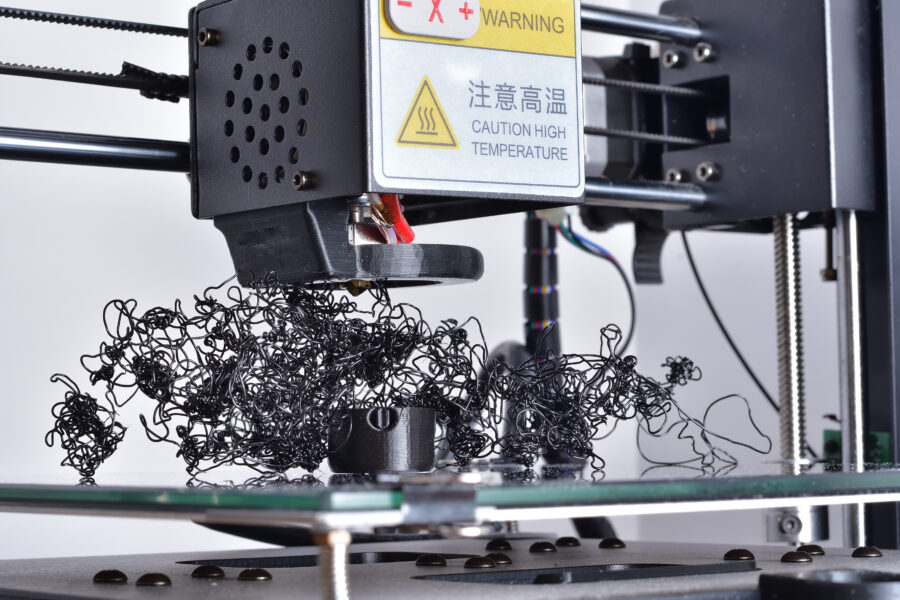

In the last few years, consumer-level 3D printing hardware has been gaining popularity in the hobby space, and it’s easy to see why. The ease of accessible or free CAD software, in conjunction with the culture of hosting custom-designed models for free, has given the niche tool a broad appeal. The gold standard for entry-level… Read More »AI visual analysis in 3D printing hardware

Future Projections for 3D Printing Hardware and AI Visual Analysis

The burgeoning field of 3D printing has seen rapid developments within the hobby space in the last couple of years. The combination of easy accessibility or cost-effectiveness offered by Computer Aided Design (CAD) software and the practice of freely sharing custom-designed models has given this unique tool a wide appeal. In addition, AI visual analysis, being an emergent technology, has a lot to offer in this domain, thereby setting a new gold standard for entry-level 3D hardware.

Three Dimensional Printing: A New Phenomenon

The popularity of 3D printing in recent years is attributable to the user-friendly tools that enable the creation of personalized items. The charm of generating a physical artifact from a digital model has appealed to hobbyists and enthusiasts alike. Cognition of its potential for widespread personal and commercial use will only fuel its growth.

Artificial Intelligence in Visual Analysis

AI visual analysis can significantly improve the 3D printing process by facilitating dimensional accuracy and detailing. It could also hasten the prototyping phase and reduce product development time.

Long-term Implications and Future Developments

Advancement in 3D Technology & AI

As the technology continues to mature, we can expect a greater blend of 3D printing with AI. AI could automate the design process, perceiving and correcting flaws that would otherwise go unnoticed. Improved AI visual analysis could lead to more detailed and intricate designs, thereby extending the capabilities of 3D printers.

Increased Accessibility and Versatility

As more users adopt 3D printing and develop their unique models, CAD software will become even more widespread and versatile. This could make it more accessible to the average consumer, paving the way for a broader range of applications.

Actionable Advice

- Invest in Good Quality Printing Hardware: Despite the cost, good-quality 3D printing hardware guarantees better results and longevity.

- Learn and Understand CAD Software: To enjoy the benefits of 3D printing, individuals and businesses should learn how to use CAD software efficiently.

- Stay Up-to-date: Keep abreast of advancements in AI visual analysis, as it could considerably improve the quality of your 3D printed prototypes or models.

- Share Your Work: Sharing your 3D models will contribute to the community and encourage the proliferation of 3D printing technology.

With continued advancements in AI and CAD software, the scope and popularity of 3D printing are bound to skyrocket. As the technology attains further refinement and precision, its applications in diverse fields like architecture, medicine, and art will be fascinating to watch.

Read the original article

by jsendak | Nov 25, 2024 | DS Articles

[This article was first published on

Getting Genetics Done, and kindly contributed to

R-bloggers]. (You can report issue about the content on this page

here)

Want to share your content on R-bloggers? click here if you have a blog, or here if you don’t.

Reposted from https://blog.stephenturner.us/p/tech-im-thankful-for-2024

Data science and bioinformatics tech I’m thankful for in 2024: tidyverse, RStudio, Positron, Bluesky, blogs, Quarto, bioRxiv, LLMs for code, Ollama, Seqera Containers, StackOverflow, …

It’s a short week here in the US. As I reflect on the tools that shape modern bioinformatics and data science it’s striking to see how far we’ve come in the 20 years I’ve been in this field. Today’s ecosystem is rich with tools that make our work faster, better, enjoyable, and increasingly accessible. In this post I share some of the technology I’m particularly grateful for — from established workhorses that have transformed how we code and analyze data, to emerging platforms that are reshaping scientific communication and development workflows.

-

The tidyverse: R packages for data science. Needs no further introduction.

-

devtools + usethis + testthat: I use each of these tools at least weekly for R package development.

-

Rstudio, Positron, and VS Code: Most of the time I’m using a combination of VS Code and RStudio. My first experience with Positron was a positive one, and as several of my dealbreaker functionalities are brought into Positron, I imagine next year it’ll be my primary IDE for all aspects of data science.

-

Bluesky. This place feels like the “old” science Twitter of the late 00s / early teens. I wrote about Bluesky for Science to get you started. It’s so great to have a place for civil and good-faith discussions of new developments in science, to be able to create my own algorithmic feeds, and to create thermonuclear block/mute lists.

-

Slack communities. There are many special interest groups and communities with Slack/Discord communities open to anyone. A few that I’m a part of:

-

Blogs. Good old 2000s-era long form blogs. I blogged regularly at Getting Genetics Done for nearly a decade. Over time, Twitter made me a lazy blogger. My posts got shorter, fewer, and further between. I’m pretty sure the same thing happened to many of the blogs I followed back then. In an age where so much content on the internet is GenAI slop I’ve come to really appreciate long-form treatment of complex topics and deep dives into technical content. A few blogs I read regularly:

-

Quarto: The next generation of RMarkdown. I’ve used this to write papers, create reports, to create entire books (blog post coming soon on this one), interactive dashboards, and much more.

-

Zotero: I’ve been using Zotero for over 15 years, ever since Zotero was only a Firefox browser extension. It’s the only reference manager I’m aware of that integrates with Word, Google Docs, and RStudio for citation management and bibliography generation. The PDF reader on the iPad has everything I want and nothing I don’t — I can highlight and mark up a PDF and have those annotations sync across all my devices. Zotero is free, open-source, and with lots of plugins that extend its functionality, like this one for connecting with Inciteful.

-

bioRxiv: bioRxiv launched about 10 years ago and every year gains more traction in the life sciences community. And attitudes around preprints today are so much different than they were in 2014 (“but what if I get scooped?”).

-

LLMs for code: I use a combination of GitHub Copilot, GPT 4o, Claude 3.5 Sonnet, and several local LLMs to aid in my development these days.

-

Seqera Containers: I’m not a Seqera customer, and I don’t (yet) use Seqera Containers URIs in my production code, but this is an amazing resource that I use routinely for creating Docker images with multiple tools I want. I just search for and add tools, and I get back a Dockerfile and a conda.yml file I can use to build my own image.

-

Ollama: I use Ollama to interact with local open-source LLMs on my Macbook Pro, for instances where privacy and security is of utmost concern.

-

StackOverflow: SO used to live in my bookmarks bar in my browser. I estimate my SO usage is down 90% from what it was in 2022. However, none of the LLMs for code would be what they are today without the millions of questions asked and answered on SO over the years. I’m not sure what this means for the future of SO and LLMs that rely on good training data.

Continue reading: Tech I’m thankful for (repost)

Key Insights from “Tech I’m Thankful for”

The original article by Stephen Turner presents an engaging reflection on the remarkable journey of bioinformatics and data science spanning 20 years. Acknowledging that technology has accelerated the efficiency, quality, and accessibility of work in these fields, Turner outlines novel platforms and established tools that have transformed coding and data analysis.

Tech Tools and Platforms Changing the Landscape

The assemblage of software by Turner encompasses a broad spectrum. From foundational tools like R packages for data science (‘tidyverse’), RStudio, and Visual Studio Code used for data manipulation and visualization to innovative platforms such as Ollama and Seqera for interacting with open-source local language models (LLMs) and creating Docker images respectively, Turner appreciates their convenience and utility. He appreciates Bluesky for its constructive scientific discussions and StackOverflow’s contribution towards LLM training data.

Impacts on Communication and Development Workflows

Turner perceives transformations not just in data handling, but also in walks like scientific communication and development workflows. Platforms like bioRxiv have updated the stance on preprints within the life science community. Tools like Zotero are deemed indispensable for citation management and bibliography generation. Furthermore, he reiterates his fondness for long-form blogging and enhanced engagement avenues through Slack communities.

Possible Future Developments

The analysis reveals that the future of bioinformatics and data science largely veers toward automation with increased reliance on LLMs for streamlining the development process. Embracing the open-source paradigm is apparent for the augmentation of privacy and security. Furthermore, the expanding traction of preprints might reshape scientific communication, leading to transparent, faster, and open dissemination of research.

Actionable Advice

- Significant benefits can be derived by staying updated with emerging platforms and tools as they add value to professional growth by making work more efficient and high-quality.

- Engaging in online communities, reading long-form blogs, retrospecting and learning from past work can foster knowledge and skill development at personal and community levels.

- Understanding and leveraging LLMs like GitHub Copilot and GPT 4o can help in accelerating code development and maintaining good programming practices.

- Embrace tools like Zotero that assist in efficient management of literature and capitalize on preprint servers like bioRxiv for disseminating research findings rapidly and openly.

Read the original article

by jsendak | Nov 25, 2024 | DS Articles

Here’s what it takes to become a successful data scientist in 2024.

An Analysis of How to Become a Successful Data Scientist in 2024 and Beyond

In the age of information, there has been a surge in the number of professionals working in data science. Therefore, keeping up with developments and future demands in this line of work is crucial for long-term success and career development. This article discusses the long-term implications of becoming a successful data scientist, future predictions, and provides key points to assist professionals in this field.

Long-term Implications

In the long-term, mastering the blend of technological expertise and sophisticated business understanding will be pivotal for success in the field of data science. As data processing and analysis become more complex, those who expand their knowledge and skill sets accordingly will be poised to prosper. That being said, mastering specific software will be less important than understanding how to apply the knowledge to solve real-world problems and impacting business growth positively.

Predictions for the Future

Current trends suggest the importance of data scientists will not wane anytime soon. On the contrary, as companies across all sectors increasingly rely on data analyses to make informed business decisions, the demand for skilled data professionals is projected to rise. Other predictions include a shift towards automated data analysis, the rise in AI and Machine learning, and the increase in the role of big data.

Actionable Advice for Aspiring and Existing Data Scientists

- Updating Skills: Adapting to the latest data analysis tools and techniques is essential. A career in data science requires continual education and updating of skills.

- Fostering Interdisciplinary Knowledge: With the increasing integration of data science and various business aspects, knowledge expansion beyond the boundaries of this field is a plus. For instance, understanding business principles will help in applying data insights to enhance business performance.

- Embracing Change: Given the fast-paced nature of the technology world, willingness to learn and adapt to new tools, algorithms, and methods is a must.

- Implementing AI and Machine Learning: Acquiring proficiency in AI and ML concepts could provide a consequential edge over peers in the contemporary job market.

- Being Versatile: Flexibility and versatility are important personal traits that will increase your chances of success in this dynamic field.

In conclusion, the concept isn’t just about becoming a successful data scientist in 2024 but maintaining that relevance and success in the years to come. This demands a commitment to continuous learning and skill adaptation, as well as a broad perspective on how data is integrated into business strategies.

Read the original article

by jsendak | Nov 24, 2024 | DS Articles

If there is one development at the moment which I full heartedly enjoy reading about it’s that the remains of what was once called Twitter is seeing a large E𝕏odus.

Since a certain billionaire has taken over that platform, it has continuously become worse and I was hoping that politcians, media outlets and my fellow social scientists would come to Bluesky instead, which is apparently exactly what is happening now.

So after a lot of disappointment with world events this year, my wish that Bluesky would become Twitter’s heir, seems to come true.

The reasons I like Bluesky so much are that it connects me with a peer group that is spread around the world, like Twitter once did, but that it is built on open source infrastructure, which not only makes it billionaire proof, but also incredibly easy to tap into the data.

Overall it is just a place of joy right now and thanks to how serious the developers took community moderation, I’m hopeful that it will stay this way.

However, that led to a problem this week which can only be described as ‘incredibly first world’.

I was getting too many notifications about new followers!

So many that it became impossible to go through all of them and check whom to follow back.

My approach to solving the problem?

Using R and the atrrr package I created with friends ealiers this year.

Who follows me, but I’m not following back?

I start by looking at who follows me, and whom I already follow back:

library(atrrr)

library(tidyverse)

my_followers <- get_followers("jbgruber.bsky.social", limit = Inf) |>

# remove columns containing more complex data

select(-ends_with("_data"))

my_follows <- get_follows("jbgruber.bsky.social", limit = Inf) |>

select(-ends_with("_data"))

not_yet_follows <- my_followers |>

filter(!actor_handle %in% my_follows$actor_handle)

Now not_yet_follows contains 372 people!

More than I thought.

My assumption is that they are interested in similar topics and it would probably enrich my feed if I followed a chunk of them back.

But how to decide?

I came up with three criteria:

- who is already followed by a large chunk of my follows

- who has #commsky, #polsky or #rstats in their description

- who has a big account, which I defined at the moment as 1,000 followers+

Number 1 and 3 are made under the assumption that popular accounts are popular for a reason and I’m relying on the wisdom of the crowd.

Who is followed by the people I follow?

To answer this, we need to get quite a bit of data.

Specifically, I loop through all accounts that I follow and get the follows from them:

follows_of_follows <- my_follows |>

pull(actor_handle) |>

# iterate over follows getting their follows

map(function(handle) {

get_follows(handle, limit = Inf, verbose = FALSE) |>

mutate(from = handle)

}, .progress = interactive()) |>

bind_rows() |>

# not sure what this means

filter(actor_handle != "handle.invalid")

This data is huge, with over 450,000 accounts.

So who in the not_yet_follows list shows up there most often?

follows_of_follows_count <- follows_of_follows |>

count(actor_handle, name = "n_following", sort = TRUE)

follows_of_follows_count

## # A tibble: 160,440 × 2

## actor_handle n_following

## <chr> <int>

## 1 jbgruber.bsky.social 400

## 2 claesdevreese.bsky.social 352

## 3 rossdahlke.bsky.social 292

## 4 alessandronai.bsky.social 285

## 5 favstats.eu 263

## 6 feloe.bsky.social 263

## 7 jamoeberl.bsky.social 246

## 8 brendannyhan.bsky.social 226

## 9 fgilardi.bsky.social 225

## 10 dfreelon.bsky.social 224

## # ℹ 160,430 more rows

Unsurprisingly, I’m on top of this very specific list since this is a network around my own account.

But let’s see who among my not_yet_follows list is popular here:

popular_among_follows <- not_yet_follows |>

left_join(follows_of_follows_count, by = "actor_handle") |>

filter(n_following > 30)

I put the people who have more than 30 n_following here, which is an arbitry number I picked, and ended up with 76 people I should look into.

Who matches my interest in their description?

Specifically, I look for a couple of key hashtags: #commsky, #polsky or #rstats in their description.

These are the words I look for when checking out someone’s bio and it is very likely I want to follow them then.

Looking for the keywords is pretty simple, since we already have the data:

probably_interesting_content <- not_yet_follows |>

filter(!is.na(actor_description)) |>

filter(str_detect(actor_description, regex("#commsky|#polsky|#rstats",

ignore_case = TRUE)))

Only 20 accounts fit this filter.

Maybe I could find better keywords?

But this is just a demo of what you could do, so let’s move on.

Who are the big accounts trying to connect?

We can look up the user info to see how many followers they have.

popular_not_yet_follows <- not_yet_follows |>

mutate(followers_count = get_user_info(actor_handle)$followers_count) |>

filter(followers_count > 1000)

Again the 1,000 follower number is arbitrary, but when I look at an account and see four figure follower counts, I still think it’s a lot.

This gave me 80 accounts.

So what could I do now?

Two ways to approach it:

- let’s just follow them all if they fit these criteria:

lets_follow <- bind_rows(

popular_among_follows,

probably_interesting_content,

popular_not_yet_follows

) |>

distinct(actor_handle) |>

pull(actor_handle)

follow(lets_follow)

- More realistically though, I still want to have a look at the 136 accounts before following them.

This can be done relatively conveniently by opening the user profiles in my browser.

I can do that with:

walk(

paste0("https://bsky.app/profile/", lets_follow),

browseURL

)

How else can I find followers?

What you can also do with the data is to simply check follows_of_follows_count which of the accounts that are popular among your friends you don’t yet follow – without the condition that they are following you.

popular_among_follows2 <- follows_of_follows_count |>

filter(!actor_handle %in% my_follows$actor_handle) |>

filter(n_following > 30)

This gives me another 60 accounts to look through.

Of course the best way to search for intersting accounts when you are new to the platform is to look for starter packs.

The website Bluesky Directory has these ordered by topics and let’s you search through it.

How can I learn more about atrrr?

We collected a couple of tutorials on the package’s website: https://jbgruber.github.io/atrrr/

If there is something you would like to have explained (better) or you went through the docs and found an interesting endpoint, head over to GitHub and create and issue.

We are very open for ideas that make the package better!

Continue reading: So many new people on Bluesky! Who should I follow?

Examining the Potential Effects and Implications of the Transition from Twitter to Bluesky

As the remains of the once-popular social media platform, Twitter, experience a present E𝕏odus, a parallel move towards the open-source platform, Bluesky, is apparent. This shift is not only noticeably observed in the actions of social scientists, media outlets, and politicians, but also, supported by a large number of users who might prioritize the open-source infrastructure of Bluesky over Twitter.

A Better Social Media Experience

Bluesky is rising in popularity and preference as it offers an experience quite close to Twitter’s golden days – efficiently connecting users with a peer group scattered around the globe, with an emphasis on ease of data access. Such ease of data access is attributed to Bluesky’s underlying open-source architecture, which manages to keep the platform immune from the influences of billionaires, simultaneously promoting community moderation.

Although the increasing engagement has recently led to situations where users might feel overwhelmed by the notification count from new followers, it still reflects the platform’s growing popularity.

Edging Towards Personalized Experience

A solution proposed to efficiently manage this problem is crafted around using R, a programming language, and a package called ‘atrrr’ to create filters based on personalized criteria. One of the algorithms designed using ‘atrrr’ first determines who follows a user without being followed back. The output list is then filtered based on popularity among fellow followers, content relevance or specific keywords in bio, and the amount of followers they have. These filters help list potential followers that would ideally enrich the user’s feed.

Actionable Advice Based on the Transition Implications

Evidently, the advent of Bluesky seems to offer a promising alternative for Twitter users and is seen as a refreshing development in the sphere of social media. However, the issue of efficiently managing a growing number of followers is a challenge that must be addressed. The long-term implications of this transition may include some of the following:

- The continuous exodus from Twitter to Bluesky could signify a broader shift in priority towards open-source platforms. Platforms that can guard against negative billionaire influence and offers straightforward data access could soon be the norm.

- As these platforms grow, the need for efficient tools that can manage increasing engagement becomes absolutely crucial. This means there could be a surge in demand for social data analysis and manipulation packages/tools such as ‘atrrr’.

- As users become more selective about who they follow, more personalized algorithms will be needed to monitor content relevance. This signifies an increased emphasis on AI and data science expertise in social media planning and development.

Advice for Bluesky Users

Those feeling overwhelmed by follower notifications on Bluesky might consider using ‘atrrr’ to help manage the influx. Notably, ‘atrrr’ allows users to filter potential followers they might want to follow back based on their customized criteria, significantly enhancing their social media experience.

For Bluesky newbies or individuals seeking to expand their network, the website Bluesky Directory offers starter packs ordered by topics to help find interesting accounts. It could be a good starting point to navigate the platform and establish a strong presence.

For Learners and Developers

Educational resources and tutorials on using ‘atrrr’ can be found on the package’s website. To improve and build upon the current version of ‘atrrr’, the creators warmly welcome suggestions, issues, or ideas that could enhance the package. This is an opportunity for positive collaboration and an open invitation for individuals wanting to contribute to a relevant and influential project.

A Conclusion on the Transition

In conclusion, the transition from Twitter to Bluesky seems to be a reflection of user’s desire for a better social media experience. Developers and social media strategists can build upon this shift, focusing on creating tools and algorithms that help manage growing engagement and deliver personalized, enriching content. Bluesky seems to have started its journey on the right note. The successful management of large-scale user migration and active participation of its users in development might write the success story for Bluesky, making it an ideal successor to Twitter.

Read the original article

by jsendak | Nov 24, 2024 | DS Articles

Learn R from top institutions like Harvard, Stanford, and Codecademy.

Overview

Learning R programming language from premier institutions such as Harvard, Stanford, and digital platforms like Codecademy has become prevalent. The popularity and significance of this data science language is constantly increasing due to its immense potential in fields such as data analysis, data visualization, and machine learning.

Long-Term Implications and Potential Developments

In the future, the demand for R programming is expected to skyrocket. This is because businesses of all types and sizes are recognizing the power of data and the essential role that data analysis plays in making informed decisions. Harnessing R’s power for advanced data science, statistical analysis and predictive modeling can help businesses meet their objectives more efficiently and compete in the digital data-driven era.

Also, the push towards artificial intelligence and machine learning, areas where R shines, combined with its open-source nature, is only going to enhance the value of this language. Global institutions like Harvard or Stanford providing courses in R signals the growing influence and importance of this language.

Online Learning Platforms

Digital education platforms like Codecademy are making it easier for individuals worldwide to learn this language. As remote work and online learning continue to gain traction, we expect a surge in online courses that teach R. It is entirely possible that more such reputable platforms might start offering comprehensive courses in R, making it accessible to a wider audience.

Actionable Advice

If you’re considering learning R, you’re on the right track. It’s a powerful tool that will continue to be in demand. Here’s what you could do:

- Enroll in a course: Choose an online course from any of the aforementioned institutions or from platforms like Codecademy, based on your needs and schedule.

- Practice: Consistently practice what you learn. Like any other programming language, proficiency in R comes from experience and practical application.

- Dive Deep Enough: Ensure you understand not just the basics but also the advanced features. Machine Learning and Data Science are complex fields, and they require a deep understanding of the tools used.

- Stay Updated: Given the open-source nature of R, it regularly gets updated with new features. Stay updated with these changes for better utilization.

Learning R is an investment in your future. Whether you are a student, a professional, or a data enthusiast, understanding and being able to work with R will enhance your skillset and make you highly attractive to potential employers.

Read the original article

︎

︎