by jsendak | Jan 11, 2024 | DS Articles

[This article was first published on R – Xi'an's Og, and kindly contributed to R-bloggers].

Continue reading: max spacing between mass shootings [data graphics]

Insights and Implications

R-bloggers is a platform that provides daily R news, tutorials for learning R, and other related content. It features articles from authors who share their knowledge of R and data science, and readers can join the discussion by leaving comments on the authors’ blogs. The reference to an article titled ‘max spacing between mass shootings [data graphics]’ suggests an interest in data analysis of social issues such as mass shootings.

These observations have implications for both future developments and long-term growth. R-bloggers has the potential to become a hub for data scientists interested in R, a programming language that is particularly strong at complex data analysis. Topics could range from social issues such as gun violence, as hinted at by ‘max spacing between mass shootings’, to purely mathematical or scientific problems.

Possible Future Developments

The reference to a specific analysis of mass shooting data hints at the possibility of more specialized content on R-bloggers. By featuring more in-depth analyses of pressing societal issues, the blog could attract a broader audience beyond data experts. The platform could also serve as a source of data-driven insights for policymakers, activists, and other stakeholders.

Actionable Advice

- Expand Content Selection: Diversifying content to include analyses of timely social issues could attract a larger audience. It might also establish R-bloggers as a trusted source for people who want to understand complex issues through a data-driven lens.

- Promote User Engagement: Encourage more engagement by providing clear and simple methods for readers to leave comments or ask questions. This could generate valuable discussions and increase user retention.

- Collaboration and Partnerships: Consider collaborating with influencers or leading experts in data science, machine learning, and other relevant fields. Invite guest posts to diversify content and attract the guest authors’ followers to the site.

- Job Posting Section: Expanding the job posting section might help users looking to either hire R professionals or find R-related work, reinforcing the platform’s role in the R community.

Read the original article

by jsendak | Jan 11, 2024 | DS Articles

Enroll in the free OSSU Computer Science degree program and launch your career in tech today. Learn through high-quality courses taught by professors from leading universities like MIT, Harvard, and Princeton.

The Future of the OSSU Computer Science Degree Program

The Open Source Society University (OSSU) Computer Science degree program is a free, open-source online learning program that aims to offer quality educational materials from prestigious institutions like MIT, Harvard, and Princeton. It gives students worldwide the opportunity to gain valuable knowledge and skills from reputable universities at no cost.

Long-term Implications

The OSSU Computer Science degree program embodies the potential to revolutionize the global educational landscape. Traditional geographical and financial barriers that often limit access to high-quality education are being dismantled, giving rise to a more inclusive educational system. This program could lead to increased global literacy rates and generally improve living standards.

Tech companies are likely to see a significant increase in the number of qualified applicants as well, which would bolster technological advancements and foster a more competitive market space. This could eventually result in the creation of more high-value jobs and economic growth.

Possible Future Developments

Given its current trajectory and considering technological advancements, there are potential developments that could further enhance the OSSU program:

- An improved user interface to foster better online learning experiences

- More partnerships with renowned universities and professors around the globe

- Creation of similar programs for other fields of study

- Development of credit-transfer partnerships with tertiary institutions

Actionable Advice

Students interested in a career in tech or seeking to enrich their knowledge in computer science are encouraged to:

- Enroll for Free: The OSSU Computer Science degree program is open to everyone, regardless of financial means. It’s a great opportunity to learn from top-rated professors from esteemed institutions.

- Stay Motivated: Online learning can be challenging. Students must maintain a high level of discipline and motivation to excel in their studies.

- Stay Updated: With the constant technological advancements and likely improvements to the OSSU program, students should ensure they stay updated on recent changes, especially new partnerships and course offerings.

In conclusion, the OSSU Computer Science degree program is not just a remarkable innovation for the field of computer science education, but also serves as a beacon of hope for the democratization of education around the globe. It’s a testament to what’s possible with the power of technology and open-source educational resources.

Read the original article

by jsendak | Jan 11, 2024 | DS Articles

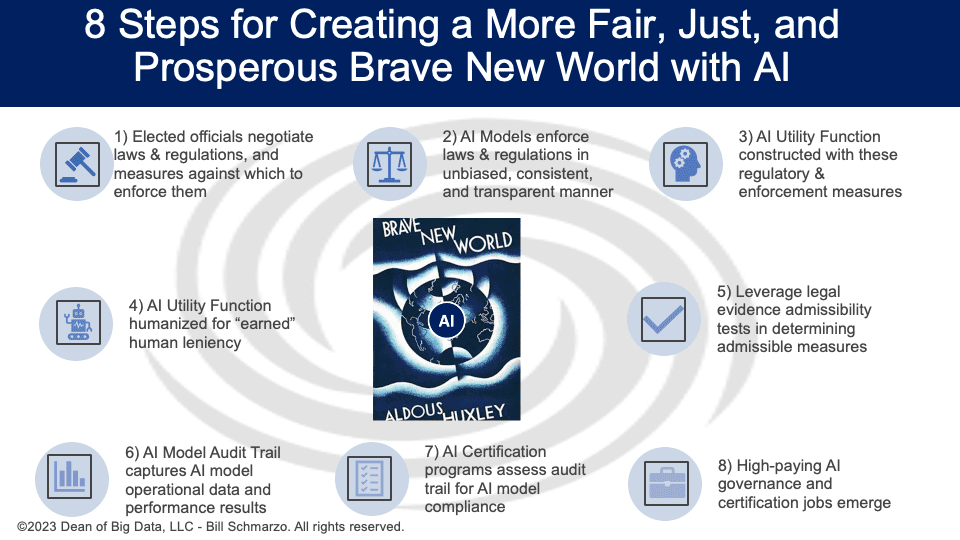

As I completed this blog series, the European Union (EU) announced its AI Regulation Law. The European Union’s AI Regulation Act seeks to ensure AI’s ethical and safe deployment in the EU. Coming on the heels of the White House’s “Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence,” we… Read more: Creating a More Fair, Just, and Prosperous Brave New World with AI Summary

The European Union’s AI Regulation Law

The European Union recently announced its new AI Regulation Law, an important step in defining standards and providing guidelines for the use of artificial intelligence (AI). The law focuses on the ethical and safe application of AI, a groundbreaking move given the rapid growth of unregulated and potentially harmful AI use.

Implications of the EU’s AI Regulation Law

The long-term implications of the EU’s AI regulation are far-reaching. It marks a global step forward in both technology governance and digital ethics, affecting sectors such as healthcare, transport, and online services. Because many tech companies operate globally, the law may effectively extend its standards beyond EU borders.

Promotion of Ethical and Safe AI Use

One of the foremost implications is the promotion of ethical and safe AI use. The regulation aims to reduce potential harm by enforcing stringent measures against harmful or biased AI functions.

Protection and Empowerment of Individuals

Another implication of this law is the protection and empowerment of individuals. It aims to give people more control over how their personal data are used, curbing opaque algorithmic decision-making and promoting transparency.

Possible Future Developments

The introduction of this law may trigger a global wave of new regulations beyond Europe, as countries around the world increasingly look into safer and more ethical AI practices. Robust AI governance may become a leading trend that supports global economies by enabling rapid yet controlled technological advancement.

Enhanced Global Collaboration

In the future, we may see greater global collaboration on AI ethics and governance, particularly in healthcare, where errors can be life-threatening.

Evolution of AI Ethics in Academia

Academia might see an evolution in its course content and research agendas, with AI ethics becoming an integral part of technology and computer science curricula and research pursuits.

Actionable Advice

- Embrace Transparency: Companies should be open about their AI algorithms and models to build trust with stakeholders, particularly consumers.

- Ethical AI Strategies: Organizations need to develop ethical AI strategies framed within the set guidelines, ensuring AI systems don’t inadvertently harm users or marginalize certain groups.

- Collaborate with Governments: Tech organizations should actively collaborate with governments to shape future laws pertaining to AI usage.

- Incorporate AI Ethics in Academia: Academic institutions must include AI ethics, safety, and legal concerns in their programs to better prepare future tech leaders.

In conclusion, the EU’s AI Regulation Law has significant implications for the global technological landscape. It is a critical step toward safer, more ethical, and more transparent AI deployment. This catalytic move may well prompt other regions to develop similar regulations, ultimately leading to a safer and smarter global tech economy.

Read the original article

by jsendak | Jan 10, 2024 | DS Articles

In his industry survey and book “The AI Playbook,” Eric Siegel highlights the chronic under-deployment of ML projects: only 22% of data scientists say their revolutionary initiatives are usually deployed, and he identifies a lack of stakeholder visibility and detailed planning as key issues.

Maximizing the Effectiveness of Machine Learning Deployment

Eric Siegel’s survey and accompanying book, “The AI Playbook,” highlight a serious issue in the data science industry: many machine learning (ML) projects are never deployed. Only about 22% of surveyed data scientists reported that their revolutionary AI initiatives are typically deployed.

Key Hurdles in Deployability

- Lack of Stakeholder Visibility: The insights produced by ML models often stay within the data science team, failing to reach the stakeholders who need them for decision-making.

- Inadequate Detailed Planning: ML projects often lack sufficient planning, which is necessary for successful execution and deployment.

Long-term Implications and Future Developments

Addressing this under-deployment issue is critical for an organization’s AI maturity, which could have implications for competitive positioning in the long run. As businesses become more dependent on AI and machine learning to drive decisions and strategies, those capable of deploying AI initiatives efficiently will emerge as industry leaders.

In the future, we may witness companies investing more in establishing robust deployment strategies for their ML projects. This would not just involve incorporating technical aspects like data integrity and model accuracy but also business aspects like clearer stakeholder communication and comprehensive planning.

Actionable Advice

- Improve Stakeholder Visibility: Ensure your ML project findings are presented using easily understandable insights and recommendations, making it simpler for stakeholders to comprehend and act upon. Regular stakeholder meetings, briefings, and accessible dashboards are some ways to do this.

- Invest in Detailed Planning: Create a comprehensive project plan, including necessary resources, responsibilities, timelines, contingency measures, and expected outcomes, thereby improving the chances of successful deployment.

- Integrate Deployment Strategy Early On: Ideally, the deployment strategy should not be an afterthought; instead, it should be integrated with the overall project design from the inception stage. By considering the deployment phase early on and throughout the project life cycle, the overall efficiency and effectiveness of the ML project can be significantly improved.

“The failure of ML projects is less about the technology itself and more about how it’s deployed. Therefore, shifting focus from developing to deploying is a game-changer.”

Read the original article

by jsendak | Jan 10, 2024 | DS Articles

“Data Monetization! Data Monetization! Data Monetization!” Note: This blog was originally posted on December 12, 2017. But given all the recent excitement, I thought it might be time to revisit this blog. The original blog post was corrupted, so I re-posted the same content. It’s the new mantra of many organizations. But what does “data… Read more: Data Monetization? Cue the Chief Data Monetization Officer

Data Monetization and the Role of Chief Data Monetization Officer

In this era of digitization, many organizations are chanting a new mantra: data monetization. The term has become a popular buzzword, and its effects are felt across many industries. Data monetization refers to transforming recorded digital information into measurable economic value. Understanding its dynamics and potential is becoming imperative for businesses that want to stay ahead in a competitive market.

Long-Term Implications and Future Developments

As data monetization becomes more prominent, some lasting implications and new trends are likely to emerge in response to evolving market needs. Businesses that succeed in monetizing their data could improve their financial performance, expand their customer base, and gain a competitive advantage. However, they also need to handle challenges such as stringent data privacy regulations and the protection of consumer data rights.

In addition, advancements in innovative technologies like artificial intelligence (AI) and machine learning (ML) could potentially propel the application of data monetization by providing powerful parsing and prediction capabilities. With the advent of advanced analytics, organizations can deepen their insights into complex data sets, understand consumer behavior, and identify new opportunities for revenue generation.

Introduction of the Chief Data Monetization Officer

A noteworthy development is a new leadership role within organizations: the Chief Data Monetization Officer. This role is likely to gain more traction in the future. Its key responsibility will not be limited to managing and maintaining data infrastructure; it will extend to making vital decisions about how to increase the company’s economic value by leveraging its digital data assets.

Actionable Insights

- Prepare a data monetization strategy: Organizations should map out a clear data monetization strategy which incorporates their overall business goals. This strategy should determine the set of data to be monetized, identify potential clients for this data, and align with legal and ethical computing guidelines.

- Invest in the right technologies: To implement data monetization, investment in modern technologies such as AI, ML, and advanced analytics is essential. This increases the ability to derive meaningful insight from complex data sets.

- Appoint a Chief Data Monetization Officer: Designating a leader focused exclusively on data monetization could give an organization a significant competitive boost. This role can guide the development of strategies to extract maximum economic value from the company’s data assets.

- Maintain data privacy and security: With increased monetization comes the responsibility to ensure data privacy and security. Implementing robust cybersecurity measures and adhering to regulatory requirements should be integral parts of the data monetization process.

Read the original article

by jsendak | Jan 10, 2024 | DS Articles

Introduction

Welcome back, fellow data enthusiasts! Today, we embark on an exciting journey into the world of statistical distributions with a special focus on the latest addition to the TidyDensity package – the triangular distribution. Tightly packed and versatile, this distribution brings a unique flavor to your data simulations and analyses. In this blog post, we’ll delve into the functions provided, understand their arguments, and explore the wonders of the triangular distribution.

What’s So Special About Triangular Distributions?

- Flexibility in uncertainty: They model situations where you have a minimum, maximum, and most likely value, but the exact distribution between those points is unknown.

- Common in real-world scenarios: Project cost estimates, task completion times, expert opinions, and even natural phenomena often exhibit triangular patterns.

- Simple to understand and visualize: Their straightforward shape makes them accessible for interpretation and communication.

The triangular distribution is a continuous probability distribution with lower limit a, upper limit b, and mode c, where a < b and a ≤ c ≤ b. The distribution resembles a tent shape.

The probability density function of the triangular distribution is:

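$$
f(x) =
\begin{cases}
\dfrac{2(x - a)}{(b - a)(c - a)} & \text{for } a \le x \le c, \\[6pt]
\dfrac{2(b - x)}{(b - a)(b - c)} & \text{for } c < x \le b, \\[6pt]
0 & \text{otherwise.}
\end{cases}
$$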
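As a quick sanity check on the formula, here is a minimal base-R sketch of the density. `dtri()` is a hypothetical helper written for this example, not a TidyDensity function, and for simplicity it assumes a strict a < c < b so that neither branch divides by zero.

```r
# Hypothetical helper (not part of TidyDensity): triangular density from the
# piecewise formula. Assumes a < c < b; returns 0 outside [a, b].
dtri <- function(x, a, b, c) {
  stopifnot(a < c, c < b)
  out <- numeric(length(x))
  left  <- x >= a & x <= c   # rising edge of the tent
  right <- x > c & x <= b    # falling edge of the tent
  out[left]  <- 2 * (x[left] - a) / ((b - a) * (c - a))
  out[right] <- 2 * (b - x[right]) / ((b - a) * (b - c))
  out
}

# The peak height is 2 / (b - a), and the density should integrate to 1.
dtri(c(-0.5, 0.25, 0.5, 0.75, 1.5), a = 0, b = 1, c = 0.5)
# [1] 0 1 2 1 0
integrate(dtri, lower = 0, upper = 1, a = 0, b = 1, c = 0.5)
```

The extra arguments `a`, `b` and `c` are passed through `integrate()`'s `...` to `dtri()`, which is vectorized as `integrate()` requires.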

The key parameters of the triangular distribution are:

- a – the minimum value
- b – the maximum value
- c – the mode (most frequent value)

The triangular distribution is often used as a subjective description of a population for which there is only limited sample data. It is useful when a process has a natural minimum and maximum.
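To illustrate the project-estimation use case, here is a small base-R sketch with made-up numbers: a task with an optimistic duration of 2 days, a most likely duration of 4 days and a pessimistic duration of 9 days. `ptri()` is a hypothetical helper implementing the standard triangular CDF; it is not part of TidyDensity.

```r
# Hypothetical helper (not part of TidyDensity): triangular CDF P(X <= q)
# for minimum a, maximum b and mode c, with a < b and a <= c <= b.
ptri <- function(q, a, b, c) {
  stopifnot(a < b, a <= c, c <= b)
  ifelse(q <= a, 0,
    ifelse(q <= c, (q - a)^2 / ((b - a) * (c - a)),
      ifelse(q < b, 1 - (b - q)^2 / ((b - a) * (b - c)), 1)))
}

# Made-up three-point estimate for a task duration, in days.
optimistic  <- 2
most_likely <- 4
pessimistic <- 9

# Expected value of a triangular variable: (a + b + c) / 3.
expected_days <- (optimistic + pessimistic + most_likely) / 3

# Probability that the task is finished within 6 days.
p_within_6 <- ptri(6, a = optimistic, b = pessimistic, c = most_likely)

sprintf("expected: %.2f days, P(done within 6 days): %.3f", expected_days, p_within_6)
# [1] "expected: 5.00 days, P(done within 6 days): 0.743"
```

The expected duration of 5 days sits above the most likely value of 4 days because the pessimistic tail is longer than the optimistic one.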

Triangular Functions

TidyDensity’s Triangular Distribution Functions: Let’s start by introducing the main functions for the triangular distribution:

tidy_triangular(): This function generates a triangular distribution with a specified number of simulations, minimum, maximum, and mode values.

- .n: Specifies the number of x values for each simulation.

- .min: Sets the minimum value of the triangular distribution.

- .max: Determines the maximum value of the triangular distribution.

- .mode: Specifies the mode (peak) value of the triangular distribution.

- .num_sims: Controls the number of simulations to perform.

- .return_tibble: A logical value indicating whether to return the result as a tibble.

util_triangular_param_estimate(): This function estimates the parameters of a triangular distribution from a numeric vector.

- .x: Requires a numeric vector, with all values satisfying 0 <= x <= 1.

- .auto_gen_empirical: A boolean value (TRUE/FALSE) with a default set to TRUE. It automatically generates tidy_empirical() output for the .x parameter and utilizes tidy_combine_distributions().

util_triangular_stats_tbl(): This function creates a tidy data frame with statistics for a triangular distribution.

- .data: The data being passed from a tidy_ distribution function.

triangle_plot(): This function creates a ggplot2 object for a triangular distribution.

- .data: Tidy data from the tidy_triangular function.

- .interactive: A logical value indicating whether to return an interactive plot using plotly. Default is FALSE.

Using tidy_triangular for Simulations

Suppose you want to simulate a triangular distribution with 100 x values, a minimum of 0, a maximum of 1, and a mode at 0.5. You’d use the following code:

```r
library(TidyDensity)

# One simulation of 100 draws from Triangular(min = 0, max = 1, mode = 0.5)
triangular_data <- tidy_triangular(
  .n = 100,
  .min = 0,
  .max = 1,
  .mode = 0.5,
  .num_sims = 1,
  .return_tibble = TRUE
)

triangular_data
```

```
# A tibble: 100 × 7
   sim_number     x     y      dx      dy     p     q
   <fct>      <int> <dbl>   <dbl>   <dbl> <dbl> <dbl>
 1 1              1 0.853 -0.140  0.00158 0.957 0.853
 2 1              2 0.697 -0.128  0.00282 0.816 0.697
 3 1              3 0.656 -0.116  0.00484 0.764 0.656
 4 1              4 0.518 -0.103  0.00805 0.536 0.518
 5 1              5 0.635 -0.0909 0.0130  0.733 0.635
 6 1              6 0.838 -0.0786 0.0202  0.948 0.838
 7 1              7 0.645 -0.0662 0.0304  0.748 0.645
 8 1              8 0.482 -0.0539 0.0444  0.464 0.482
 9 1              9 0.467 -0.0416 0.0627  0.437 0.467
10 1             10 0.599 -0.0293 0.0859  0.678 0.599
# ℹ 90 more rows
```

This generates a tidy tibble with simulated data, ready for your analysis.
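tidy_triangular() takes care of the sampling. For readers curious about one standard way to draw triangular variates, here is a base-R sketch of inverse-transform sampling; `rtri()` is a hypothetical helper, and this is not a claim about how TidyDensity generates its `y` column.

```r
# Hypothetical helper (not part of TidyDensity): inverse-transform sampling
# from a triangular distribution with minimum a, maximum b and mode c.
rtri <- function(n, a, b, c) {
  stopifnot(a < b, a <= c, c <= b)
  u  <- runif(n)
  fc <- (c - a) / (b - a)  # CDF value at the mode
  ifelse(u < fc,
         a + sqrt(u * (b - a) * (c - a)),        # invert the rising part of the CDF
         b - sqrt((1 - u) * (b - a) * (b - c)))  # invert the falling part
}

set.seed(123)
y <- rtri(10000, a = 0, b = 1, c = 0.5)

range(y)  # all draws fall inside [0, 1]
mean(y)   # close to (a + b + c) / 3 = 0.5
var(y)    # close to 1/24 (about 0.0417) for this symmetric case
```

Each branch solves F(x) = u for x on one side of the mode, so uniform draws map directly to triangular draws without rejection.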

Estimating Parameters and Creating Stats Tables

Utilize the util_triangular_param_estimate function to estimate parameters and create tidy empirical data:

```r
# Estimate min, max and mode from the simulated y values
param_estimate <- util_triangular_param_estimate(.x = triangular_data$y)
t(param_estimate$parameter_tbl)
```

```
          [,1]
dist_type "Triangular"
samp_size "100"
min       "0.0572515"
max       "0.8822025"
mode      "0.8822025"
method    "Basic"
```
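The output labels its method "Basic" without showing the computation. Purely as an illustration, here is one simple hypothetical estimator in base R: use the sample minimum and maximum for a and b, then back out the mode from the identity E[X] = (a + b + c) / 3. `estimate_triangular()` is not TidyDensity's "Basic" method and is not guaranteed to match its output.

```r
# Hypothetical estimator for illustration only; not TidyDensity's "Basic" method.
estimate_triangular <- function(x) {
  stopifnot(is.numeric(x), length(x) >= 3)
  a_hat <- min(x)
  b_hat <- max(x)
  # Method-of-moments step: E[X] = (a + b + c) / 3, so c = 3 * mean(x) - a - b.
  c_hat <- 3 * mean(x) - a_hat - b_hat
  # Clamp so the estimated mode stays inside [a_hat, b_hat].
  c_hat <- min(max(c_hat, a_hat), b_hat)
  c(min = a_hat, max = b_hat, mode = c_hat)
}

x <- c(0.1, 0.3, 0.45, 0.5, 0.55, 0.7, 0.9)
estimate_triangular(x)  # mode comes out at about 0.5 for this symmetric sample
```

The sample minimum and maximum can never fall outside the true range, so this estimator tends to understate the spread for small samples; the clamp handles samples whose mean implies a mode outside the observed range.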

For statistics table creation:

```r
# Summary statistics for the simulated triangular data
stats_table <- util_triangular_stats_tbl(.data = triangular_data)
t(stats_table)
```

```
                  [,1]
tidy_function     "tidy_triangular"
function_call     "Triangular c(0, 1, 0.5)"
distribution      "Triangular"
distribution_type "continuous"
points            "100"
simulations       "1"
mean              "0.5"
median            "0.3535534"
mode              "1"
range_low         "0.0572515"
range_high        "0.8822025"
variance          "0.04166667"
skewness          "0"
kurtosis          "-0.6"
entropy           "-0.6931472"
computed_std_skew "-0.1870017"
computed_std_kurt "2.778385"
ci_lo             "0.08311609"
ci_hi             "0.8476985"
```
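To cross-check the table, here is a base-R sketch of the standard closed-form summaries of a triangular distribution; `triangular_theory()` is a hypothetical helper, not a TidyDensity function. For Triangular(0, 1, 0.5) it reproduces the mean (0.5), variance (about 0.0417), skewness (0) and excess kurtosis (-0.6) shown above. It gives 0.5 for both the median and the mode and about -0.193 for the differential entropy, which differ from the median, mode and entropy rows printed above, so those rows may be worth checking against your installed version of the package.

```r
# Hypothetical helper (not part of TidyDensity): closed-form summaries of a
# triangular distribution with minimum a, maximum b and mode c.
triangular_theory <- function(a, b, c) {
  stopifnot(a < b, a <= c, c <= b)
  q <- a^2 + b^2 + c^2 - a * b - a * c - b * c  # always > 0 when a < b
  med <- if (c >= (a + b) / 2) {
    a + sqrt((b - a) * (c - a) / 2)
  } else {
    b - sqrt((b - a) * (b - c) / 2)
  }
  list(
    mean            = (a + b + c) / 3,
    median          = med,
    mode            = c,
    variance        = q / 18,
    skewness        = sqrt(2) * (a + b - 2 * c) * (2 * a - b - c) * (a - 2 * b + c) /
                      (5 * q^(3 / 2)),
    excess_kurtosis = -3 / 5,
    entropy         = 0.5 + log((b - a) / 2)  # differential entropy, in nats
  )
}

str(triangular_theory(a = 0, b = 1, c = 0.5))
```

The median branch checks which side of the midpoint the mode lies on, because the half of the probability mass always falls on the longer side of the tent.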

Visualizing the Triangular Distribution: Now, let’s visualize the triangular distribution using the triangle_plot function:

```r
# Interactive plot (uses plotly)
triangle_plot(.data = triangular_data, .interactive = TRUE)

# Static ggplot2 plot
triangle_plot(.data = triangular_data, .interactive = FALSE)
```

This will generate an informative plot, and if you set .interactive to TRUE, you can explore the distribution interactively using plotly.

Conclusion

In this blog post, we’ve explored the powerful functionalities of the triangular distribution in TidyDensity. Whether you’re simulating data, estimating parameters, or creating insightful visualizations, these functions provide a robust toolkit for your statistical endeavors. Happy coding, and may your distributions always be tidy!

Continue reading: Exploring the Peaks: A Dive into the Triangular Distribution in TidyDensity

Long-term Implications and Future Developments of Triangular Distributions in Data Analytics

The rapidly evolving field of data analytics has seen many developments in recent years, one of which is the addition of triangular distribution functions to the ever-expanding TidyDensity package. With its flexibility, fit to real-world scenarios, and ease of understanding and visualization, the triangular distribution supports richer data simulation and analysis.

The Potential Significance of Triangular Distributions

The triangular distribution is a continuous probability distribution shaped like a ‘tent’. It is defined by three key parameters: ‘a’ (minimum value), ‘b’ (maximum value), and ‘c’ (mode, the most frequent value). It is often used as a subjective description of populations for which only limited sample data are available, and it is particularly useful when a process has a natural minimum and maximum.

Over time, the application of this form of distribution could potentially transform the way data is interpreted, structured, and communicated in various subjects ranging from project cost estimation through to analysis of natural phenomena.

Functions of Triangular Distributions

The TidyDensity package provides several functions for the triangular distribution. tidy_triangular() generates triangular data from a given number of simulations and the minimum, maximum, and mode values; util_triangular_param_estimate() estimates these parameters from a numeric vector; util_triangular_stats_tbl() builds a table of summary statistics; and triangle_plot() visualizes the result.

Potential Future Developments

As we look to the future, it is reasonable to anticipate that such functions will continue evolving to cater to the increasingly complex needs of data analysts. The ways in which these functions could evolve are manifold: they could be adapted to serve a wider range of scenario classifications, they may offer more nuanced simulations based on variable patterns found in a data set, and they might even include enhanced visualization tools for a more interactive data exploration experience.

Advice for Data Enthusiasts

The triangular distribution functions offer ample possibilities for data enthusiasts, especially those venturing into data analysis. Here is some actionable advice:

- Revise Probability Basics: Understanding these functions requires a fundamental understanding of the basics of probability. So ensure your probability fundamentals are strong.

- Dive Deep Into Triangular Distributions: Gain a comprehensive understanding of triangular distributions – their formation, calculation, and application scenarios.

- Exercise Patience: Learning to master these functions, as with any subject, requires time and patience. Make sure you invest both in understanding and practicing these distributions and their features.

- Stay Updated: Follow the latest developments in statistical distributions and data analytics, and regularly update your skills and knowledge pool.

Lastly, remember that both learning and applying these distributions should be done in a systematic fashion – built solidly on a foundation of basic probability fundamentals and then expanded with practice and testing of individual techniques.

Read the original article