by jsendak | May 20, 2025 | DS Articles

[This article was first published on ouR data generation, and kindly contributed to R-bloggers.]

A researcher recently approached me for advice on a cluster-randomized trial he is developing. He is interested in testing the effectiveness of two interventions and wondered whether a 2×2 factorial design might be the best approach.

As we discussed the interventions (I’ll call them (A) and (B)), it became clear that (A) was the primary focus. Intervention (B) might enhance the effectiveness of (A), but on its own, (B) was not expected to have much impact. (It’s also possible that (A) alone doesn’t work, but once (B) is in place, the combination may reap benefits.) Given this, it didn’t seem worthwhile to randomize clinics or providers to receive B alone. We agreed that a three-arm cluster-randomized trial—with (1) control, (2) (A) alone, and (3) (A + B)—would be a more efficient and relevant design.

A while ago, I wrote about a proposal to conduct a three-arm trial using a two-step randomization scheme. That design assumes that outcomes in the enhanced arm ((A + B)) are uncorrelated with those in the standalone arm (A) within the same cluster. For this project, that assumption didn’t seem plausible, so I recommended sticking with a standard cluster-level randomization.

The study has three goals:

- Assess the effectiveness of (A) versus control

- Compare (A + B) versus (A) alone

- If (A) alone is ineffective, compare (A + B) versus control

In other words, we want to make three pairwise comparisons. Initially, we were concerned about needing to adjust our tests for multiple comparisons. However, we decided to use a gatekeeping strategy that maintains the overall Type I error rate at 5% while allowing each test to be performed at \(\alpha = 0.05\).
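The post does not spell out the gating rule, so the following is only a sketch of one literal reading of the three goals: test (A) versus control first, then let that result decide which second comparison is made. The function name `gatekeep` and its arguments are hypothetical. Whether a rule like this keeps the overall Type I error rate at 5% is exactly the kind of question a simulation under the null would have to answer.

```r
# Hypothetical helper: route three pairwise p-values through an assumed hierarchy.
# Each test that is actually carried out uses the same alpha.
gatekeep <- function(p_A_vs_ctrl, p_AB_vs_A, p_AB_vs_ctrl, alpha = 0.05) {
  if (p_A_vs_ctrl < alpha) {
    # A is declared effective, so the second question is whether B adds to A
    list(A_effective = TRUE,
         second_test = "A+B vs A",
         second_reject = p_AB_vs_A < alpha)
  } else {
    # A alone is not shown to be effective, so compare A+B with control
    list(A_effective = FALSE,
         second_test = "A+B vs control",
         second_reject = p_AB_vs_ctrl < alpha)
  }
}

# Made-up p-values, for illustration only
res1 <- gatekeep(p_A_vs_ctrl = 0.03, p_AB_vs_A = 0.20, p_AB_vs_ctrl = 0.001)
res2 <- gatekeep(p_A_vs_ctrl = 0.40, p_AB_vs_A = 0.01, p_AB_vs_ctrl = 0.02)
```

Only two of the three comparisons are ever evaluated for a given data set under this reading.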

This post describes how I set up simulations to evaluate sample size requirements for the proposed trial. The primary outcome is a time-to-event measure: the time from an index physician visit to a follow-up visit, which the intervention aims to shorten. I first generated survival data based on estimates from the literature, then simulated the study design under various sample size assumptions. For each scenario, I generated multiple data sets and applied the gatekeeping hypothesis testing framework to estimate statistical power.

Preliminaries

Before getting started, here are the R packages used in this post. In addition, I’ve set a randomization seed so that if you try to replicate the approach taken here, our results should align.

library(simstudy)

library(data.table)

library(survival)

library(coxme)

library(broom)

set.seed(8271)

Generating time-to-event data

When simulating time-to-event outcomes, one of the first decisions is what the underlying survival curves should look like. I typically start by defining a curve for the control (baseline) condition, and then generate curves for the intervention arms relative to that baseline.

Getting the parameters that define the survival curve

We identified a comparable study that reported quintiles for the time-to-event outcome. Specifically, 20% of participants had a follow-up within 1.4 months, 40% by 4.7 months, 60% by 8.7 months, and 80% by just over 15 months. We used the survGetParams function from the simstudy package to estimate the Weibull distribution parameters—the intercept in the Weibull formula and the shape—that characterize this baseline survival curve.

q20 <- c(1.44, 4.68, 8.69, 15.32)

points <- list(c(q20[1], 0.80), c(q20[2], 0.60), c(q20[3], 0.40), c(q20[4], 0.20))

s <- survGetParams(points)

s

## [1] -1.868399 1.194869

We can visualize the idealized survival curve that will be generated using these parameters stored in the vector s:

survParamPlot(f = s[1], shape = s[2], points, limits = c(0, 20))
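As a rough check on what the two numbers in s mean, the curve can be written out by hand. This sketch assumes the Weibull parameterization that I believe simstudy uses, where an event time is drawn as T = (-log(U) / exp(f))^shape for uniform U, which implies S(t) = exp(-exp(f) * t^(1/shape)). The helper name `weibull_surv` is hypothetical.

```r
# Survival function implied by the assumed parameterization:
#   T = (-log(U) / exp(f))^shape  =>  S(t) = exp(-exp(f) * t^(1 / shape))
weibull_surv <- function(t, f, shape) exp(-exp(f) * t^(1 / shape))

f <- -1.868399      # first element of s (the intercept in the Weibull formula)
shape <- 1.194869   # second element of s

q20 <- c(1.44, 4.68, 8.69, 15.32)    # reported quintile times (months)
target <- c(0.80, 0.60, 0.40, 0.20)  # survival probabilities at those times

fitted <- weibull_surv(q20, f, shape)
round(cbind(time = q20, target = target, fitted = fitted), 2)
```

The fitted values should land close to, but not exactly on, the targets, since a two-parameter curve is being fit through four points.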

Generating data for a simpler two-arm RCT

Before getting into the more complicated three-armed cluster randomized trial, I started with a simpler, two-armed randomized controlled trial. The only covariate at the individual level is the binary treatment indicator (A) which takes on values of (0) (control) and (1) (treatment). The time-to-event outcome is a function of the Weibull parameters we just generated based on the quintiles, along with the treatment indicator.

def <- defData(varname = "A", formula = "1;1", dist = "trtAssign")

defS <-

defSurv(varname = "time", formula = "..int + A * ..eff", shape = "..shape") |>

defSurv(varname = "censor", formula = -40, scale = 0.5, shape = 0.10)

I generated a large data set so that we can recreate the idealized curve from above. I assumed a hazard ratio of 2 (which is actually parameterized on the log scale):

int <- s[1]

shape <- s[2]

eff <- log(2)

dd <- genData(100000, def)

dd <- genSurv(dd, defS, timeName = "time", censorName = "censor")

Here are the quintiles (and median) from the control arm, which are fairly close to the quintiles from the study:

dd[A==0, round(quantile(time, probs = c(0.20, 0.40, 0.50, 0.60, 0.80)), 1)]

## 20% 40% 50% 60% 80%

## 1.6 4.2 6.1 8.5 16.4
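To see why adding the effect on the log scale produces a hazard ratio of 2, the draw can be mimicked in base R. This is a sketch under the assumption that event times are generated as T = (-log(U) / exp(int + A * eff))^shape; under that form the treated survival curve is the control curve raised to the power exp(eff), which is what a proportional hazards model with hazard ratio exp(eff) implies. Censoring is left out here.

```r
set.seed(1)

int <- -1.868399
shape <- 1.194869
eff <- log(2)   # log hazard ratio

n <- 100000
A <- rep(0:1, each = n / 2)

# Inverse-transform draw under the assumed Weibull parameterization
time <- (-log(runif(n)) / exp(int + A * eff))^shape

# Empirical medians by arm versus the closed-form medians
med_sim <- as.numeric(tapply(time, A, median))
med_theory <- (log(2) / exp(int + c(0, 1) * eff))^shape

round(rbind(simulated = med_sim, theory = med_theory), 2)
```

The closed-form control median under these assumptions is about 6 months, in line with the 50% quantile reported above.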

Visualizing the curve and assessing its properties

A plot of the survival curves from the two arms is shown below, with the control arm in yellow and the intervention arm in red:

Fitting a model

I fit a Cox proportional hazards model just to make sure I could recover the hazard ratio I used in generating the data:

fit <- coxph(Surv(time, event) ~ factor(A), data = dd)

tidy(fit, exponentiate = TRUE)

## # A tibble: 1 × 5

## term estimate std.error statistic p.value

## <chr> <dbl> <dbl> <dbl> <dbl>

## 1 factor(A)1 1.99 0.00665 103. 0

Simulating the three-arm study data

In the proposed three-arm cluster randomized trial, there are three levels of measurement: patient, provider, and clinic. Randomization is conducted at the provider level, stratified by clinic. The hazard for individual (i), treated by provider (j) in clinic (k), is modeled as:

\[
h_{ijk}(t) = h_0(t) \exp\left( \beta_1 A_{ijk} + \beta_2 AB_{ijk} + b_j + g_k \right)
\]

where:

- \(h_0(t)\) is the baseline hazard function,

- \(\beta_1\) is the effect of treatment (A) alone,

- \(\beta_2\) is the effect of the combination of (A + B),

- \(b_j \sim N(0, \sigma^2_b)\) is the random effect for provider \(j\),

- \(g_k \sim N(0, \sigma^2_g)\) is the random effect for clinic \(k\),

- \(A_{ijk} = 1\) if provider \(j\) is randomized to treatment (A), and 0 otherwise,

- \(AB_{ijk} = 1\) if provider \(j\) is randomized to treatment (A + B), and 0 otherwise.

Data definitions

While the model is semi-parametric (i.e., it does not assume a specific distribution for event times), the data generation process is fully parametric, based on the Weibull distribution. Despite this difference, the two are closely aligned: if all goes well, we should be able to recover the parameters used for data generation when fitting the semi-parametric model as we did in the simpler RCT case above.

Data generation occurs in three broad steps:

- Clinic-level: generate the clinic-specific random effect (g).

- Provider-level: generate the provider-specific random effect (b) and assign treatment.

- Patient-level: generate individual time-to-event outcomes.

These steps are implemented using the following definitions:

defC <- defData(varname = "g", formula = 0, variance = "..s2_clinic")

defP <-

defDataAdd(varname = "b", formula = 0, variance = "..s2_prov") |>

defDataAdd(varname = "A", formula = "1;1;1", variance = "clinic", dist = "trtAssign")

defS <- defSurv(

varname = "eventTime",

formula = "..int + b + g + (A==2)*..eff_A + (A==3)*..eff_AB",

shape = "..shape")

Data generation

For this simulation, we assumed 16 clinics, each with 6 providers, and 48 patients per provider. A key element of the study is that recruitment occurs over 12 months, and patients are followed for up to 6 months after their recruitment period ends. Thus, follow-up duration varies depending on when a patient enters the study: patients recruited earlier have longer potential follow-up, while those recruited later are more likely to be censored.

This staggered follow-up is implemented in the final step of data generation:

nC <- 16 # number of clinics (clusters)

nP <- 6 # number of providers per clinic

nI <- 48 # number of patients per provider

s2_clinic <- 0.10 # variation across clinics (g)

s2_prov <- 0.25 # variation across providers (b)

eff_A <- log(c(1.4)) # log HR of intervention A (compared to control)

eff_AB <- log(c(1.6)) # log HR of combined A+B (compared to control)

ds <- genData(nC, defC, id = "clinic")

dp <- genCluster(ds, "clinic", nP, "provider")

dp <- addColumns(defP, dp)

dd <- genCluster(dp, "provider", nI, "id")

dd <- genSurv(dd, defS)

# assign a patient to a particular month - 4 per month

dd <- trtAssign(dd, nTrt = 12, strata = "provider", grpName = "month")

dd[, event := as.integer(eventTime <= 18 - month)]

dd[, obsTime := pmin(eventTime, 18 - month)]
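The censoring rule in the last two lines can be tabulated by entry month. The sketch below assumes the same Weibull form as the data generation (S(t) = exp(-exp(int) * t^(1/shape))) and looks only at a control patient whose provider and clinic effects are both zero, so the probabilities are illustrative rather than a summary of the simulated data.

```r
month <- 1:12
max_fu <- 18 - month   # longest possible follow-up for each entry month

# Assumed baseline survival function (control arm, b = g = 0)
weibull_surv <- function(t, f, shape) exp(-exp(f) * t^(1 / shape))

# Probability that the event is observed before administrative censoring
p_event <- 1 - weibull_surv(max_fu, f = -1.868399, shape = 1.194869)

follow_up <- data.frame(month, max_fu, p_event = round(p_event, 2))
follow_up
```

Patients entering in month 1 can be followed for 17 months, while those entering in month 12 have only 6, so the chance of observing the event falls steadily with later entry.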

Below is a Kaplan-Meier plot showing survival curves for each provider within each clinic, color-coded by study arm:

The mixed-effects Cox model recovers the variance components and coefficients used in data generation:

me.fit <- coxme(

Surv(eventTime, event) ~ factor(A) + (1|provider) + (1|clinic),

data = dd

)

summary(me.fit)

## Mixed effects coxme model

## Formula: Surv(eventTime, event) ~ factor(A) + (1 | provider) + (1 | clinic)

## Data: dd

##

## events, n = 3411, 4608

##

## Random effects:

## group variable sd variance

## 1 provider Intercept 0.5437050 0.29561511

## 2 clinic Intercept 0.3051615 0.09312354

## Chisq df p AIC BIC

## Integrated loglik 919.4 4.00 0 911.4 886.9

## Penalized loglik 1251.0 86.81 0 1077.3 544.8

##

## Fixed effects:

## coef exp(coef) se(coef) z p

## factor(A)2 0.3207 1.3781 0.1493 2.15 0.03173

## factor(A)3 0.4650 1.5921 0.1472 3.16 0.00158
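The two fixed effects are both relative to control, so the comparison of (A + B) with (A) alone is the difference between them rather than a line in the summary. A Wald test for that contrast could be sketched as below. The function `wald_contrast` is hypothetical, and the off-diagonal covariance of 0.011 is a placeholder invented for illustration; only the coefficients and standard errors are taken from the output above.

```r
# Wald test for a linear contrast k'beta, given a coefficient covariance matrix V
wald_contrast <- function(beta, V, k) {
  est <- sum(k * beta)
  se <- sqrt(as.numeric(t(k) %*% V %*% k))
  z <- est / se
  c(logHR = est, HR = exp(est), se = se, z = z, p = 2 * pnorm(-abs(z)))
}

# Coefficients and variances from the summary; the covariance is a made-up placeholder
beta <- c(A = 0.3207, AB = 0.4650)
V <- matrix(c(0.1493^2, 0.011,
              0.011,    0.1472^2), nrow = 2, byrow = TRUE)

res <- wald_contrast(beta, V, k = c(-1, 1))
round(res, 3)
```

With a fitted model in hand, fixef(me.fit) and vcov(me.fit) should supply beta and V, assuming the coxme methods behave as I expect.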

Typically, I would use this data generation and model fitting code to estimate power or sample size requirements. While I did carry out those steps, I’ve left them out here so that you can try them yourself (though I’m happy to share my code if you’re interested). Beyond estimating sample size, simulation studies like this can also be used to evaluate the Type I error rates of the gatekeeping hypothesis testing framework.
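For readers who want a starting point, here is a deliberately simplified sketch of the replication loop. It is not the code used for the study: it randomizes individuals rather than providers, has no clinic or provider random effects, uses a single fixed follow-up window, and fits ordinary Cox models, so it will overstate the power of the clustered design. The function names, the 200 patients per arm, and the 12-month window are all assumptions.

```r
library(survival)

# Assumed Weibull draw: T = (-log(U) / exp(lp))^shape
r_weib <- function(lp, shape) (-log(runif(length(lp))) / exp(lp))^shape

# p-value for one pairwise comparison from an ordinary Cox model
pair_p <- function(d, ref, trt) {
  sub <- d[d$arm %in% c(ref, trt), ]
  sub$x <- as.integer(sub$arm == trt)
  fit <- coxph(Surv(time, event) ~ x, data = sub)
  summary(fit)$coefficients[1, "Pr(>|z|)"]
}

# One simulated trial: arm 1 = control, arm 2 = A, arm 3 = A + B
one_rep <- function(n_arm, int, shape, eff_A, eff_AB, max_fu = 12) {
  arm <- rep(1:3, each = n_arm)
  lp <- int + (arm == 2) * eff_A + (arm == 3) * eff_AB
  t_event <- r_weib(lp, shape)
  d <- data.frame(arm = arm,
                  time = pmin(t_event, max_fu),            # observed time
                  event = as.integer(t_event <= max_fu))   # 1 = event observed
  c(A_vs_ctrl = pair_p(d, 1, 2),
    AB_vs_A = pair_p(d, 2, 3),
    AB_vs_ctrl = pair_p(d, 1, 3))
}

set.seed(2025)
pvals <- replicate(200, one_rep(n_arm = 200, int = -1.868399, shape = 1.194869,
                                eff_A = log(1.4), eff_AB = log(1.6)))

# Proportion of replications in which each pairwise test rejects at 0.05
power <- rowMeans(pvals < 0.05)
round(power, 2)
```

The matrix of p-values can then be passed through whatever gating rule is adopted, and setting both effects to zero turns the same loop into a Type I error check.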

References:

Proschan, M.A. and Brittain, E.H., 2020. A primer on strong vs weak control of familywise error rate. Statistics in medicine, 39(9), pp.1407-1413.

Continue reading: Planning for a 3-arm cluster randomized trial with a nested intervention and a time-to-event outcome

Understanding the Implications of Planning a 3-Arm Cluster Randomized Trial with a Nested Intervention and a Time-to-Event Outcome

A recent case study has provided some valuable insights into the planning process of a three-arm cluster-randomized trial, which includes a nested intervention and a time-to-event outcome. This approach may have far-reaching implications for the way researchers design complex trials in future, with potential benefits for the efficiency and relevance of these studies.

The Importance of Choosing the Right Trial Design

The key points of the study involve advising a researcher on the most efficient approach to testing the effectiveness of two interventions, named A and B. After discussing the interventions, it became clear that intervention A was the primary focus, with intervention B potentially enhancing its effectiveness but having little impact on its own.

This dynamic led to the proposal of a three-arm cluster-randomized trial with a control arm, an arm receiving A alone, and an arm receiving A combined with B. This design is more efficient and relevant than one that also randomizes clinics or providers to receive B alone, because B is expected to matter mainly in combination with A.

The Three Goals of the Study

- Assess the effectiveness of A versus control

- Compare A+B versus A alone

- If A alone is ineffective, compare A+B versus control

The outcomes of these goals can add significant value to the field of research, particularly in our understanding of how interventions can be combined for maximum efficacy.

Facing Future Challenges

Going forward, it’s important to address potential challenges that may arise during this type of trial. Notably, the author had previously described a two-step randomization scheme that assumes outcomes in the enhanced arm (A+B) are uncorrelated with those in the standalone arm (A) within the same cluster. That assumption did not seem plausible for this project, so a standard cluster-level randomization was recommended instead.

Applying the Lessons Learned

Trialing Interventions

When designing trials that test interventions, consider the potential interaction effects between multiple interventions. Doing so may lead to more relevant and efficient trial designs which can provide greater insights.

Choosing the Right Approach

Ensure the correctness of your assumptions when selecting an approach to your trial design. For instance, the assumption that outcomes in one treatment arm are unrelated to those in another can significantly affect the success of your trials if it does not hold.

Considering Gatekeeping Strategies

To maintain an overall Type I error rate at 5% while allowing for multiple pairwise comparisons, as demonstrated in the case study, consider using a gatekeeping strategy. This approach could keep each test performed at a significance level of 0.05 while controlling for multiple comparisons.

Deeper Exploration

Running a detailed simulation study, as shown in this case, can be an effective method not only for determining sample size requirements for the proposed trial, but also to evaluate the Type I error rates of the gatekeeping hypothesis testing framework.

In conclusion, when planning for a three-arm, cluster-randomised trial with a nested intervention and a time-to-event outcome, it is essential to carefully consider the design and the potentially combined impacts of various interventions.


by jsendak | May 20, 2025 | AI

arXiv:2505.11584v1 Announce Type: new

Abstract: LLMs are being set loose in complex, real-world environments involving sequential decision-making and tool use. Often, this involves making choices on behalf of human users. However, not much is known about the distribution of such choices, and how susceptible they are to different choice architectures. We perform a case study with a few such LLM models on a multi-attribute tabular decision-making problem, under canonical nudges such as the default option, suggestions, and information highlighting, as well as additional prompting strategies. We show that, despite superficial similarities to human choice distributions, such models differ in subtle but important ways. First, they show much higher susceptibility to the nudges. Second, they diverge in points earned, being affected by factors like the idiosyncrasy of available prizes. Third, they diverge in information acquisition strategies: e.g. incurring substantial cost to reveal too much information, or selecting without revealing any. Moreover, we show that simple prompt strategies like zero-shot chain of thought (CoT) can shift the choice distribution, and few-shot prompting with human data can induce greater alignment. Yet, none of these methods resolve the sensitivity of these models to nudges. Finally, we show how optimal nudges optimized with a human resource-rational model can similarly increase LLM performance for some models. All these findings suggest that behavioral tests are needed before deploying models as agents or assistants acting on behalf of users in complex environments.

Expert Commentary: Understanding the Impact of Nudges on Large Language Models

Language models have become increasingly prevalent in real-world applications, including sequential decision-making and tool use. However, as highlighted in this study, there is a significant gap in our understanding of how these models make choices on behalf of human users and how susceptible they are to different choice architectures.

This case study delves into the nuanced differences observed when applying canonical nudges such as default options, suggestions, and information highlighting to large language models (LLMs) in a multi-attribute tabular decision-making problem. One key takeaway is the heightened susceptibility of LLMs to nudges compared to human decision-makers. This highlights the importance of considering the unique characteristics of these models when designing decision support systems.

Furthermore, the study reveals that LLMs exhibit divergent behaviors in points earned and information acquisition strategies, shedding light on their decision-making processes. The introduction of prompt strategies like zero-shot chain of thought (CoT) and few-shot prompting with human data demonstrates the potential for aligning LLM choices more closely with human preferences.

Despite these advancements, none of the prompting strategies resolves the sensitivity of LLMs to nudges. The study also reports that optimal nudges derived from a human resource-rational model can improve LLM performance for some models. Together, these findings underscore the need for thorough behavioral testing before deploying LLMs as agents or assistants in complex environments, where their decisions can impact human users.

The multi-disciplinary nature of this study, combining insights from behavioral science, artificial intelligence, and decision theory, underscores the complex interplay of factors influencing LLM choices. By further exploring the underlying mechanisms driving LLM decision-making, researchers can enhance the transparency, performance, and ethical considerations surrounding the use of LLMs in real-world applications.


by jsendak | May 19, 2025 | Art

The Transformative Nature of Queer Animals

In recent years, there has been a growing acceptance and celebration of diverse gender and sexual identities among humans. However, these conversations often overlook the existence of queer identities in the animal kingdom. From same-sex pairings to gender-bending behaviors, non-human animals exhibit a wide spectrum of gender and sexual expressions that challenge traditional binary understandings.

Exploring the lives of queer animals allows us to question the boundaries we have constructed between nature and culture, as well as challenge our preconceived notions of what is “natural” or “normal.” By acknowledging and studying queer identities in the animal world, we can gain valuable insights into the fluidity and complexity of gender and sexuality across species.

Historical Perspectives

Historically, queer identities in animals have often been dismissed or ignored in scientific research. Early studies focused primarily on heterosexual mating patterns and reproductive behaviors, reinforcing the concept of heteronormativity as the “natural” order of things. It was not until the late 20th century that researchers began to actively investigate and document queer behaviors in animals.

Contemporary Relevance

Today, as conversations around gender and sexuality continue to evolve, the study of queer animals has taken on new importance. Researchers are now documenting a wide range of queer behaviors in various species, from bonobos engaging in same-sex sexual activity to clownfish changing gender in response to environmental factors.

This article will delve into the fascinating world of queer animals, exploring the ways in which they challenge our assumptions about gender and sexuality. By examining the transformative cocoons of queer identities in the natural world, we can gain a deeper understanding of the diversity and beauty of life on Earth.

Queer animals and transformative cocoons.


by jsendak | May 19, 2025 | Art

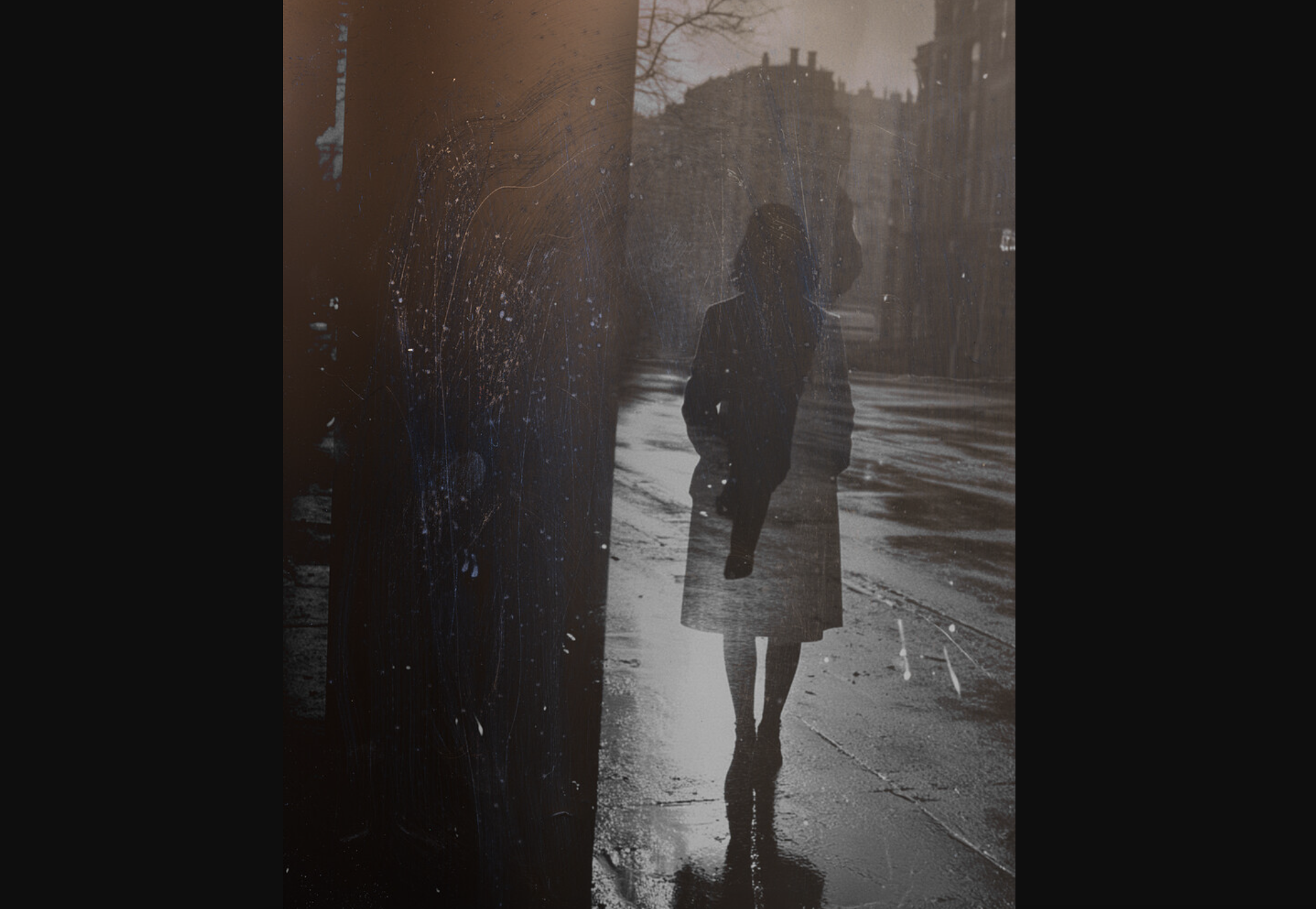

Welcome to No Denial, No Explanation

In his latest exhibition, Dirk Braeckman invites viewers to delve into a world of enigmatic beauty and introspection. Through his mesmerizing photographs, Braeckman challenges us to confront our own perceptions of reality and to question the narratives that shape our understanding of the world.

With a career spanning over three decades, Braeckman has established himself as a leading figure in the contemporary art world. His work is characterized by a unique blend of abstraction and realism, creating images that are both haunting and captivating.

The Power of Visual Language

Braeckman’s photographs are a testament to the power of visual language. By capturing moments of stillness and quietude, he allows us to see the world in a new light. Through his lens, everyday objects and scenes are transformed into poetic reflections on the human experience.

“I am interested in the process of seeing, in the act of looking,” Braeckman explains. “My work is about creating a space for contemplation and reflection, a space where viewers can lose themselves in the beauty of the moment.”

- By stripping away context and narrative, Braeckman forces us to confront the essence of his subjects.

- His images are devoid of explanation, inviting us to draw our own conclusions and interpretations.

- In a world inundated with information and noise, Braeckman’s work offers a moment of respite and reflection.

As we navigate the complexities of modern life, the photographs in No Denial, No Explanation serve as a poignant reminder of the importance of slowing down and truly seeing the world around us. Through Braeckman’s lens, we are encouraged to embrace the mysteries and uncertainties that define our existence.

Tim Van Laere Gallery presents No Denial, No Explanation, a new solo exhibition by internationally acclaimed artist Dirk Braeckman.


by jsendak | May 16, 2025 | DS Articles

[This article was first published on Jason Bryer, and kindly contributed to R-bloggers.]

tl;dr

Once the login package is installed, you can run two demos using the following commands:

Note that this is cross posted with a vignette in the login R package. For the most up-to-date version go here: https://jbryer.github.io/login/articles/paramaters.html Comments can be directed to me on Mastodon at @jbryer@vis.social.

Introduction

Shiny is an incredible tool for interactive data analysis. For the vast majority of Shiny applications I have developed, I make a choice regarding the default state of the application but provide plenty of options for the user to change and/or customize the analysis. However, there are situations where the application would be better if the user were required to input certain parameters. Conceptually, I often think of a Shiny application as an interactive version of a function with many parameters, some of which the user needs to define. This vignette describes a Shiny module where a given set of parameters must be set before the user engages with the main Shiny application, and those settings can optionally be saved as cookies to be used across sessions. Even though this is the main motivation for this Shiny module, it can also be used as a framework for saving user preferences where saving state on the Shiny server is not possible (e.g. when deployed to www.shinyapps.io).

The user parameter module is part of the login R package. The goal is to present the user with a set of parameters in a modal dialog as the Shiny application loads. The primary interface is through the userParamServer() function that can be included in the server code. The following is a basic example.

params <- userParamServer(

id = 'example',

params = c('name', 'email'),

param_labels = c('Your Name:', 'Email Address:'),

param_types = c('character', 'character'),

intro_message = 'This is an example application that asks the user for two parameters.',

validator = my_validator

)

Like all Shiny modules, the id parameter is a unique identifier connecting the server logic to the UI components. The params parameter is a character vector of the names of the parameters users need to input. These are the only two required parameters. By default, all parameters are assumed to be character values entered through the shiny::textInput() function. However, the module supports multiple input types, including:

- date: Date values

- integer: Integer values

- numeric: Numeric values

- file: File uploads (note the value will be the path to where the file is uploaded)

- select: Drop-down selection. This type requires additional information via the input_params parameter discussed later.

The above will present the user with a modal dialog immediately when the Shiny application starts up as depicted below.

The values can then be retrieved from the params object, which is depicted in the figure below.

The userParamServer() function returns a shiny::reactiveValues() object. As a result, any code that uses these values should automatically be updated if the values change.

There are two UI components, specifically the showParamButton() and clearParamButton() buttons. The former will display the modal dialog allowing the user to change the values. The latter will clear all the values set (including cookies if enabled).

Cookies

It is possible to save the user’s parameter values across sessions by saving them to cookies (as long as allow_cookies = TRUE). If the allow_cookies parameter is TRUE, the user can still opt not to save the values as cookies. It is recommended to set the cookie_password value so that the cookie values are encrypted. This feature uses the cookies R package and requires that cookies::cookie_dependency() is placed somewhere in the Shiny UI.

Full Shiny Demo

The figures above are from the Shiny application provided below.

library(shiny)

library(login)

library(cookies)

#' Simple email validator.

#' @param x string to test.

#' @return TRUE if the string is a valid email address.

is_valid_email <- function(x) {

grepl("\\<[A-Z0-9._%+-]+@[A-Z0-9.-]+\\.[A-Z]{2,}\\>", as.character(x), ignore.case=TRUE)

}

#' Custom validator function that also checks if the `email` field is a valid email address.

my_validator <- function(values, types) {

spv <- simple_parameter_validator(values)

if(!is.logical(spv)) {

return(spv)

} else {

if(is_valid_email(values[['email']])) {

return(TRUE)

} else {

return(paste0(values[['email']], ' is not a valid email address.'))

}

}

return(TRUE)

}

ui <- shiny::fluidPage(

cookies::cookie_dependency(), # Necessary to save/get cookies

shiny::titlePanel('Parameter Example'),

shiny::verbatimTextOutput('param_values'),

showParamButton('example'),

clearParamButton('example')

)

server <- function(input, output) {

params <- userParamServer(

id = 'example',

validator = my_validator,

params = c('name', 'email'),

param_labels = c('Your Name:', 'Email Address:'),

param_types = c('character', 'character'),

intro_message = 'This is an example application that asks the user for two parameters.')

output$param_values <- shiny::renderText({

txt <- character()

for(i in names(params)) {

txt <- paste0(txt, i, ' = ', params[[i]], '\n')

}

return(txt)

})

}

shiny::shinyApp(ui = ui, server = server, options = list(port = 2112))

Validation

The validator parameter specifies a validation function that ensures the parameters entered by the user are valid. The default, simple_parameter_validator(), simply ensures that values have been entered. The Shiny application above extends this by also checking whether the email address appears to be valid.

Validation functions must adhere to the following:

- It must take two parameters: values, which is a character vector the user has entered, and types, which is a character vector of the types described above.

- It must return TRUE if the validation passes, or a character string describing why the validation failed. This message will be displayed to the user.

If the validation function returns anything other than TRUE, the modal dialog will be displayed.
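As a further illustration of that contract, here is a sketch of a validator that checks that any parameter typed as integer really is a whole number. The function name `integer_validator` is hypothetical, and the sketch assumes that values can be indexed by position and that types is in the same order as values; it has not been checked against how the module actually passes these arguments.

```r
# Hypothetical validator: returns TRUE on success, otherwise a message for the user.
# Assumes `values` and `types` are aligned by position.
integer_validator <- function(values, types) {
  for (i in seq_along(types)) {
    v <- as.character(values[[i]])
    # Every parameter must have a non-empty value
    if (length(v) == 0 || is.na(v) || !nzchar(v)) {
      return(paste0("Parameter ", i, " is missing."))
    }
    # Integer-typed parameters must consist of digits only (optional leading minus)
    if (types[i] == "integer" && !grepl("^-?[0-9]+$", v)) {
      return(paste0("'", v, "' is not a whole number."))
    }
  }
  TRUE
}

ok <- integer_validator(list(name = "Ann", n = "12"), c("character", "integer"))
bad <- integer_validator(list(name = "Ann", n = "1.5"), c("character", "integer"))
empty <- integer_validator(list(name = "", n = "3"), c("character", "integer"))
```

Returning the message as a plain string, rather than stopping with an error, matches the rule that anything other than TRUE is treated as a failed validation.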

Continue reading: User parameters for Shiny applications

Understanding Shiny Applications and User Parameters

The original text focuses on Shiny, a powerful tool for interactive data analysis, and on the user parameter module that is part of the login R package. The module lets an application require a set of parameters, optionally saved across sessions, before the user interacts with the Shiny app.

Future Developments and Long-term Implications

As the data analytics field continues to grow, personalization and interactivity are key aspects that contribute to the user experience. The incorporation of this module into Shiny applications allows for more tailored analytics, enhancing the user experience and potentially making Shiny applications more user-friendly.

Moreover, the ability to save user preferences across sessions through cookies can positively affect the long-term usage of the application. The ability to recall saved user preferences reduces the need to reconfigure settings during future sessions, thereby enhancing usage efficiency.

Potential Use Cases

- Data Customisation: The ability to customise data through user parameters can find effective use in different sectors. It can tailor the analytics experience to individual user needs and preferences in everything from education and healthcare to marketing and ecommerce.

- User Experience: By improving the interactivity and personalization of Shiny applications, this development promises a more enriching user experience, boosting user engagement and satisfaction.

Actionable Advice

If you’re an app developer or data analyst, consider employing Shiny and its new user parameter module to provide a personalised and interactive experience for your users. By offering them control over defining default parameters, you can make the analytical process better tailored to their needs.

As Shiny applications have potential across a range of industries, keep an eye on emerging trends and make adjustments accordingly to maximise your application’s reach and impact.

Understand your user’s needs and design user parameters in a way that offers them a seamless experience, and enhance their interaction with your application.

Lastly, remember to acknowledge the importance of data privacy. If you choose to store cookies, make sure the users are clearly informed, and necessary encryption is used to ensure the privacy of user details.
