by jsendak | Apr 5, 2025 | DS Articles

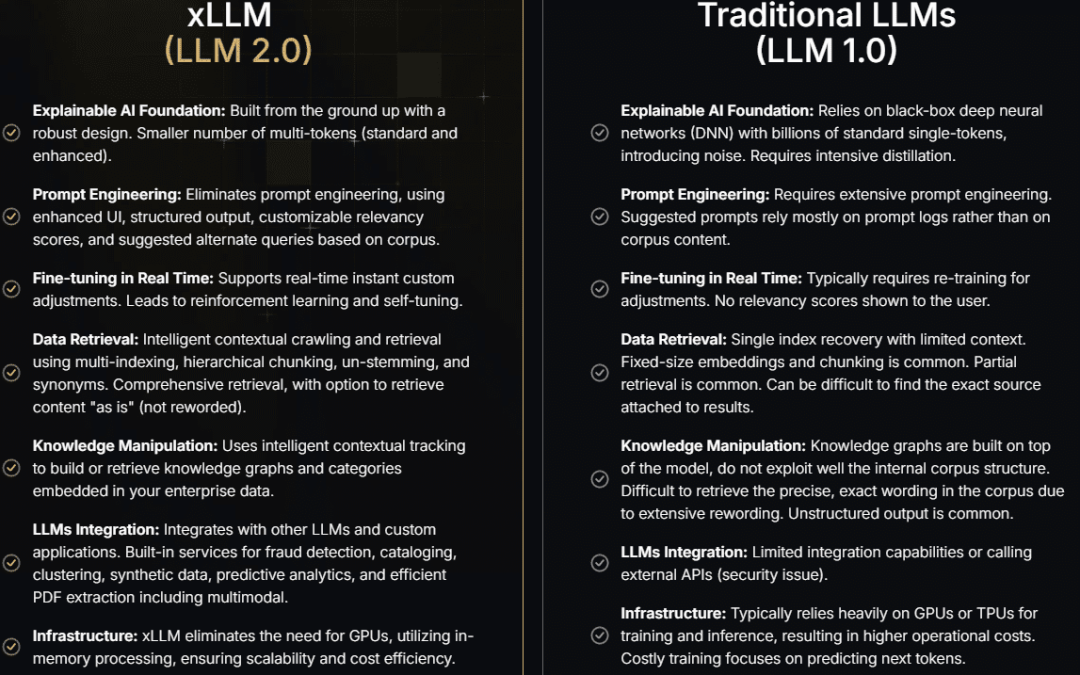

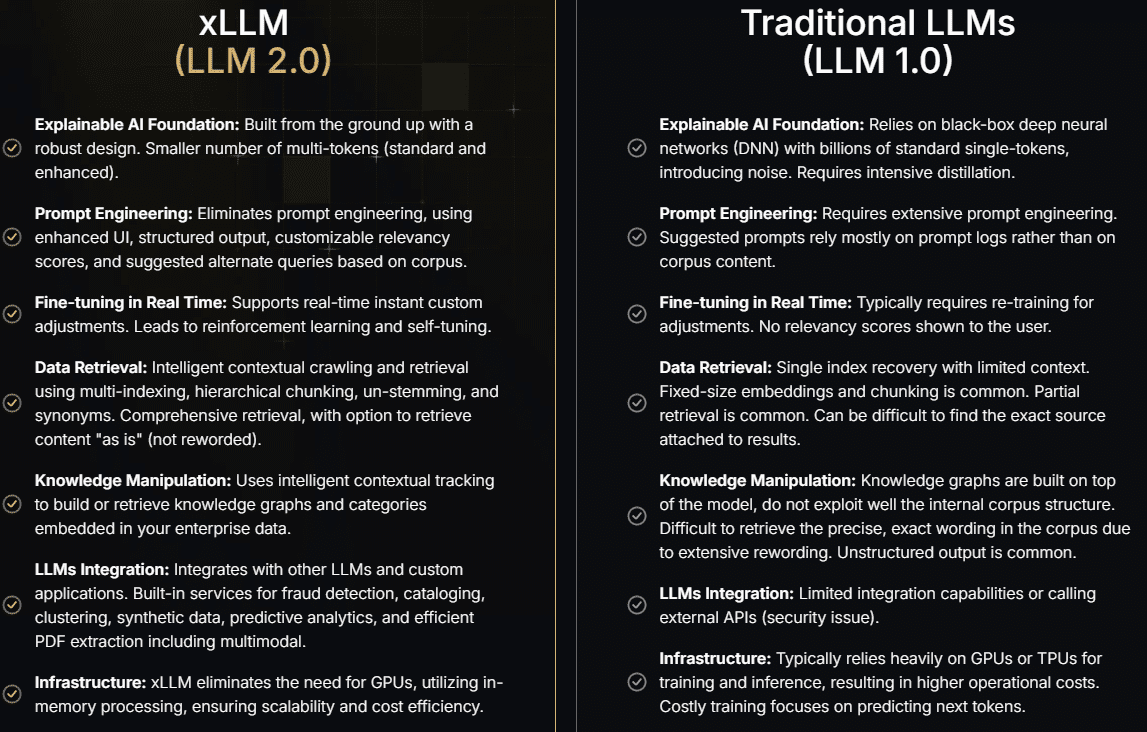

The Rise of Specialized LLMs for Enterprise | LLMs with Billions of Parameters | LLM 2.0, The NextGen AI Systems for Enterprise

Unpacking the Rise of Specialized LLMs

Large Language Models (LLMs) are revolutionizing business operations, giving rise to a new breed of specialized models for enterprises. The development of LLMs with billions of parameters – dubbed ‘LLM 2.0’ – reflects the next generation of AI systems designed for business, boasting potential implications for productivity, innovation, and efficiency.

Long-term Implications and Future Developments

LLMs have immense potential to transform how businesses operate. However, with their evolution to specialized LLMs and the introduction of LLM 2.0, the enterprise sector can anticipate some significant changes.

Improved Business Efficiency

Specialized LLMs can automate many routine tasks, such as managing emails, writing reports, and filling out forms. This automation can lead to greater efficiency, freeing up employee time to focus on more strategic and creative tasks.

Increased Innovation

LLMs ability to process vast amounts of data and generate models can spur innovation across industries. Businesses can use these models to uncover new insights, make predictions, and develop new products and services.

Enhanced Personalization

Given their capability to learn and adapt, specialized LLMs can deliver highly personalized experiences. From tailoring product recommendations to crafting personalized messages, LLMs can engage customers in a more meaningful way.

Anticipating Challenges

While the rise of specialized LLMs presents exciting opportunities, it also poses new challenges. Developing and managing these complex models require significant resources and expertise. Moreover, as LLMs become more sophisticated, issues related to privacy and security cannot be overlooked.

Actionable Advice for Enterprises

To leverage the potential of LLM 2.0 and specialized LLMs, businesses should consider the following:

- Invest in AI training: Staff should be trained in basic AI understanding and its implementation in the business setting.

- Collaborate with AI experts: Partner with AI experts or hire in-house talent to manage and optimize specialized LLMs.

- Set appropriate security measures: Develop robust security protocols to safeguard data and machine learning models.

- Focus on data privacy: Be transparent about how you collect, store, and use customer data and ensure compliance with regulations.

In summary, the emergence of specialized LLMs and the upgrade to LLM 2.0 present transformative opportunities for enterprises across industries. However, organizations must also anticipate and address potential challenges to make the most of these developments.

Read the original article

by jsendak | Feb 24, 2025 | Computer Science

Exploring the Need for Explainable AI (XAI)

Artificial Intelligence (AI) has become increasingly prevalent in various industries, but its lack of explainability poses a significant challenge. In order to mitigate the risks associated with AI technology, the industry and regulators must focus on developing eXplainable AI (XAI) techniques. Fields that require accountability, ethics, and fairness, such as healthcare, credit scoring, policing, and the criminal justice system, particularly necessitate the implementation of XAI.

The European Union (EU) recognizes the importance of explainability and has incorporated it as one of the fundamental principles in the AI Act. However, the specific XAI techniques and requirements are yet to be determined and tested in practice. This paper delves into various approaches and techniques that show promise in advancing XAI. These include model-agnostic methods, interpretability tools, algorithm transparency, and interpretable machine learning models.

One of the key challenges in implementing the principle of explainability in AI governance and policies is striking a balance between transparency and protecting proprietary information. Companies may be reluctant to disclose their AI algorithms or trade secrets due to intellectual property concerns. Finding a middle ground where transparency is maintained without compromising competitiveness is crucial for successful XAI implementation.

The Integration of XAI into EU Law

The integration of XAI into EU law requires careful consideration of various factors, including standard setting, oversight, and enforcement. Standard setting plays a crucial role in establishing the benchmark for XAI requirements. The EU can collaborate with experts and stakeholders to define industry standards that ensure transparency, interpretability, and fairness in AI systems.

Oversight is an essential component of implementing XAI in EU law. Regulatory bodies must have the authority and resources to monitor AI systems effectively. This includes conducting audits, assessing the impact of AI on individuals and society, and ensuring compliance with XAI standards. Additionally, regular reviews and updates of XAI guidelines should be conducted to keep up with evolving technological advancements.

Enforcement mechanisms are vital for ensuring compliance with XAI regulations. Penalties and sanctions for non-compliance should be clearly defined to promote adherence to the established XAI standards. Additionally, a system for reporting concerns and violations should be put in place to encourage accountability and transparency.

What to Expect Next

The journey towards implementing XAI in EU law is still in its early stages. As the EU Act on AI progresses, it is expected that further research and experimentation will be conducted to determine the most effective XAI techniques for different sectors. Collaboration between academia, industry experts, and regulators will be vital in this process.

Additionally, the EU is likely to focus on international cooperation. Given the global nature of AI technology, harmonization of XAI standards and regulations across countries can maximize the benefits of explainability while minimizing its challenges. Encouraging dialogue and collaboration with other regions will be essential for creating a unified approach to XAI governance.

In conclusion, the implementation of XAI is crucial for ensuring transparency, accountability, and fairness in AI systems. The EU’s emphasis on explainability in the AI Act reflects a commitment to addressing these concerns. The challenges of implementing XAI in governance and policies must be navigated thoughtfully, considering factors such as intellectual property protection and enforcement mechanisms. Collaboration and research will pave the way for successful integration of XAI into EU law.

Read the original article

by jsendak | Feb 7, 2025 | DS Articles

Although it’s rarely publicized in the media, not everything about deploying—and certainly not about training or fine-tuning—advanced machine learning models are readily accessible through an API. For certain implementations, the success of enterprise-scale applications of language models hinges on hardware, supporting infrastructure, and other practicalities that require more than just a cloud service provider. Graphics… Read More »Underpinning advanced machine learning models with GPUs

Analyzing the Crucial Role of Hardware and Supporting Infrastructure in Deploying Advanced Machine Learning Models

While the media often magnifies the role of APIs in deploying machine learning models, many critical elements deserve attention. The success of large-scale applications of language models depends not only on accessible APIs but also on hardware components, a supportive infrastructure, and other practical aspects. These elements often necessitate more reliance on a comprehensive cloud service provider, rather than a simple API structure.

More than an API: The Need for a Solid Back-end Infrastructure

APIs might streamline the process of accessing and deploying machine learning models, but they just make up a part of the process. However, the underpinnings- the graphics processing units (GPUs), robust supportive infrastructure are what truly power such advanced models. When deploying on a large, enterprise-scale, this backbone becomes even more essential.

Potential Future Developments

Given this realization, it’s likely that future developments will focus more on strengthening and advancing these back-end components. More efficient GPUs and stronger cloud service infrastructures will be the cornerstone to handle increasingly complex machine learning models.

How to Prepare for These Changes

Given the long-term implications of these findings, companies and individuals interested in deploying advanced machine learning models should focus on the following steps:

- Invest in capable hardware: Owing to the increased workloads of machine learning models, investing in high-performance GPUs has become a necessity. Future-proof your system by opting for hardware that can support the ongoing advancements in machine learning.

- Choose a strong cloud service provider: APIs may provide the interface, but a strong cloud service provider will provide the supporting infrastructure crucial for successful deployments. Choose providers that not only offer extensive functionality but also ensure high reliability and robustness.

- Stay updated on AI advancements: As AI and machine learning continue to advance, staying updated with the latest trends and developments ensures preparedness for any system-related adjustments and overhauls.

“Shifting the focus from simply deploying machine learning models via APIs to developing a stronger infrastructure for these models will prove most beneficial in the long run.”

Take the above points into consideration when designing a strategy for the implementation of enterprise-scale machine learning models. Investing in the right hardware, partnering with a robust cloud service provider, and staying on top of AI trends will ensure the successful deployment and long-term efficiency of your machine learning applications.

Read the original article

by jsendak | Feb 5, 2025 | Computer Science

Fruits are not only delicious but also incredibly beneficial for our health. They provide essential vitamins and nutrients that are vital for our well-being. To promote safe fruit consumption and reduce health risks, a study has introduced two fully automated devices: FruitPAL and its updated version, FruitPAL 2.0.

FruitPAL and FruitPAL 2.0 leverage a high-quality dataset of fifteen different fruit types and utilize advanced models, namely YOLOv8 and YOLOv5 V6.0, to enhance detection accuracy. The original FruitPAL device can detect various fruit types and even notify caregivers if it detects an allergic reaction. This is made possible by the improved accuracy and rapid response time of the YOLOv8 model. Notifications are instantly transmitted to mobile devices through the cloud, ensuring that caregivers receive real-time updates and can quickly address any health concerns.

FruitPAL 2.0 takes fruit detection a step further by not only identifying the fruit but also estimating its nutritional value. This additional capability encourages healthy consumption by providing users with valuable dietary insights. By training on the YOLOv5 V6.0 model, FruitPAL 2.0 can analyze fruit intake and help individuals make informed choices about their diet.

This study aims to promote fruit consumption by balancing health benefits with allergy awareness. By alerting users to potential allergens and promoting the consumption of nutrient-rich fruits, both FruitPAL and FruitPAL 2.0 support health maintenance and dietary awareness.

With the continuous advancements in machine learning models and datasets, the future possibilities for these devices are promising. For example, future versions of FruitPAL could incorporate even more accurate models for fruit detection and allergy identification. This would further enhance the devices’ ability to provide vital information to caregivers, ensuring the safety of individuals with fruit allergies.

Moreover, as the nutritional value estimation feature of FruitPAL 2.0 becomes more accurate and comprehensive, it could potentially be extended to include recommendations for personalized dietary plans based on an individual’s specific health needs and goals. This would empower users to make informed decisions and optimize their fruit consumption for overall well-being.

Overall, the introduction of FruitPAL and FruitPAL 2.0 is a significant step towards promoting safe and healthy fruit consumption. These automated devices not only enhance detection accuracy but also provide valuable insights into the nutritional value of fruits. As these devices continue to evolve, they have the potential to revolutionize the way we approach fruit consumption, benefiting both individuals and caregivers in maintaining optimal health.

Read the original article

by jsendak | Jan 20, 2025 | AI

arXiv:2501.09890v1 Announce Type: new

Abstract: This paper investigates the application of artificial intelligence (AI) in early-stage recruitment interviews in order to reduce inherent bias, specifically sentiment bias. Traditional interviewers are often subject to several biases, including interviewer bias, social desirability effects, and even confirmation bias. In turn, this leads to non-inclusive hiring practices, and a less diverse workforce. This study further analyzes various AI interventions that are present in the marketplace today such as multimodal platforms and interactive candidate assessment tools in order to gauge the current market usage of AI in early-stage recruitment. However, this paper aims to use a unique AI system that was developed to transcribe and analyze interview dynamics, which emphasize skill and knowledge over emotional sentiments. Results indicate that AI effectively minimizes sentiment-driven biases by 41.2%, suggesting its revolutionizing power in companies’ recruitment processes for improved equity and efficiency.

Artificial intelligence (AI) has shown promise in various industries, and its potential to transform the recruitment process is no exception. This paper delves into the use of AI in early-stage recruitment interviews, specifically focusing on reducing sentiment bias. Sentiment bias is prevalent in traditional interviews, where human interviewers can unknowingly be influenced by their own biases.

The study highlights several biases that can affect the interviewer’s judgment, including interviewer bias, social desirability effects, and confirmation bias. These biases can lead to non-inclusive hiring practices and a lack of diversity in the workforce. By using AI interventions, such as multimodal platforms and interactive candidate assessment tools, companies can aim for a more unbiased and equitable recruitment process.

However, this paper takes it a step further by introducing a unique AI system designed to transcribe and analyze interview dynamics with a focus on skill and knowledge rather than emotional sentiments. This approach aims to minimize the impact of sentiment-driven biases, effectively reducing them by 41.2% based on the results of the study.

The interdisciplinary nature of this topic is worth noting. The application of AI in recruitment interviews combines elements of psychology, computer science, and data analysis. Understanding human biases requires a deep understanding of psychology and social dynamics, while developing AI systems involves advanced computer science and machine learning techniques. Analyzing the data collected from interviews also requires expertise in data analysis and statistical methods.

The results of this study suggest that AI has the potential to revolutionize the recruitment process by reducing bias and ensuring a more diverse and inclusive workforce. By focusing on objective measures of skill and knowledge, companies can make more informed decisions during the early-stage recruitment process. This not only improves equity but also enhances overall efficiency by removing subjective biases that can cloud judgment.

It is important to note that AI interventions in early-stage recruitment interviews should be used as a complement to human judgment, rather than a replacement. Human intuition and qualitative assessment still hold value in assessing candidate fit and cultural compatibility. AI can serve as a valuable tool in screening and analyzing large volumes of candidates, reducing bias, and improving efficiency.

As AI technology continues to advance, there is potential for further enhancements in assessing interview dynamics and reducing bias. Natural language processing algorithms can be refined to better understand nuances in communication, while machine learning models can be trained on larger and more diverse datasets to improve accuracy. Ongoing research and collaboration between experts in various fields will be crucial in harnessing the full potential of AI in early-stage recruitment interviews.

Read the original article