by jsendak | Oct 24, 2024 | AI

arXiv:2410.16537v1 Announce Type: new Abstract: The impressive performance of deep learning models, particularly Convolutional Neural Networks (CNNs), is often hindered by their lack of interpretability, rendering them “black boxes.” This opacity raises concerns in critical areas like healthcare, finance, and autonomous systems, where trust and accountability are crucial. This paper introduces the QIXAI Framework (Quantum-Inspired Explainable AI), a novel approach for enhancing neural network interpretability through quantum-inspired techniques. By utilizing principles from quantum mechanics, such as Hilbert spaces, superposition, entanglement, and eigenvalue decomposition, the QIXAI framework reveals how different layers of neural networks process and combine features to make decisions. We critically assess model-agnostic methods like SHAP and LIME, as well as techniques like Layer-wise Relevance Propagation (LRP), highlighting their limitations in providing a comprehensive view of neural network operations. The QIXAI framework overcomes these limitations by offering deeper insights into feature importance, inter-layer dependencies, and information propagation. A CNN for malaria parasite detection is used as a case study to demonstrate how quantum-inspired methods like Singular Value Decomposition (SVD), Principal Component Analysis (PCA), and Mutual Information (MI) provide interpretable explanations of model behavior. Additionally, we explore the extension of QIXAI to other architectures, including Recurrent Neural Networks (RNNs), Long Short-Term Memory (LSTM) networks, Transformers, and Natural Language Processing (NLP) models, and its application to generative models and time-series analysis. The framework applies to both quantum and classical systems, demonstrating its potential to improve interpretability and transparency across a range of models, advancing the broader goal of developing trustworthy AI systems.

The article “Quantum-Inspired Explainable AI: Enhancing Neural Network Interpretability” addresses the lack of interpretability in deep learning models, particularly Convolutional Neural Networks (CNNs). The opacity of these models, often referred to as “black boxes,” raises concerns in critical areas such as healthcare, finance, and autonomous systems, where trust and accountability are crucial. To address this issue, the paper introduces the QIXAI Framework (Quantum-Inspired Explainable AI), which draws on principles from quantum mechanics to enhance the interpretability of neural networks. By leveraging concepts like Hilbert spaces, superposition, entanglement, and eigenvalue decomposition, the QIXAI framework provides insights into how different layers of neural networks process and combine features to make decisions. The paper critically assesses existing methods, namely the model-agnostic SHAP and LIME as well as Layer-wise Relevance Propagation (LRP), highlighting their limitations in providing a comprehensive view of neural network operations. In contrast, the QIXAI framework is presented as offering deeper insights into feature importance, inter-layer dependencies, and information propagation. The paper demonstrates the framework on a case study of a CNN for malaria parasite detection, showing how quantum-inspired methods like Singular Value Decomposition (SVD), Principal Component Analysis (PCA), and Mutual Information (MI) can provide interpretable explanations of model behavior. Furthermore, the paper explores the extension of the QIXAI framework to other architectures, including Recurrent Neural Networks (RNNs), Long Short-Term Memory (LSTM) networks, Transformers, and Natural Language Processing (NLP) models. It also discusses the framework’s potential application to generative models and time-series analysis.
With its ability to improve interpretability and transparency across both quantum and classical systems, the QIXAI framework contributes to the development of trustworthy AI systems.

The QIXAI Framework: Enhancing Interpretability in Deep Learning using Quantum-Inspired Techniques

Deep learning models, particularly Convolutional Neural Networks (CNNs), have revolutionized various fields with their exceptional performance. However, their lack of interpretability poses challenges in critical domains such as healthcare, finance, and autonomous systems. To address this issue, the paper introduces the QIXAI Framework (Quantum-Inspired Explainable AI) – a novel approach that enhances the interpretability of neural networks through quantum-inspired techniques.

Drawing on principles from quantum mechanics, such as Hilbert spaces, superposition, entanglement, and eigenvalue decomposition, the QIXAI framework aims to reveal the inner workings of neural networks. The goal is to show how different layers process and combine features to make decisions, reducing the “black box” character of deep learning models.
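The abstract does not spell out how QIXAI maps activations onto quantum states, so the following is only a hypothetical illustration of one quantum-inspired quantity such a framework could compute. It treats a normalized activation vector as a pure state in a bipartite Hilbert space H_A ⊗ H_B and measures how entangled the two halves are via the Schmidt decomposition, which is simply an SVD of the reshaped amplitudes. The function name `entanglement_entropy` and the choice of bipartition are assumptions, not the paper's method.

```python
import numpy as np

def entanglement_entropy(amplitudes, dim_a, dim_b):
    """Entanglement entropy (in bits) between subsystems A and B of a pure state.

    `amplitudes` is read as a state vector in H_A ⊗ H_B with dim_a * dim_b entries,
    e.g. a flattened block of activations (a hypothetical mapping, not QIXAI's).
    """
    psi = np.asarray(amplitudes, dtype=float).ravel()
    if psi.size != dim_a * dim_b:
        raise ValueError("expected dim_a * dim_b amplitudes")
    psi = psi / np.linalg.norm(psi)                  # normalize to a unit state vector
    # Singular values of the dim_a x dim_b coefficient matrix are the Schmidt coefficients
    schmidt = np.linalg.svd(psi.reshape(dim_a, dim_b), compute_uv=False)
    probs = schmidt ** 2                             # eigenvalues of the reduced density matrix
    probs = probs[probs > 1e-12]                     # skip zeros to avoid log(0)
    return float(-np.sum(probs * np.log2(probs)))
```

A product state such as `np.kron([1, 2], [3, 4])` scores 0 bits, while the Bell-like vector `[1, 0, 0, 1]` scores 1 bit. Under this reading, a high score would suggest that two groups of units carry information jointly rather than independently.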

The paper critically assesses existing model-agnostic methods like SHAP and LIME, as well as techniques like Layer-wise Relevance Propagation (LRP). While these methods provide some insight into neural network operations, the authors argue that they fall short of offering a comprehensive and intuitive view of the decision-making process.

According to the authors, the QIXAI framework overcomes these limitations by offering deeper insights into feature importance, inter-layer dependencies, and information propagation. It derives interpretable explanations of model behavior from methods the paper classes as quantum-inspired, such as Singular Value Decomposition (SVD), Principal Component Analysis (PCA), and Mutual Information (MI).
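SVD and PCA are classical linear-algebra tools that the paper groups under its quantum-inspired methods. As a minimal sketch, assuming a layer's activations have been collected into an (n_samples, n_units) matrix, the singular values of the centered matrix give PCA's explained-variance ratios, which indicate how many directions actually carry a layer's signal. The helper names `pca_explained_variance` and `components_for` are hypothetical.

```python
import numpy as np

def pca_explained_variance(activations):
    """Explained-variance ratio of each principal component.

    `activations` is an (n_samples, n_units) matrix, e.g. flattened outputs of one
    CNN layer collected over a batch of images (hypothetical input).
    """
    x = np.asarray(activations, dtype=float)
    x = x - x.mean(axis=0)                           # PCA operates on centered data
    singular_values = np.linalg.svd(x, compute_uv=False)
    variances = singular_values ** 2                 # proportional to component variances
    return variances / variances.sum()

def components_for(ratio, threshold=0.95):
    """Number of leading components needed to reach `threshold` of the variance."""
    count = int(np.searchsorted(np.cumsum(ratio), threshold) + 1)
    return min(count, len(ratio))                    # guard against rounding at the top end
```

A layer whose variance concentrates in a handful of components is effectively low-dimensional, which is one concrete way to talk about how much information it passes on.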

To demonstrate the framework, the paper presents a case study of a CNN for malaria parasite detection, showing how SVD, PCA, and MI yield meaningful, understandable explanations of the model’s decisions.
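Mutual information can be estimated in many ways, and the abstract does not say which estimator the case study uses. The sketch below assumes the simplest plug-in (histogram) estimate between one scalar feature, for example a channel's mean activation per image, and the binary class label. The function name, equal-width binning, and feature choice are illustrative assumptions.

```python
import numpy as np

def mutual_information(feature, labels, bins=10):
    """Plug-in estimate of I(F; Y) in bits between a continuous feature and discrete labels."""
    feature = np.asarray(feature, dtype=float)
    labels = np.asarray(labels)
    # Discretize the feature into equal-width bins indexed 0..bins-1
    edges = np.histogram_bin_edges(feature, bins=bins)
    f_bins = np.digitize(feature, edges[1:-1])
    classes, y_idx = np.unique(labels, return_inverse=True)
    # Joint distribution p(f, y) from co-occurrence counts
    joint = np.zeros((bins, len(classes)))
    np.add.at(joint, (f_bins, y_idx), 1)
    joint /= joint.sum()
    p_f = joint.sum(axis=1, keepdims=True)           # marginal over feature bins
    p_y = joint.sum(axis=0, keepdims=True)           # marginal over classes
    nz = joint > 0                                    # 0 * log 0 is treated as 0
    return float(np.sum(joint[nz] * np.log2(joint[nz] / (p_f @ p_y)[nz])))
```

A feature that perfectly separates two balanced classes scores 1 bit and a constant feature scores 0. Histogram estimates are biased upward on small samples, so scores are best compared across features computed on the same data.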

The paper also explores extending the QIXAI framework to other architectures, including Recurrent Neural Networks (RNNs), Long Short-Term Memory (LSTM) networks, Transformers, and Natural Language Processing (NLP) models, and discusses how it could be applied to generative models and time-series analysis, broadening its potential applicability across domains.

A notable aspect of the QIXAI framework is that it applies to both quantum and classical systems. This versatility could make it a useful tool for improving interpretability and transparency across a wide range of AI models. By providing insights into the decision-making process, the framework contributes to the development of trustworthy AI systems.

In conclusion, the QIXAI framework offers a promising approach to the lack of interpretability in deep learning models. By leveraging quantum-inspired techniques, it aims to deepen our understanding of neural network operations and to support trust and accountability in critical applications. If it delivers on this potential, it could help shape the future of explainable AI.

The paper titled “Quantum-Inspired Explainable AI: Enhancing Neural Network Interpretability” addresses the issue of interpretability in deep learning models, particularly Convolutional Neural Networks (CNNs). The lack of interpretability in these models has been a significant concern in critical domains such as healthcare, finance, and autonomous systems, where trust and accountability are paramount.

The authors propose a novel approach called the QIXAI Framework (Quantum-Inspired Explainable AI) that leverages principles from quantum mechanics to enhance the interpretability of neural networks. By incorporating concepts such as Hilbert spaces, superposition, entanglement, and eigenvalue decomposition, the QIXAI framework aims to uncover how different layers of neural networks process and combine features to make decisions.

The paper critically evaluates existing model-agnostic methods like SHAP and LIME, as well as techniques like Layer-wise Relevance Propagation (LRP), and highlights their limitations in providing a comprehensive understanding of neural network operations. According to the authors, these methods offer only a partial view of feature importance and little insight into inter-layer dependencies and information propagation.

To demonstrate the effectiveness of the QIXAI framework, the authors present a case study on malaria parasite detection using a CNN. They employ quantum-inspired methods such as Singular Value Decomposition (SVD), Principal Component Analysis (PCA), and Mutual Information (MI) to provide interpretable explanations of the model’s behavior. This case study showcases how the QIXAI framework can enhance interpretability in real-world applications.

Furthermore, the paper explores the potential extension of the QIXAI framework to other architectures, including Recurrent Neural Networks (RNNs), Long Short-Term Memory (LSTM) networks, Transformers, and Natural Language Processing (NLP) models. It also discusses its applicability to generative models and time-series analysis. This suggests that the framework’s potential scope extends well beyond CNNs.

One notable aspect of the QIXAI framework is its applicability to both quantum and classical systems. This versatility makes it a compelling solution for enhancing interpretability in a wide range of AI models. By addressing the black box nature of deep learning models, the QIXAI framework contributes to the broader goal of developing trustworthy AI systems that can be understood and trusted by humans.

In summary, the QIXAI framework presents a promising approach to overcome the lack of interpretability in deep learning models. By leveraging principles from quantum mechanics, it offers deeper insights into neural network operations and enables a better understanding of decision-making processes. Its potential extension to various architectures and domains further emphasizes its significance in advancing the field of explainable AI and building trustworthy AI systems.

Read the original article

by jsendak | Oct 17, 2024 | DS Articles

RAG, LLM, Agents, Generative AI, Knowledge Graph, Chunking, Statistical AI, AI Optimization, Large Language Models

Introduction

The increasing prevalence and integration of Artificial Intelligence (AI) across fields has brought numerous advancements, methodologies, and challenges. Key concepts include RAG (Retrieval-Augmented Generation), LLMs (Large Language Models), Generative AI, Knowledge Graphs, Chunking, Statistical AI, and AI Optimization. Each is likely to shape AI’s future trajectory in a distinct way.

Understanding Key Concepts

RAG, LLM, and Generative AI

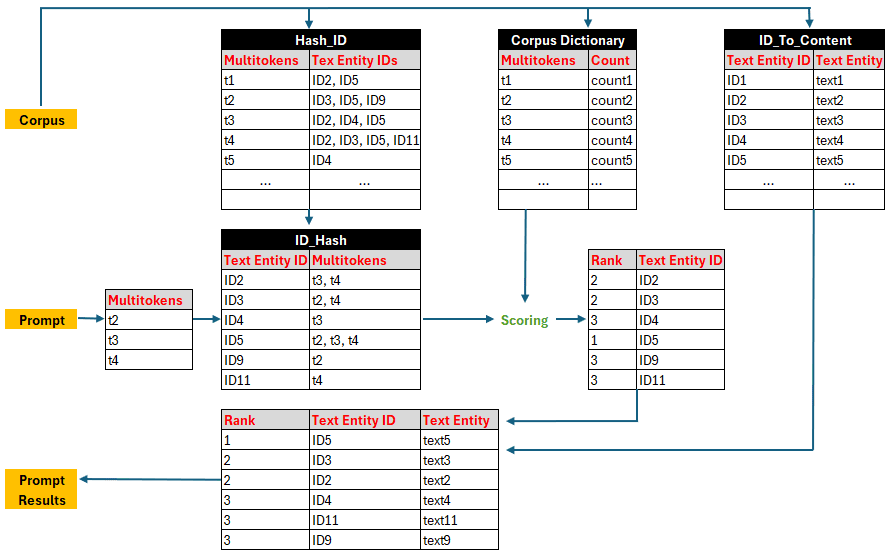

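RAG combines a retrieval component, which fetches relevant passages from an external knowledge source, with a sequence-to-sequence Transformer that generates output conditioned on those passages. Grounding generation in retrieved text makes answers easier to verify and lets a system use knowledge that was not in its training data. LLMs, on the other hand, can understand and produce human language at an unprecedented scale, while Generative AI models learn to generate new data resembling a given dataset, supporting innovation and efficiency.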
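The retrieve-then-generate loop can be sketched as follows. This is a minimal illustration under stated assumptions: the original RAG formulation uses a learned dense retriever and a neural generator, whereas here a bag-of-words cosine similarity stands in for the retriever and the generator call is left out. `retrieve` and `build_prompt` are hypothetical helper names.

```python
import math
import re
from collections import Counter

def _vectorize(text):
    """Bag-of-words term counts (a stand-in for a learned dense encoder)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def _cosine(a, b):
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query, corpus, k=2):
    """Return the k passages most similar to the query."""
    q = _vectorize(query)
    ranked = sorted(corpus, key=lambda doc: _cosine(q, _vectorize(doc)), reverse=True)
    return ranked[:k]

def build_prompt(query, corpus, k=2):
    """Prepend the retrieved passages so a generator can condition on them."""
    context = "\n".join(f"- {p}" for p in retrieve(query, corpus, k))
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"
```

The returned prompt would then be passed to whatever generator the system uses; swapping in a better retriever changes only `retrieve`.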

Knowledge Graph, Chunking, Statistical AI

Knowledge Graphs structure and interlink entities and the relationships between them, giving AI systems an explicit, machine-readable representation of facts. Chunking splits long inputs, such as documents, into smaller, more manageable pieces, which makes them easier to process, helps them fit within a model’s context window, and makes retrieval more precise. Meanwhile, Statistical AI relies on algorithms that learn patterns from large datasets, and its reliability depends heavily on the quality and quantity of that data.
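Chunking is most often used in RAG pipelines, where long documents are split into pieces that can be embedded and retrieved individually. A minimal sketch, assuming whitespace-separated words as the unit (production systems usually count model tokens instead) and a fixed overlap so that text straddling a boundary appears in both neighboring chunks; `chunk_words` is a hypothetical name.

```python
def chunk_words(text, chunk_size=50, overlap=10):
    """Split text into windows of `chunk_size` words that overlap by `overlap` words."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must satisfy 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap            # how far each window advances
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break                          # this window already reaches the end of the text
    return chunks
```

Larger chunks keep more context together but make retrieval coarser; the overlap trades a little redundancy for fewer facts cut in half.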

AI Optimization

This is the practice of improving a model’s performance metrics by tuning its parameters or architecture, often using large datasets and substantial compute. Optimization is a critical component in achieving high-performing AI systems.
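At its core, parameter optimization means adjusting parameters to minimize a loss. The toy example below is a sketch rather than a recipe for large models: it fits a two-parameter linear model with plain gradient descent on mean squared error. Real systems apply the same principle to far more parameters, usually with adaptive optimizers such as Adam.

```python
import numpy as np

def fit_linear(x, y, lr=0.1, steps=2000):
    """Fit y ≈ w * x + b by gradient descent on mean squared error."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    w, b = 0.0, 0.0
    n = len(x)
    for _ in range(steps):
        err = (w * x + b) - y
        # Gradients of mean(err ** 2) with respect to w and b
        grad_w = 2.0 * np.dot(err, x) / n
        grad_b = 2.0 * err.mean()
        # Step against the gradient, scaled by the learning rate
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b
```

The learning rate controls the trade-off between speed and stability: too large and the updates diverge, too small and convergence takes many more steps.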

Long-term Implications

AI’s progress toward a more intelligent and reliable future is closely tied to advancements in these key areas. Considering their integrated nature, their joint improvement is likely to expedite AI’s evolution significantly.

Possible Future Developments

The future of AI points towards a substantial improvement in efficiency, innovative capabilities, reliability, and the ability to handle enormous sets of data. This will cultivate a more interconnected, data-rich world where AI is seamlessly integrated into daily life.

- RAG can ground AI outputs in retrieved, up-to-date information, potentially leading to more reliable, accurate, and efficient machine learning systems.

- LLMs promise a future where AI understands and produces human-like text, paving the way for more sophisticated AI-human interaction.

- Generative AI can make AI systems more inventive, supporting innovative solutions to both existing and unforeseen challenges.

- Knowledge Graphs are poised to create an interconnected web of knowledge, analogous to human understanding.

- Chunking will make large inputs easier to process and retrieve from, improving the efficiency and robustness of AI pipelines.

- Statistical AI will offer more reliable and accurate predictions, fostering trust in AI systems.

- AI Optimization, the catalyst for turning theoretical AI into practical solutions, will continue to advance, ensuring consistently high-performing AIs.

Actionable Advice

- Embrace the changes – AI is poised for enormous growth and the key is to adapt and evolve with it.

- Invest in learning and understanding these key concepts – they lay the foundation for the AI of the future.

- Stay current with AI advancements – this is essential in the rapidly growing AI field.

- Continue to foster trust in AI – with advancements in AI reliability, maintaining public trust should be a priority.


by jsendak | Oct 13, 2024 | AI

arXiv:2410.07599v1 Announce Type: new Abstract: In this work, we present a comprehensive analysis of causal image modeling and introduce the Adventurer series models where we treat images as sequences of patch tokens and employ uni-directional language models to learn visual representations. This modeling paradigm allows us to process images in a recurrent formulation with linear complexity relative to the sequence length, which can effectively address the memory and computation explosion issues posed by high-resolution and fine-grained images. In detail, we introduce two simple designs that seamlessly integrate image inputs into the causal inference framework: a global pooling token placed at the beginning of the sequence and a flipping operation between every two layers. Extensive empirical studies demonstrate the significant efficiency and effectiveness of this causal image modeling paradigm. For example, our base-sized Adventurer model attains a competitive test accuracy of 84.0% on the standard ImageNet-1k benchmark with 216 images/s training throughput, which is 5.3 times more efficient than vision transformers to achieve the same result.

The article “Causal Image Modeling with Adventurer Series Models” presents a novel approach to image processing that treats images as sequences of patch tokens and uses uni-directional language models to learn visual representations. This modeling paradigm allows high-resolution and fine-grained images to be processed efficiently and effectively, addressing the explosion in memory and computation they otherwise cause. The authors introduce two simple designs, namely a global pooling token and a flipping operation, which integrate image inputs into the causal inference framework. Extensive empirical studies demonstrate the efficiency and effectiveness of this approach: the base-sized Adventurer model achieves a competitive test accuracy of 84.0% on the ImageNet-1k benchmark, and the authors report that it is 5.3 times more efficient than vision transformers at reaching the same result.

Introducing Causal Image Modeling: A Paradigm Shift in Visual Representation

In computer vision, the search for efficient and effective image modeling methods is ongoing. Widely used models such as vision transformers process all image patches jointly with bidirectional self-attention, whose cost grows quadratically with the number of patches. Causal image modeling offers an alternative. This article explores the themes and concepts behind causal image modeling and the Adventurer series models introduced in the paper.

The Challenge of High-Resolution and Fine-Grained Images

As imaging technology advances, images keep growing in resolution and detail. This poses a challenge for attention-based models such as vision transformers, whose memory and compute requirements grow quadratically with the number of patch tokens and therefore explode for high-resolution and fine-grained images. Causal image modeling addresses this problem by treating images as sequences of patch tokens processed in a single direction.
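Treating an image as a sequence of patch tokens is a reshaping step. A minimal sketch, assuming non-overlapping 16×16 patches in row-major order (a common ViT-style convention; the abstract does not give Adventurer's patch size), also shows why resolution matters: doubling the side length quadruples the number of tokens, so quadratic self-attention cost grows roughly 16× while a linear-complexity model's cost grows roughly 4×. `patchify` is a hypothetical name.

```python
import numpy as np

def patchify(image, patch=16):
    """Turn an (H, W, C) image into a (num_patches, patch * patch * C) token sequence."""
    h, w, c = image.shape
    if h % patch or w % patch:
        raise ValueError("image height and width must be multiples of the patch size")
    grid = image.reshape(h // patch, patch, w // patch, patch, c)
    grid = grid.transpose(0, 2, 1, 3, 4)             # (rows, cols, patch, patch, C)
    return grid.reshape(-1, patch * patch * c)       # patches in raster (row-major) order

# A 224 x 224 RGB image yields 196 tokens of 768 values; 448 x 448 yields 784 tokens.
tokens = patchify(np.zeros((224, 224, 3)))
```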

By leveraging uni-directional language models, causal image modeling processes images in a recurrent formulation with linear complexity relative to the sequence length. Doubling the number of tokens therefore roughly doubles the cost instead of quadrupling it, which keeps high-resolution inputs tractable. This is a significant advancement in image modeling, as it opens up new possibilities for analyzing large and complex visual datasets.
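The linear-complexity claim follows from processing tokens one at a time with a fixed-size state. The sketch below uses a toy recurrence h_t = decay · h_{t-1} + x_t as a stand-in for a learned uni-directional token mixer. It is not the Adventurer layer, only an illustration of two properties: each output depends only on the current and earlier tokens, and the cost is a single pass over the sequence. `causal_scan` and `decay` are hypothetical.

```python
import numpy as np

def causal_scan(tokens, decay=0.9):
    """Uni-directional recurrence h_t = decay * h_{t-1} + x_t over a token sequence.

    Each output depends only on tokens at or before position t, and the loop
    visits every token once, so cost grows linearly with sequence length.
    """
    tokens = np.asarray(tokens, dtype=float)
    state = np.zeros(tokens.shape[1:])               # fixed-size state, independent of length
    outputs = np.empty_like(tokens)
    for t, x in enumerate(tokens):
        state = decay * state + x
        outputs[t] = state
    return outputs
```

Changing a late token leaves all earlier outputs untouched, which is exactly the restriction the flipping operation is designed to work around.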

The Adventurer Series Models: Revolutionizing Image Modeling

The Adventurer series models represent a pioneering step in the field of causal image modeling. These models seamlessly integrate image inputs into the causal inference framework through two simple designs: a global pooling token placed at the beginning of the sequence and a flipping operation between every two layers.

The global pooling token, placed at the start of the sequence, summarizes the entire image in a single token. Because a causal model lets each token draw only on the tokens before it, putting this summary first gives every subsequent patch token access to global information about the image while the finer details are processed.

The flipping operation addresses a limitation of uni-directional processing: each token can only draw on the tokens that precede it. Reversing the token order between layers lets information flow in the opposite direction in the next layer, so patches can eventually receive context from both sides at negligible extra cost. Together with the global pooling token, it is one of the two designs the authors use to integrate image inputs into the causal framework, and it contributes to the efficiency and effectiveness they report.
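The two designs can be sketched in a few lines. This is a hypothetical illustration, not the authors' code: it assumes the global token is the mean of the patch tokens, that it stays at position 0 when the patch order is reversed, and it reads “between every two layers” as a flip between each pair of consecutive layers. `layers` can be any callables mapping a sequence to a sequence of the same shape.

```python
import numpy as np

def add_global_token(patch_tokens):
    """Prepend the mean of all patch tokens (assumed form of the global pooling token)."""
    pooled = patch_tokens.mean(axis=0, keepdims=True)
    return np.concatenate([pooled, patch_tokens], axis=0)

def flip_patches(sequence):
    """Reverse the patch tokens, keeping the global token at position 0 (an assumption)."""
    return np.concatenate([sequence[:1], sequence[:0:-1]], axis=0)

def run_layers(patch_tokens, layers):
    """Apply causal layers in order, flipping the patch order between consecutive layers."""
    seq = add_global_token(np.asarray(patch_tokens, dtype=float))
    for i, layer in enumerate(layers):
        seq = layer(seq)
        if i < len(layers) - 1:                      # no flip after the final layer
            seq = flip_patches(seq)
    return seq
```

With identity layers, an even number of flips restores the original order, which makes the bookkeeping easy to check.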

Empirical Studies: Unveiling the Power of Causal Image Modeling

To showcase the capabilities of causal image modeling, the authors conducted extensive empirical studies. One notable result is the performance of the base-sized Adventurer model on the standard ImageNet-1k benchmark: it achieves a competitive test accuracy of 84.0% with a training throughput of 216 images/s. More impressively, the authors report that it is 5.3 times more efficient than vision transformers, the standard bidirectional baseline, at reaching the same result.

These results highlight the efficiency and effectiveness of the causal image modeling paradigm. By combining uni-directional language models with simple design choices, the Adventurer series models make a strong case for causal image modeling and could pave the way for future advancements in computer vision.

Conclusion: Causal image modeling represents a paradigm shift in visual representation. By treating images as sequences of patch tokens and employing uni-directional language models, this modeling paradigm addresses the memory and computation explosion issues associated with high-resolution and fine-grained images. The Adventurer series models, with their simple yet effective designs, push the boundaries of image modeling and, according to the authors, deliver substantially better efficiency than vision transformers at comparable accuracy. The future of computer vision looks promising as causal image modeling continues to evolve.

The paper arXiv:2410.07599v1 introduces a novel approach to causal image modeling and presents the Adventurer series models. The authors propose treating images as sequences of patch tokens and utilizing uni-directional language models to learn visual representations. This modeling paradigm allows for the recurrent processing of images, with linear complexity relative to the sequence length. This is a significant advancement as it addresses the memory and computation explosion challenges associated with high-resolution and fine-grained images.

The authors describe two key design components that integrate image inputs into the causal inference framework. First, a global pooling token placed at the beginning of the sequence gives later tokens access to global information about the image. Second, a flipping operation between every two layers reverses the token order, so that information can propagate in both directions even though each layer processes the sequence in only one direction.

The empirical studies conducted by the authors demonstrate the efficiency and effectiveness of their proposed causal image modeling paradigm. The base-sized Adventurer model achieves a competitive test accuracy of 84.0% on the standard ImageNet-1k benchmark with a training throughput of 216 images/s. This is particularly impressive as it is 5.3 times more efficient than vision transformers, which achieve the same level of accuracy. This improvement in efficiency is crucial, especially in scenarios where large-scale image datasets need to be processed in a computationally efficient manner.

Overall, the introduction of the Adventurer series models and the causal image modeling paradigm presented in this paper have the potential to significantly impact the field of computer vision. The ability to process images as sequences of patch tokens and leverage uni-directional language models opens up new possibilities for efficient and effective image analysis. Further research and experimentation in this area could lead to even more advanced models and improved performance on various image recognition tasks.


by jsendak | Oct 4, 2024 | DS Articles

Smart transformers are cutting-edge technologies necessary for modernizing aging power grids. These data-driven devices can manage electricity in ways that improve on traditional infrastructure. By distributing power evenly and integrating sustainable energy sources, smart grids could be the solution to powering homes and businesses efficiently worldwide. (Excerpt from “The impact of smart transformers on modernizing the power grid.”)

Long-term implications of Smart Transformers on Modernizing Power Grids

Smart transformers are beginning to reshape energy infrastructure, and their impact on modernizing power grids is becoming more evident. They help distribute power evenly and make it easier to integrate sustainable energy sources. As such, they could be part of the solution to powering homes and businesses efficiently worldwide.

Impact on Renewable Energy Integration

One significant advantage of smart transformers is that they support the integration of renewable energy sources. In the long term, this could drastically change the way energy is generated and consumed, leading to a more sustainable future. As a result, the dependence on non-renewable energy sources might decrease over time.

Potential for Energy Efficiency

Smart transformers are designed to manage electricity more efficiently than traditional infrastructure. Their data-driven capabilities can highlight areas for improvement and, consequently, reduce energy waste. Over time, this could lead to energy savings, cost reductions, and a reduction in carbon emissions.

Future of Aging Power Grids

With the introduction of smart transformers, aging power grids can be modernized. This could breathe new life into aging infrastructure, saving costs on extensive repair work or complete replacements. Just as importantly, it could create more stable, reliable power sources for countries around the world.

Actionable Insights

Given the potential of smart transformers in the energy industry, stakeholders can consider the following steps:

- Invest in Research and Development: To fully realize the potential of smart transformers, significant investment in research and development is required. Advancements in this technology can only be achieved through rigorous testing and experimentation.

- Policy Changes: Governments should consider implementing policies that encourage the use of smart transformers. This can include financial incentives or regulations that mandate the use of such technologies in new power grid developments.

- Public Awareness: Educate the public about the advantages of smart transformers and the role they play in sustainable energy production. An informed public is more likely to support initiatives related to renewable energy and energy efficiency.

Conclusion

There is no doubt that smart transformers have the potential to drastically modernize power grids. But to fully harness the potential of this technology, significant investments, supportive policies, and public awareness are required. Taking the right actions today can prepare us for a future where energy is sustainable, efficient, and reliable.


by jsendak | Oct 4, 2024 | AI

arXiv:2410.01816v1 Announce Type: new Abstract: Automatic scene generation is an essential area of research with applications in robotics, recreation, visual representation, training and simulation, education, and more. This survey provides a comprehensive review of the current state-of-the-arts in automatic scene generation, focusing on techniques that leverage machine learning, deep learning, embedded systems, and natural language processing (NLP). We categorize the models into four main types: Variational Autoencoders (VAEs), Generative Adversarial Networks (GANs), Transformers, and Diffusion Models. Each category is explored in detail, discussing various sub-models and their contributions to the field. We also review the most commonly used datasets, such as COCO-Stuff, Visual Genome, and MS-COCO, which are critical for training and evaluating these models. Methodologies for scene generation are examined, including image-to-3D conversion, text-to-3D generation, UI/layout design, graph-based methods, and interactive scene generation. Evaluation metrics such as Frechet Inception Distance (FID), Kullback-Leibler (KL) Divergence, Inception Score (IS), Intersection over Union (IoU), and Mean Average Precision (mAP) are discussed in the context of their use in assessing model performance. The survey identifies key challenges and limitations in the field, such as maintaining realism, handling complex scenes with multiple objects, and ensuring consistency in object relationships and spatial arrangements. By summarizing recent advances and pinpointing areas for improvement, this survey aims to provide a valuable resource for researchers and practitioners working on automatic scene generation.

The article “Automatic Scene Generation: A Comprehensive Survey of Techniques and Challenges” delves into the field of automatic scene generation and its wide-ranging applications in robotics, recreation, visual representation, training and simulation, education, and more. The survey focuses on the use of machine learning, deep learning, embedded systems, and natural language processing (NLP) techniques in scene generation. The models are categorized into four main types: Variational Autoencoders (VAEs), Generative Adversarial Networks (GANs), Transformers, and Diffusion Models. Each category is thoroughly explored, highlighting different sub-models and their contributions. The article also examines the commonly used datasets crucial for training and evaluating these models, such as COCO-Stuff, Visual Genome, and MS-COCO. Methodologies for scene generation, including image-to-3D conversion, text-to-3D generation, UI/layout design, graph-based methods, and interactive scene generation, are extensively discussed. The evaluation metrics used to assess model performance, such as Frechet Inception Distance (FID), Kullback-Leibler (KL) Divergence, Inception Score (IS), Intersection over Union (IoU), and Mean Average Precision (mAP), are discussed in the context of their use. The survey identifies key challenges and limitations in the field, such as maintaining realism, handling complex scenes with multiple objects, and ensuring consistency in object relationships and spatial arrangements. By summarizing recent advances and highlighting areas for improvement, the survey aims to be a valuable resource for researchers and practitioners in automatic scene generation.

Exploring the Future of Automatic Scene Generation

Automatic scene generation has emerged as a vital field of research with applications across domains including robotics, recreation, visual representation, training, simulation, and education. Using machine learning, deep learning, natural language processing (NLP), and embedded systems, researchers have made significant progress in developing models that generate realistic scenes. This article explores the underlying themes and concepts covered by the survey, highlighting innovative techniques and the open problems it identifies.

Categories of Scene Generation Models

Within the realm of automatic scene generation, four main types of models have garnered significant attention and success:

- Variational Autoencoders (VAEs): VAEs are generative models that learn a latent-space representation of a given dataset. Trained with variational inference, they pair an encoder that maps inputs to a distribution over latent variables with a decoder that reconstructs inputs from latent samples; new scenes are generated by sampling latent variables from the prior and decoding them.

- Generative Adversarial Networks (GANs): GANs consist of a generator and a discriminator that compete against each other, driving the generator to create increasingly realistic scenes. This adversarial training process has been highly influential in scene generation.

- Transformers: Transformers, originally introduced for natural language processing tasks, have shown promise in scene generation. Using self-attention to model relationships between tokens, such as objects or image patches, they can generate coherent, contextually aware scenes.

- Diffusion Models: Diffusion models learn to reverse a gradual noising process. Starting from random noise, they iteratively denoise the sample, optionally conditioned on inputs such as text, progressively refining their output and producing high-quality scenes.
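The forward (noising) half of a diffusion model has a closed form. A minimal sketch of the DDPM-style formulation, assuming a linear noise schedule with 1,000 steps and β running from 1e-4 to 0.02 (common defaults, not values taken from the survey): a network would be trained to predict `eps` from the noisy sample and the step index, and generation runs the learned reverse steps starting from pure noise. Function names are hypothetical.

```python
import numpy as np

def linear_alpha_bars(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """alpha_bar_t = prod_{s<=t} (1 - beta_s) for a linear noise schedule."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    return np.cumprod(1.0 - betas)

def noise_sample(x0, t, alpha_bars, rng):
    """Draw x_t ~ q(x_t | x_0) = N(sqrt(alpha_bar_t) * x_0, (1 - alpha_bar_t) * I).

    Returns the noisy sample and the noise used; a denoising network would be
    trained to predict that noise from (x_t, t).
    """
    eps = rng.standard_normal(np.shape(x0))
    a = alpha_bars[t]
    return np.sqrt(a) * x0 + np.sqrt(1.0 - a) * eps, eps

rng = np.random.default_rng(0)
alpha_bars = linear_alpha_bars()
x0 = np.ones((4, 4))                                      # stand-in for a tiny "scene" image
xt_early, _ = noise_sample(x0, 10, alpha_bars, rng)       # still close to x0
xt_late, _ = noise_sample(x0, 999, alpha_bars, rng)       # almost pure noise
```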

By exploring each category in detail, the survey covers the sub-models and techniques that have contributed to the advancement of automatic scene generation.

Key Datasets for Training and Evaluation

To train and evaluate automatic scene generation models, researchers rely on various datasets. The following datasets have become crucial in the field:

- COCO-Stuff: The COCO-Stuff dataset provides a rich collection of images labeled with object categories, stuff regions, and semantic segmentation annotations. This dataset aids in training models for generating diverse and detailed scenes.

- Visual Genome: The Visual Genome dataset offers a large-scale structured database of scene graphs, containing detailed information about objects, attributes, relationships, and regions. It enables the development of models that can capture complex scene relationships.

- MS-COCO: The MS-COCO dataset is widely used for object detection, segmentation, and captioning tasks. Its extensive annotations and large scale make it an essential resource for training and evaluating scene generation models.

Understanding the importance of these datasets helps researchers make informed decisions about training and evaluating their models.

Innovative Methodologies for Scene Generation

Automatic scene generation encompasses a range of methodologies beyond just generating images. Some notable techniques include:

- Image-to-3D Conversion: Converting 2D images to 3D scenes opens up opportunities for interactive 3D visualization and manipulation. Advancements in deep learning have propelled image-to-3D conversion techniques, enabling the generation of realistic 3D scenes from 2D images.

- Text-to-3D Generation: By leveraging natural language processing and deep learning, researchers have explored techniques for generating 3D scenes from textual descriptions. This lets users create scenes intuitively by describing them in natural language.

- UI/Layout Design: Automatic generation of user interfaces and layouts holds promise for fields such as graphic design and web development. Models trained on large datasets of existing UI designs can generate candidate layouts for rapid prototyping.

- Graph-Based Methods: These methods represent a scene as a graph, typically a scene graph whose nodes are objects and whose edges are relationships such as “left of” or “on top of”. Conditioning generation on such graphs lets models produce scenes with complex object relationships whose spatial arrangements match those found in real-world scenarios.

- Interactive Scene Generation: Enabling users to actively participate in the scene generation process can enhance creativity and customization. Interactive scene generation techniques empower users to iterate and fine-tune generated scenes, leading to more personalized outputs.
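
To illustrate how a graph representation can constrain a scene, the following toy sketch encodes a scene graph as (subject, predicate, object) triples and propagates hand-picked offsets along its edges to place object centres. It is a hypothetical, rule-based stand-in: the graph-based models the survey covers learn this mapping (for example with graph neural networks) rather than using fixed offsets, and the predicate vocabulary and offset values are assumptions.

```python
from dataclasses import dataclass, field

# Assumed predicate vocabulary: offset of the subject's centre from the object's
# centre, in normalized image coordinates where y grows downward.
OFFSETS = {
    "left of": (-0.25, 0.0),
    "right of": (0.25, 0.0),
    "above": (0.0, -0.25),
    "below": (0.0, 0.25),
}

@dataclass
class SceneGraph:
    objects: list                                  # node labels, e.g. ["tree", "sky"]
    relations: list = field(default_factory=list)  # edges as (subject, predicate, object) indices

def clamp(v):
    """Keep a coordinate inside the unit image square."""
    return min(max(v, 0.0), 1.0)

def layout_from_graph(graph, anchor=(0.5, 0.5)):
    """Place object centres by propagating OFFSETS along the graph's edges.

    Object 0 is pinned at `anchor`; an object is placed as soon as a neighbour is.
    Objects unreachable from object 0 default to the anchor."""
    centres = {0: anchor} if graph.objects else {}
    changed = True
    while changed:
        changed = False
        for s, pred, o in graph.relations:
            dx, dy = OFFSETS[pred]
            if o in centres and s not in centres:    # place subject relative to object
                centres[s] = (centres[o][0] + dx, centres[o][1] + dy)
                changed = True
            elif s in centres and o not in centres:  # invert the offset to place object
                centres[o] = (centres[s][0] - dx, centres[s][1] - dy)
                changed = True
    return [(name, tuple(clamp(v) for v in centres.get(i, anchor)))
            for i, name in enumerate(graph.objects)]

graph = SceneGraph(["tree", "sky", "dog"], [(1, "above", 0), (2, "left of", 0)])
print(layout_from_graph(graph))
```

Conflicting or cyclic relations are resolved here by whichever edge is visited first, which is one reason learned graph-based models are preferred over fixed rules.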

These methodologies expand the scope of automatic scene generation beyond 2D image synthesis and have potential applications in fields ranging from graphic design and web development to robotics, simulation, and education.

Evaluating Model Performance

Measuring model performance is crucial for assessing the quality of automatic scene generation. Several evaluation metrics are commonly employed:

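- Fréchet Inception Distance (FID): FID compares the distributions of real and generated scenes by fitting a Gaussian to the Inception-v3 feature embeddings of each set and computing the Fréchet distance between the two Gaussians. Lower FID values indicate generated scenes that are statistically closer to real ones, which is taken as a sign of better quality and realism.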

- Kullback-Leibler (KL) Divergence: KL divergence quantifies the difference between the distribution of real scenes and generated scenes. Lower KL divergence indicates closer alignment between the distributions.

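- Inception Score (IS): IS uses a pretrained Inception classifier to evaluate generated scenes: the score is high when each image is classified confidently (a proxy for quality) and when the predicted classes vary across images (a proxy for diversity). Higher IS values indicate better quality and diversity, although IS does not compare generated scenes against real data.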

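- Intersection over Union (IoU): IoU measures the overlap between two regions, such as a generated object's box or mask and the corresponding reference region, as the area of their intersection divided by the area of their union. Higher IoU values indicate closer agreement with the reference layout or segmentation.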

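- Mean Average Precision (mAP): mAP assesses how accurately objects in generated scenes can be detected and localized, for example by running a pretrained object detector on them and comparing its detections with the intended layout. Higher mAP values represent higher accuracy.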
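
Three of these metrics are simple enough to compute directly. The sketch below implements box IoU, discrete KL divergence, and the Inception Score formula IS = exp(E_x[KL(p(y|x) || p(y))]) in plain Python. It assumes boxes are given as corner coordinates (x_min, y_min, x_max, y_max) and that the class probabilities for IS come from a pretrained classifier run on generated images, which is not included. FID and mAP are omitted because they need, respectively, Inception-v3 features with a matrix square root and a full detection pipeline.

```python
import math

def box_iou(a, b):
    """Intersection over Union of two boxes given as (x_min, y_min, x_max, y_max)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) in nats for discrete distributions given as equal-length lists.

    Terms with p_i = 0 contribute nothing; eps guards against division by q_i = 0.
    KL is asymmetric: KL(p || q) generally differs from KL(q || p)."""
    return sum(pi * math.log(pi / max(qi, eps)) for pi, qi in zip(p, q) if pi > 0)

def inception_score(class_probs):
    """IS = exp(mean over images x of KL(p(y|x) || p(y))).

    Each row of class_probs is a classifier's predicted class distribution p(y|x)
    for one generated image; p(y) is the average of the rows."""
    n, k = len(class_probs), len(class_probs[0])
    marginal = [sum(row[j] for row in class_probs) / n for j in range(k)]
    mean_kl = sum(kl_divergence(row, marginal) for row in class_probs) / n
    return math.exp(mean_kl)

print(box_iou((0, 0, 2, 2), (1, 1, 3, 3)))       # overlap 1, union 7
print(inception_score([[1.0, 0.0], [0.0, 1.0]]))  # confident and diverse: about 2
print(inception_score([[0.5, 0.5], [0.5, 0.5]]))  # uninformative predictions: 1.0
```

With k classes, IS ranges from 1 (every image receives the same prediction) to k (each image is classified with certainty and all classes occur equally often), which is why the two-class example scores about 2.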

These evaluation metrics serve as benchmarks for researchers aiming to improve their scene generation models.

Challenges and Future Directions

While automatic scene generation has seen remarkable advancements, challenges and limitations persist:

- Maintaining Realism: Generating photorealistic scenes that are indistinguishable from real-world ones remains a challenge. Advances in generative models and computer vision algorithms are needed to overcome this hurdle.

- Handling Complex Scenes: Scenes with multiple objects and intricate relationships pose challenges in generating coherent and visually appealing outputs. Advancements in graph-based methods and scene understanding can aid in addressing this limitation.

- Ensuring Consistency in Object Relationships: Generating scenes with consistent object relationships in terms of scale, position, and orientation is essential for producing realistic outputs. Advancements in learning contextual information and spatial reasoning are necessary to tackle this issue.
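
One way to quantify relationship consistency is to check whether the object boxes of a generated scene satisfy the relations it was conditioned on. The sketch below is a hypothetical scoring function, not a metric from the survey: it covers only position (via box centres) and scale (via box area), ignores orientation, and assumes boxes in (x_min, y_min, x_max, y_max) form with y growing downward, obtained for example from a detector run on the generated image.

```python
# Hypothetical consistency score for generated layouts (not a metric from the survey).
# Boxes are (x_min, y_min, x_max, y_max) with y growing downward.

def centre(box):
    return ((box[0] + box[2]) / 2, (box[1] + box[3]) / 2)

def area(box):
    return (box[2] - box[0]) * (box[3] - box[1])

# Assumed predicate vocabulary: each test takes (subject_box, object_box).
PREDICATES = {
    "left of": lambda s, o: centre(s)[0] < centre(o)[0],
    "right of": lambda s, o: centre(s)[0] > centre(o)[0],
    "above": lambda s, o: centre(s)[1] < centre(o)[1],
    "below": lambda s, o: centre(s)[1] > centre(o)[1],
    "bigger than": lambda s, o: area(s) > area(o),
}

def relation_consistency(boxes, relations):
    """Fraction of (subject, predicate, object) triples that the boxes satisfy."""
    if not relations:
        return 1.0  # nothing to violate
    satisfied = sum(PREDICATES[pred](boxes[s], boxes[o]) for s, pred, o in relations)
    return satisfied / len(relations)

# Toy generated layout: the dog was meant to be bigger than the tree but is not.
boxes = {
    "sky": (0.0, 0.0, 1.0, 0.3),
    "tree": (0.4, 0.2, 0.7, 0.9),
    "dog": (0.1, 0.6, 0.3, 0.9),
}
relations = [("sky", "above", "tree"), ("dog", "left of", "tree"), ("dog", "bigger than", "tree")]
print(relation_consistency(boxes, relations))  # 2 of 3 relations hold
```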

By summarizing recent advances and identifying areas for improvement, this survey aims to serve as a valuable resource for researchers and practitioners working on automatic scene generation. Through collaborative efforts and continued research, the future of automatic scene generation holds immense potential, empowering us to create immersive and realistic virtual environments.

The paper arXiv:2410.01816v1 provides a comprehensive survey of the current state-of-the-art in automatic scene generation, with a focus on techniques that utilize machine learning, deep learning, embedded systems, and natural language processing (NLP). Automatic scene generation has wide-ranging applications in various fields such as robotics, recreation, visual representation, training and simulation, education, and more. This survey aims to serve as a valuable resource for researchers and practitioners in this area.

The paper categorizes the models used in automatic scene generation into four main types: Variational Autoencoders (VAEs), Generative Adversarial Networks (GANs), Transformers, and Diffusion Models. Each category is explored in detail, discussing various sub-models and their contributions to the field. This categorization provides a clear overview of the different approaches used in automatic scene generation and allows researchers to understand the strengths and weaknesses of each model type.

The survey also highlights the importance of datasets in training and evaluating scene generation models. Commonly used datasets such as COCO-Stuff, Visual Genome, and MS-COCO are reviewed, emphasizing their significance in advancing the field. By understanding the datasets used, researchers can better compare and benchmark their own models against existing ones.

The survey also examines methodologies for scene generation, including image-to-3D conversion, text-to-3D generation, UI/layout design, graph-based methods, and interactive scene generation. This broad coverage shows the range of approaches available for generating scenes automatically and points to avenues for future research and development.

Evaluation metrics play a crucial role in assessing the performance of scene generation models. The survey discusses several commonly used metrics, such as Fréchet Inception Distance (FID), Kullback-Leibler (KL) Divergence, Inception Score (IS), Intersection over Union (IoU), and Mean Average Precision (mAP). Understanding what each metric measures, and in what context, helps researchers evaluate and compare scene generation models effectively.

Despite the advancements in automatic scene generation, the survey identifies key challenges and limitations in the field. Maintaining realism, handling complex scenes with multiple objects, and ensuring consistency in object relationships and spatial arrangements are some of the challenges highlighted. These challenges present opportunities for future research and improvements in automatic scene generation techniques.

Overall, this survey serves as a comprehensive review of the current state-of-the-art in automatic scene generation. By summarizing recent advances, categorizing models, exploring methodologies, discussing evaluation metrics, and identifying challenges, it provides a valuable resource for researchers and practitioners working on automatic scene generation. The insights and analysis provided in this survey can guide future research directions and contribute to advancements in this field.
